Device Passthrough¶

A critical part of virtualization is virtualizing devices: exposing all aspects of a device including its I/O, interrupts, DMA, and configuration. There are three typical device virtualization methods: emulation, para-virtualization, and passthrough. All emulation, para-virtualization and passthrough are used in ACRN project. Device emulation is discussed in I/O Emulation high-level design, para-virtualization is discussed in Virtio devices high-level design and device passthrough will be discussed here.

In the ACRN project, device emulation means emulating all existing hardware resource through a software component device model running in the Service OS (SOS). Device emulation must maintain the same SW interface as a native device, providing transparency to the VM software stack. Passthrough implemented in hypervisor assigns a physical device to a VM so the VM can access the hardware device directly with minimal (if any) VMM involvement.

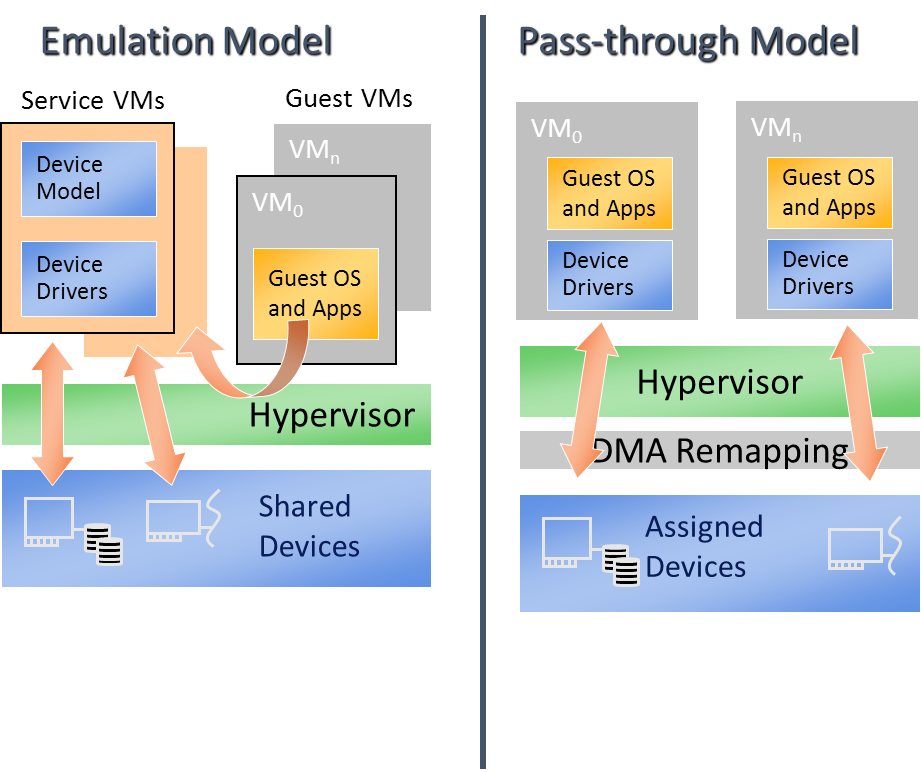

The difference between device emulation and passthrough is shown in Figure 183. You can notice device emulation has a longer access path which causes worse performance compared with passthrough. Passthrough can deliver near-native performance, but can’t support device sharing.

Figure 183 Difference between Emulation and passthrough

Passthrough in the hypervisor provides the following functionalities to allow VM to access PCI devices directly:

- VT-d DMA Remapping for PCI devices: hypervisor will setup DMA remapping during VM initialization phase.

- VT-d Interrupt-remapping for PCI devices: hypervisor will enable VT-d interrupt-remapping for PCI devices for security considerations.

- MMIO Remapping between virtual and physical BAR

- Device configuration Emulation

- Remapping interrupts for PCI devices

- ACPI configuration Virtualization

- GSI sharing violation check

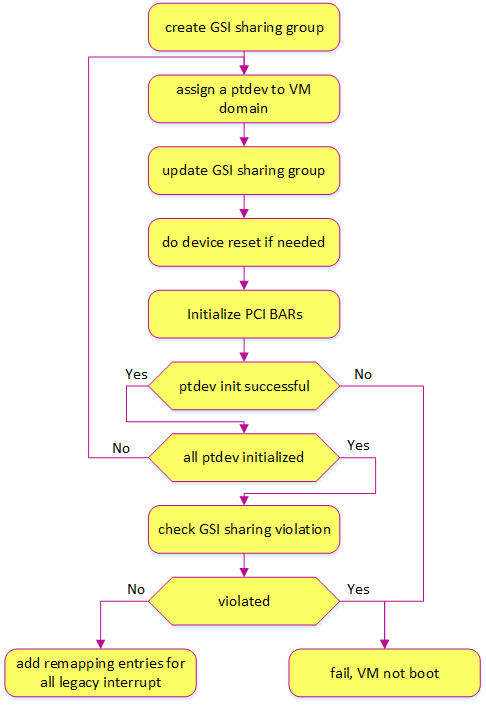

The following diagram details passthrough initialization control flow in ACRN for post-launched VM:

Figure 184 Passthrough devices initialization control flow

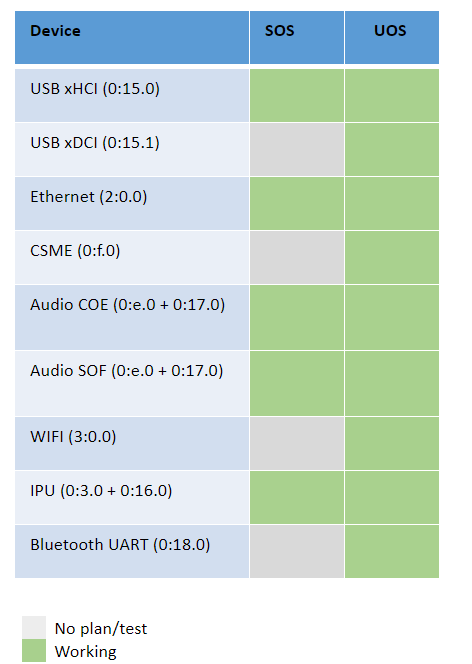

Passthrough Device status¶

Most common devices on supported platforms are enabled for passthrough, as detailed here:

Figure 185 Passthrough Device Status

Owner of Passthrough Devices¶

ACRN hypervisor will do PCI enumeration to discover the PCI devices on the platform. According to the hypervisor/VM configurations, the owner of these PCI devices can be one the following 4 cases:

- Hypervisor: hypervisor uses UART device as the console in debug version for debug purpose, so the UART device is owned by hypervisor and is not visible to any VM. For now, UART is the only pci device could be owned by hypervisor.

- Pre-launched VM: The passthrough devices will be used in a pre-launched VM is pre-defined in VM configuration. These passthrough devices are owned by the pre-launched VM after the VM is created. These devices will not be removed from the pre-launched VM. There could be pre-launched VM(s) in logical partition mode and hybrid mode.

- Service VM: All the passthrough devices except these described above (owned by hypervisor or pre-launched VM(s)) are assigned to Service VM. And some of these devices can be assigned to a post-launched VM according to the passthrough device list specified in the parameters of the ACRN DM.

- Post-launched VM: A list of passthrough devices can be specified in the parameters of the ACRN DM. When creating a post-launched VM, these specified devices will be moved from Service VM domain to the post-launched VM domain. After the post-launched VM is powered-off, these devices will be moved back to Service VM domain.

VT-d DMA Remapping¶

To enable passthrough, for VM DMA access the VM can only support GPA, while physical DMA requires HPA. One work-around is building identity mapping so that GPA is equal to HPA, but this is not recommended as some VM don’t support relocation well. To address this issue, Intel introduces VT-d in the chipset to add one remapping engine to translate GPA to HPA for DMA operations.

Each VT-d engine (DMAR Unit), maintains a remapping structure similar to a page table with device BDF (Bus/Dev/Func) as input and final page table for GPA/HPA translation as output. The GPA/HPA translation page table is similar to a normal multi-level page table.

VM DMA depends on Intel VT-d to do the translation from GPA to HPA, so we need to enable VT-d IOMMU engine in ACRN before we can passthrough any device. Service VM in ACRN is a VM running in non-root mode which also depends on VT-d to access a device. In Service VM DMA remapping engine settings, GPA is equal to HPA.

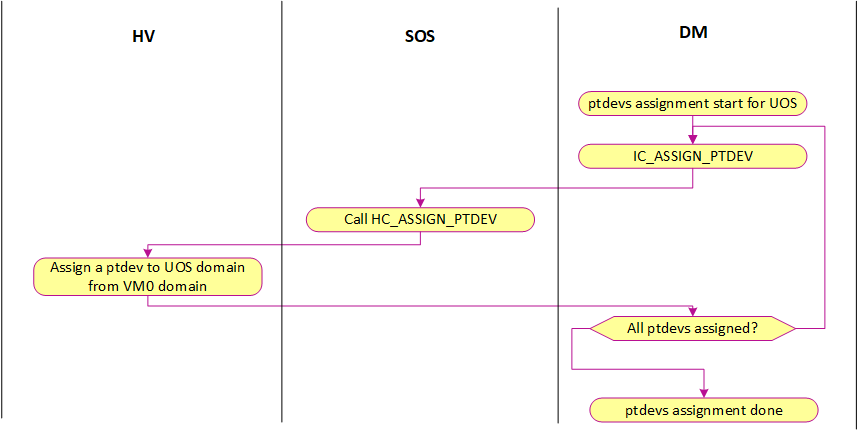

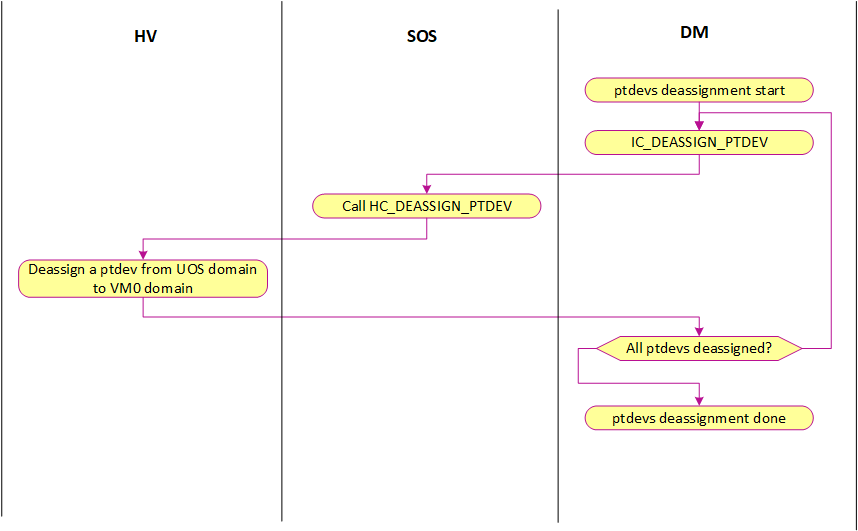

ACRN hypervisor checks DMA-Remapping Hardware unit Definition (DRHD) in host DMAR ACPI table to get basic info, then sets up each DMAR unit. For simplicity, ACRN reuses EPT table as the translation table in DMAR unit for each passthrough device. The control flow of assigning and de-assigning a passthrough device to/from a post-launched VM is shown in the following figures:

Figure 186 ptdev assignment control flow

Figure 187 ptdev de-assignment control flow

VT-d Interrupt-remapping¶

The VT-d interrupt-remapping architecture enables system software to control and censor external interrupt requests generated by all sources including those from interrupt controllers (I/OxAPICs), MSI/MSI-X capable devices including endpoints, root-ports and Root-Complex integrated end-points. ACRN forces to enabled VT-d interrupt-remapping feature for security reasons. If the VT-d hardware doesn’t support interrupt-remapping, then ACRN will refuse to boot VMs. VT-d Interrupt-remapping is NOT related to the translation from physical interrupt to virtual interrupt or vice versa. The term VT-d interrupt-remapping remaps the interrupt index in the VT-d interrupt-remapping table to the physical interrupt vector after checking the external interrupt request is valid. Translation physical vector to virtual vector is still needed to be done by hypervisor, which is also described in the below section Interrupt Remapping.

MMIO Remapping¶

For PCI MMIO BAR, hypervisor builds EPT mapping between virtual BAR and physical BAR, then VM can access MMIO directly. There is one exception, MSI-X table is also in a MMIO BAR. Hypervisor needs to trap the accesses to MSI-X table. So the page(s) having MSI-X table should not be accessed by guest directly. EPT mapping is not built for these pages having MSI-X table.

Device configuration emulation¶

The PCI configuration space can be accessed by a PCI-compatible Configuration Mechanism (IO port 0xCF8/CFC) and the PCI Express Enhanced Configuration Access Mechanism (PCI MMCONFIG). The ACRN hypervisor traps this PCI configuration space access and emulate it. Refer to Split Device Model for details.

MSI-X table emulation¶

VM accesses to MSI-X table should be trapped so that hypervisor has the information to map the virtual vector and physical vector. EPT mapping should be skipped for the 4KB pages having MSI-X table.

There are three situations for the emulation of MSI-X table:

- Service VM: accesses to MSI-X table are handled by HV MMIO handler (4KB adjusted up and down). HV will remap interrupts.

- Post-launched VM: accesses to MSI-X Tables are handled by DM MMIO handler (4KB adjusted up and down) and when DM (Service VM) writes to the table, it will be intercepted by HV MMIO handler and HV will remap interrupts.

- Pre-launched VM: Writes to MMIO region in MSI-X Table BAR handled by HV MMIO handler. If the offset falls within the MSI-X table (offset, offset+tables_size), HV remaps interrupts.

Interrupt Remapping¶

When the physical interrupt of a passthrough device happens, hypervisor has

to distribute it to the relevant VM according to interrupt remapping

relationships. The structure ptirq_remapping_info is used to define

the subordination relation between physical interrupt and VM, the

virtual destination, etc. See the following figure for details:

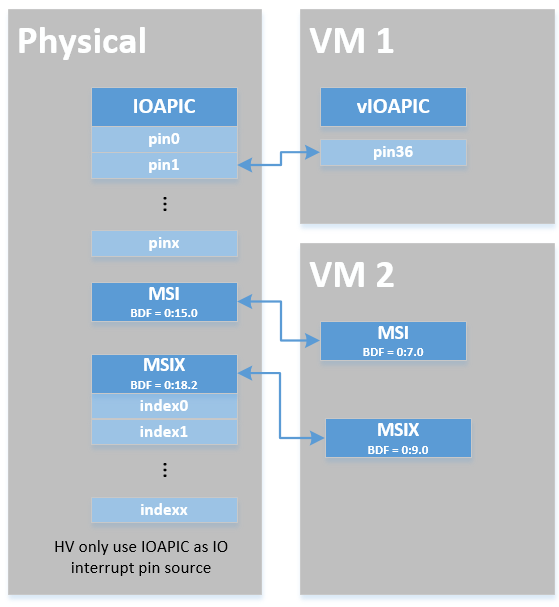

Figure 188 Remapping of physical interrupts

There are two different types of interrupt source: IOAPIC and MSI. The hypervisor will record different information for interrupt distribution: physical and virtual IOAPIC pin for IOAPIC source, physical and virtual BDF and other info for MSI source.

Service VM passthrough is also in the scope of interrupt remapping which is done on-demand rather than on hypervisor initialization.

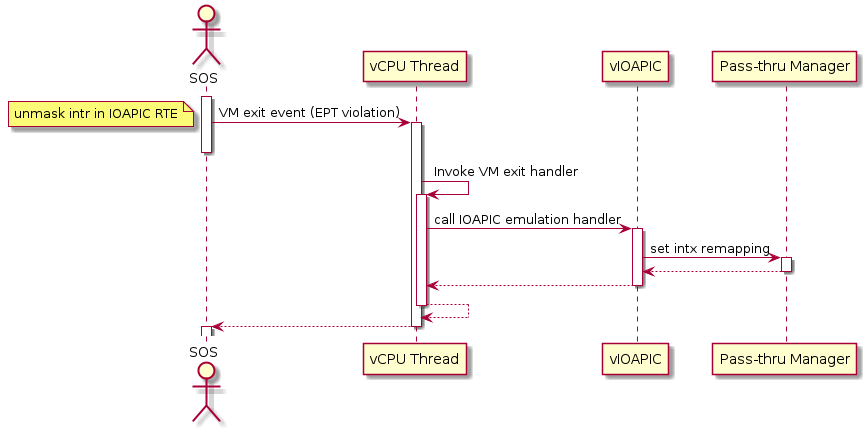

Figure 189 Initialization of remapping of virtual IOAPIC interrupts for Service VM

Figure 189 above illustrates how remapping of (virtual) IOAPIC interrupts are remapped for Service VM. VM exit occurs whenever Service VM tries to unmask an interrupt in (virtual) IOAPIC by writing to the Redirection Table Entry (or RTE). The hypervisor then invokes the IOAPIC emulation handler (refer to I/O Emulation high-level design for details on I/O emulation) which calls APIs to set up a remapping for the to-be-unmasked interrupt.

Remapping of (virtual) PIC interrupts are set up in a similar sequence:

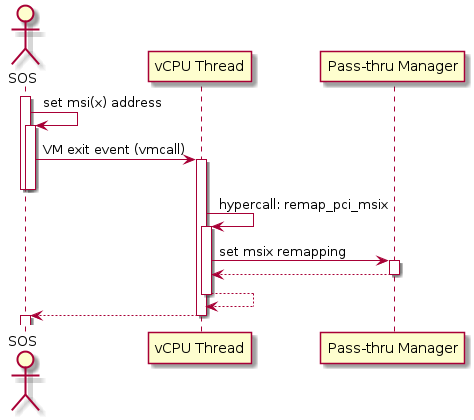

Figure 190 Initialization of remapping of virtual MSI for Service VM

This figure illustrates how mappings of MSI or MSIX are set up for Service VM. Service VM is responsible for issuing a hypercall to notify the hypervisor before it configures the PCI configuration space to enable an MSI. The hypervisor takes this opportunity to set up a remapping for the given MSI or MSIX before it is actually enabled by Service VM.

When the UOS needs to access the physical device by passthrough, it uses the following steps:

- UOS gets a virtual interrupt

- VM exit happens and the trapped vCPU is the target where the interrupt will be injected.

- Hypervisor will handle the interrupt and translate the vector according to ptirq_remapping_info.

- Hypervisor delivers the interrupt to UOS.

When the Service VM needs to use the physical device, the passthrough is also active because the Service VM is the first VM. The detail steps are:

- Service VM get all physical interrupts. It assigns different interrupts for different VMs during initialization and reassign when a VM is created or deleted.

- When physical interrupt is trapped, an exception will happen after VMCS has been set.

- Hypervisor will handle the VM exit issue according to ptirq_remapping_info and translates the vector.

- The interrupt will be injected the same as a virtual interrupt.

ACPI Virtualization¶

ACPI virtualization is designed in ACRN with these assumptions:

- HV has no knowledge of ACPI,

- Service VM owns all physical ACPI resources,

- UOS sees virtual ACPI resources emulated by device model.

Some passthrough devices require physical ACPI table entry for initialization. The device model will create such device entry based on the physical one according to vendor ID and device ID. Virtualization is implemented in Service VM device model and not in scope of the hypervisor. For pre-launched VM, ACRN hypervisor doesn’t support the ACPI virtualization, so devices relying on ACPI table are not supported.

GSI Sharing Violation Check¶

All the PCI devices that are sharing the same GSI should be assigned to the same VM to avoid physical GSI sharing between multiple VMs. In logical partition mode or hybrid mode, the PCI devices assigned to pre-launched VM is statically pre-defined. Developers should take care not to violate the rule. For post-launched VM, devices that don’t support MSI, ACRN DM puts the devices sharing the same GSI pin to a GSI sharing group. The devices in the same group should be assigned together to the current VM, otherwise, none of them should be assigned to the current VM. A device that violates the rule will be rejected to be passed-through. The checking logic is implemented in Device Model and not in scope of hypervisor. The platform GSI information is in devicemodel/hw/pci/platform_gsi_info.c for limited platform (currently, only APL MRB). For other platforms, the platform specific GSI information should be added to activate the checking of GSI sharing violation.

Data structures and interfaces¶

The following APIs are common APIs provided to initialize interrupt remapping for VMs:

-

int32_t

ptirq_intx_pin_remap(struct acrn_vm *vm, uint32_t virt_pin, enum intx_ctlr vpin_ctlr)¶ INTx remapping for passthrough device.

Set up the remapping of the given virtual pin for the given vm. This is the main entry for PCI/Legacy device assignment with INTx, calling from vIOAPIC or vPIC.

- Return

- 0: on success

-ENODEV:- for SOS, the entry already be held by others

- for UOS, no pre-hold mapping found.

- Preconditions

- vm != NULL

- Parameters

vm: pointer to acrn_vmvirt_pin: virtual pin number associated with the passthrough devicevpin_ctlr: INTX_CTLR_IOAPIC or INTX_CTLR_PIC

-

int32_t

ptirq_prepare_msix_remap(struct acrn_vm *vm, uint16_t virt_bdf, uint16_t phys_bdf, uint16_t entry_nr, struct ptirq_msi_info *info)¶ MSI/MSI-x remapping for passthrough device.

Main entry for PCI device assignment with MSI and MSI-X. MSI can up to 8 vectors and MSI-X can up to 1024 Vectors.

- Return

- 0: on success

-ENODEV:- for SOS, the entry already be held by others

- for UOS, no pre-hold mapping found.

- Preconditions

- vm != NULL

- Preconditions

- info != NULL

- Parameters

vm: pointer to acrn_vmvirt_bdf: virtual bdf associated with the passthrough devicephys_bdf: virtual bdf associated with the passthrough deviceentry_nr: indicate coming vectors, entry_nr = 0 means first vectorinfo: structure used for MSI/MSI-x remapping

Post-launched VM needs to pre-allocate interrupt entries during VM initialization. Post-launched VM needs to free interrupt entries during VM de-initialization. The following APIs are provided to pre-allocate/free interrupt entries for post-launched VM:

-

int32_t

ptirq_add_intx_remapping(struct acrn_vm *vm, uint32_t virt_pin, uint32_t phys_pin, bool pic_pin)¶ Add an interrupt remapping entry for INTx as pre-hold mapping.

Except sos_vm, Device Model should call this function to pre-hold ptdev intx The entry is identified by phys_pin, one entry vs. one phys_pin. Currently, one phys_pin can only be held by one pin source (vPIC or vIOAPIC).

- Return

- 0: on success

-EINVAL: invalid virt_pin value-ENODEV: failed to add the remapping entry

- Preconditions

- vm != NULL

- Parameters

vm: pointer to acrn_vmvirt_pin: virtual pin number associated with the passthrough devicephys_pin: physical pin number associated with the passthrough devicepic_pin: true for pic, false for ioapic

-

void

ptirq_remove_intx_remapping(struct acrn_vm *vm, uint32_t virt_pin, bool pic_pin)¶ Remove an interrupt remapping entry for INTx.

Deactivate & remove mapping entry of the given virt_pin for given vm.

- Return

- None

- Preconditions

- vm != NULL

- Parameters

vm: pointer to acrn_vmvirt_pin: virtual pin number associated with the passthrough devicepic_pin: true for pic, false for ioapic

-

void

ptirq_remove_msix_remapping(const struct acrn_vm *vm, uint16_t virt_bdf, uint32_t vector_count)¶ Remove interrupt remapping entry/entries for MSI/MSI-x.

Remove the mapping of given number of vectors of the given virtual BDF for the given vm.

- Return

- None

- Preconditions

- vm != NULL

- Parameters

vm: pointer to acrn_vmvirt_bdf: virtual bdf associated with the passthrough devicevector_count: number of vectors

The following APIs are provided to acknowledge a virtual interrupt.

-

void

ptirq_intx_ack(struct acrn_vm *vm, uint32_t virt_pin, enum intx_ctlr vpin_ctlr)¶ Acknowledge a virtual interrupt for passthrough device.

Acknowledge a virtual legacy interrupt for a passthrough device.

- Return

- None

- Preconditions

- vm != NULL

- Parameters

vm: pointer to acrn_vmvirt_pin: virtual pin number associated with the passthrough devicevpin_ctlr: INTX_CTLR_IOAPIC or INTX_CTLR_PIC

The following APIs are provided to handle ptdev interrupt:

-

void

ptdev_init(void)¶ Passthrough device global data structure initialization.

During the hypervisor cpu initialization stage, this function:

- init global spinlock for ptdev (on BSP)

- register SOFTIRQ_PTDEV handler (on BSP)

- init the softirq entry list for each CPU

-

void

ptirq_softirq(uint16_t pcpu_id)¶ Handler of softirq for passthrough device.

When hypervisor receive a physical interrupt from passthrough device, it will enqueue a ptirq entry and raise softirq SOFTIRQ_PTDEV. This function is the handler of the softirq, it handles the interrupt and injects the virtual into VM. The handler is registered by calling ptdev_init during hypervisor initialization.

- Parameters

pcpu_id: physical cpu id of the soft irq

-

struct ptirq_remapping_info *

ptirq_alloc_entry(struct acrn_vm *vm, uint32_t intr_type)¶ Allocate a ptirq_remapping_info entry.

Allocate a ptirq_remapping_info entry for hypervisor to store the remapping information. The total number of the entries is statically defined as CONFIG_MAX_PT_IRQ_ENTRIES. Appropriate number should be configured on different platforms.

- Parameters

vm: acrn_vm that the entry allocated for.intr_type: interrupt type: PTDEV_INTR_MSI or PTDEV_INTR_INTX

- Return Value

NULL: whenthenumber of entries allocated is CONFIG_MAX_PT_IRQ_ENTRIES!NULL: whenthenumber of entries allocated is less than CONFIG_MAX_PT_IRQ_ENTRIES

-

void

ptirq_release_entry(struct ptirq_remapping_info *entry)¶ Release a ptirq_remapping_info entry.

- Parameters

entry: the ptirq_remapping_info entry to release.

-

void

ptdev_release_all_entries(const struct acrn_vm *vm)¶ Deactivate and release all ptirq entries for a VM.

This function deactivates and releases all ptirq entries for a VM. The function should only be called after the VM is already down.

- Preconditions

- VM is already down

- Parameters

vm: acrn_vm on which the ptirq entries will be released

-

int32_t

ptirq_activate_entry(struct ptirq_remapping_info *entry, uint32_t phys_irq)¶ Activate a irq for the associated passthrough device.

After activating the ptirq entry, the physical interrupt irq of passthrough device will be handled by the handler ptirq_interrupt_handler.

- Parameters

entry: the ptirq_remapping_info entry that will be associated with the physical irq.phys_irq: physical interrupt irq for the entry

- Return Value

success: whenreturnvalue >=0success: whenreturn<0

-

void

ptirq_deactivate_entry(struct ptirq_remapping_info *entry)¶ De-activate a irq for the associated passthrough device.

- Parameters

entry: the ptirq_remapping_info entry that will be de-activated.

-

struct ptirq_remapping_info *

ptirq_dequeue_softirq(uint16_t pcpu_id)¶ Dequeue an entry from per cpu ptdev softirq queue.

Dequeue an entry from the ptdev softirq queue on the specific physical cpu.

- Parameters

pcpu_id: physical cpu id

- Return Value

NULL: whenwhenthe queue is empty!NULL: whenthereis available ptirq_remapping_info entry in the queue

-

uint32_t

ptirq_get_intr_data(const struct acrn_vm *target_vm, uint64_t *buffer, uint32_t buffer_cnt)¶ Get the interrupt information and store to the buffer provided.

- Parameters

target_vm: the VM to get the interrupt information.buffer: the buffer to interrupt information stored to.buffer_cnt: the size of the buffer.

- Return Value

the: actual size the buffer filled with the interrupt information