GVT-g Enabling and Porting Guide

Introduction

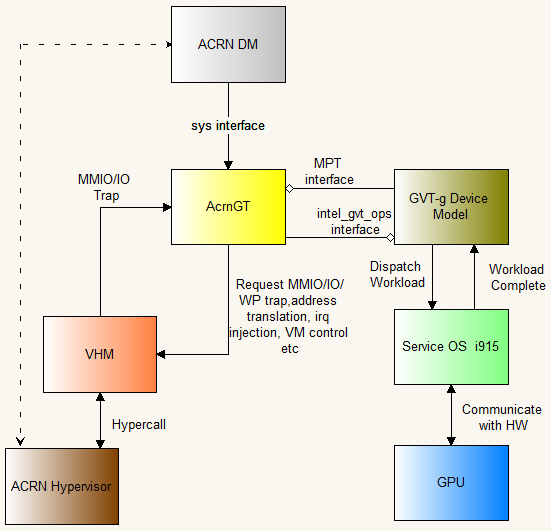

GVT-g is Intel’s open-source GPU virtualization solution and has been upstreamed to the Linux kernel. Its implementation over KVM is named KVMGT, over Xen is named XenGT, and over ACRN is named AcrnGT. GVT-g can export multiple virtual GPU (vGPU) instances, and each virtual machine (VM) can be assigned one vGPU instance. The guest OS graphics driver needs only minor modifications to drive the vGPU adapter in a VM. Every vGPU instance can use the full acceleration capability of the hardware GPU for media, 3D rendering, and display.

AcrnGT is the glue layer between the ACRN hypervisor and the GVT-g core device model. It acts as the agent for hypervisor-related services, and it is the only layer that must be rewritten when porting GVT-g to another hypervisor.

For simplicity, the rest of this document uses the term GVT to refer to the core device model component of GVT-g, which corresponds to gvt.ko when built as a module.

Purpose of This Document

This document explains the relationship between components of GVT-g in the ACRN hypervisor, shows how to enable GVT-g on ACRN, and guides developers porting GVT-g to work on other hypervisors.

This document describes:

- the overall components of GVT-g
- the interaction interface of each component
- the core interaction scenarios

APIs of each component interface can be found in the ACRN GVT-g APIs documentation.

Overall Components

The GVT-g solution for the ACRN hypervisor has two key modules, AcrnGT and GVT, and relies on the VHM module for hypervisor services.

- AcrnGT module

Compiled from drivers/gpu/drm/i915/gvt/acrn_gvt.c, the AcrnGT module acts as a glue layer between the ACRN hypervisor and the interface to the ACRN-DM in user space. AcrnGT is the agent of hypervisor-related services, including I/O trap requests, IRQ injection, address translation, and VM control. It also listens for ACRN hypervisor notifications in acrngt_emulation_thread and informs the GVT module of I/O traps. It calls into the GVT module’s GVT-g intel_gvt_ops Interface to invoke the Device Model’s routines, and receives requests from the GVT module through the AcrnGT Mediated Passthrough (MPT) Interface.

User-space programs, such as ACRN-DM, communicate with AcrnGT through the AcrnGT sysfs Interface by writing to the sysfs node /sys/kernel/gvt/control/create_gvt_instance. This is the only module that must be rewritten when porting to another embedded device hypervisor.

- GVT module

This Device Model service is the central part of all the GVT-g components. It receives workloads from each vGPU, shadows them, and dispatches them to the Service VM’s i915 module, which delivers them to the real hardware. It also emulates the virtual display for each VM.

- VHM module

This kernel module requires an interrupt (vIRQ) number and exposes APIs to external kernel modules, such as GVT-g and the virtIO BE service running in kernel space. It exposes a char device node to user space and interacts only with the DM. The DM routes I/O requests and responses between other modules and the VHM module through the char device. The DM may use the VHM for hypervisor services (including remote memory mapping), and the VHM may service some requests directly, such as mapping remote memory or invoking a hypercall. The VHM also sends I/O responses to user-space modules, which are notified through vIRQ injection.

Figure 313 GVT-g components and interfaces
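To make the layering concrete, the following C sketch models the two directions of calls as tables of function pointers: GVT reaches the hypervisor only through an MPT-style table, and the glue layer enters GVT through an intel_gvt_ops-style table. This is a simplified user-space model, not kernel code; every struct and function in it (toy_mpt_ops, toy_gvt_ops, toy_acrn_attach_vgpu, and so on) is hypothetical and only loosely mirrors the real interfaces.

```c
#include <assert.h>
#include <stdio.h>

/* Hypothetical, simplified model of the GVT-g layering; not kernel code. */

struct vgpu {
	int id;
	int attached;   /* set by the hypervisor glue layer */
};

/* Services GVT needs from a hypervisor (loosely mirrors the MPT interface). */
struct toy_mpt_ops {
	const char *name;
	int (*attach_vgpu)(struct vgpu *vgpu);
	int (*inject_msi)(struct vgpu *vgpu, unsigned int data);
};

/* Entry points GVT exposes to the glue layer (loosely mirrors intel_gvt_ops). */
struct toy_gvt_ops {
	int (*vgpu_create)(struct vgpu *vgpu);
};

/* The only hypervisor binding GVT knows about; swapped when porting. */
static const struct toy_mpt_ops *mpt;

static int toy_gvt_vgpu_create(struct vgpu *vgpu)
{
	/* Per-vGPU resource setup (MMIO, GTT, display, ...) is elided here. */
	return mpt->attach_vgpu(vgpu);   /* call back into the glue layer */
}

static const struct toy_gvt_ops gvt_ops = {
	.vgpu_create = toy_gvt_vgpu_create,
};

/* An ACRN-flavored glue layer; another hypervisor would provide its own. */
static int toy_acrn_attach_vgpu(struct vgpu *vgpu)
{
	vgpu->attached = 1;   /* stands in for ioreq setup and thread start */
	return 0;
}

static int toy_acrn_inject_msi(struct vgpu *vgpu, unsigned int data)
{
	printf("vgpu %d: inject MSI 0x%x\n", vgpu->id, data);
	return 0;
}

static const struct toy_mpt_ops toy_acrn_mpt = {
	.name = "acrn",
	.attach_vgpu = toy_acrn_attach_vgpu,
	.inject_msi = toy_acrn_inject_msi,
};

/* Glue-layer registration: bind GVT to this hypervisor backend. */
static void toy_register_backend(const struct toy_mpt_ops *ops)
{
	assert(ops != NULL);
	mpt = ops;
}
```

Under this model, porting to another hypervisor means registering a different toy_mpt_ops table; toy_gvt_vgpu_create does not change, which is the property that confines porting work to the glue layer.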

Core Scenario Interaction Sequences

vGPU Creation Scenario

In this scenario, AcrnGT receives a create request from ACRN-DM and calls GVT’s GVT-g intel_gvt_ops Interface to inform GVT of the vGPU creation. This interface sets up all vGPU resources, such as MMIO, GMA, PVINFO, GTT, DISPLAY, and Execlists, and calls back into the AcrnGT module through the AcrnGT Mediated Passthrough (MPT) Interface attach_vgpu. The AcrnGT module then sets up an I/O request server and asks, through VHM’s APIs, to trap the PCI configuration space of the vGPU (virtual device 0:2:0). Finally, the AcrnGT module launches an AcrnGT emulation thread to listen for I/O trap notifications from HVM and the ACRN hypervisor.
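The creation sequence can be sketched as a chain of calls that records each step. The function names and step labels below are hypothetical; the model only illustrates the order described above: GVT allocates the vGPU resources, then calls back into the glue layer, which creates the I/O request server, traps the PCI configuration space of 0:2:0, and starts the emulation thread.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical model of the vGPU creation sequence; every name is illustrative. */

#define MAX_STEPS 16

struct create_trace {
	const char *steps[MAX_STEPS];
	int n;
};

static void trace(struct create_trace *t, const char *step)
{
	assert(t->n < MAX_STEPS);
	t->steps[t->n++] = step;
}

/* Glue layer: called back by GVT through the MPT attach_vgpu hook. */
static int glue_attach_vgpu(struct create_trace *t)
{
	trace(t, "ioreq_server_create");
	trace(t, "trap_pci_cfg 0:2:0");       /* virtual device 0:2:0 */
	trace(t, "start_emulation_thread");
	return 0;
}

/* GVT: allocate per-vGPU resources, then hand over to the glue layer. */
static int gvt_vgpu_create(struct create_trace *t)
{
	static const char *const resources[] = {
		"MMIO", "GMA", "PVINFO", "GTT", "DISPLAY", "Execlists",
	};

	for (size_t i = 0; i < sizeof(resources) / sizeof(resources[0]); i++)
		trace(t, resources[i]);
	return glue_attach_vgpu(t);
}

/* Glue layer: entry point for a create request coming from ACRN-DM. */
static int glue_handle_create_request(struct create_trace *t)
{
	memset(t, 0, sizeof(*t));
	return gvt_vgpu_create(t);
}
```

The point of the trace is the ordering: the trap on the PCI configuration space and the emulation thread exist only after GVT has finished allocating resources, so the first trapped access already finds a fully initialized vGPU.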

vGPU Destroy Scenario

In this scenario, AcrnGT receives a destroy request from ACRN-DM. It calls GVT’s GVT-g intel_gvt_ops Interface to inform GVT of the vGPU destroy request, and all vGPU resources are cleaned up.
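A minimal sketch of the teardown, assuming it mirrors creation in reverse: the glue layer stops the emulation thread and releases its I/O request client before GVT frees the vGPU resources, so no trap can arrive for a half-destroyed vGPU. The ordering and all names here are assumptions for illustration; the text above does not specify them.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical per-vGPU state touched by creation and destruction. */
struct vgpu_state {
	bool resources_allocated;   /* MMIO, GTT, display, ... owned by GVT */
	bool ioreq_client;          /* owned by the glue layer */
	bool emulation_thread;      /* owned by the glue layer */
};

/* Glue layer teardown (assumed order): stop listening for traps
 * before the I/O request client goes away. */
static void glue_detach_vgpu(struct vgpu_state *s)
{
	s->emulation_thread = false;
	s->ioreq_client = false;
}

/* GVT: detach from the glue layer first so no new traps arrive,
 * then release the per-vGPU resources (assumed order). */
static void gvt_vgpu_destroy(struct vgpu_state *s)
{
	glue_detach_vgpu(s);
	s->resources_allocated = false;
}

/* Glue layer: entry point for a destroy request from ACRN-DM. */
static void glue_handle_destroy_request(struct vgpu_state *s)
{
	assert(s->resources_allocated);   /* only a live vGPU can be destroyed */
	gvt_vgpu_destroy(s);
}
```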

vGPU PCI Configuration Space Write Scenario

ACRN traps the vGPU’s PCI configuration space write and notifies AcrnGT’s acrngt_emulation_thread, which calls acrngt_hvm_pio_emulation to handle all I/O trap notifications. This routine calls the GVT’s GVT-g intel_gvt_ops Interface emulate_cfg_write to emulate the vGPU PCI configuration space write:

- For a BAR0 (GTTMMIO) write, turn the GTTMMIO trap on or off according to the written value.
- For a BAR1 (Aperture) write, map or unmap the vGPU’s aperture to its corresponding part of the host’s aperture.
- Otherwise, write to the vGPU’s virtual PCI configuration space.
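The following C sketch models the three cases of the write dispatch for aligned 4-byte writes. It is a simplified, hypothetical model rather than the GVT implementation. It assumes the Intel GPU's 64-bit BARs, so the GTTMMIO BAR starts at configuration offset 0x10 and the aperture BAR (BAR1 in the list above) at 0x18; it treats an all-ones write (BAR size probing) as "off" and any other value as "on"; and it ignores other inputs such as the PCI command register and BAR size readback.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define CFG_SIZE          256
#define OFF_BAR_GTTMMIO   0x10          /* low dword of the 64-bit GTTMMIO BAR */
#define OFF_BAR_APERTURE  0x18          /* low dword of the 64-bit aperture BAR */
#define BAR_SIZE_PROBE    0xffffffffu   /* all-ones write used for BAR sizing */

/* Hypothetical vGPU view: virtual config space plus trap/mapping state. */
struct vgpu_cfg {
	uint8_t space[CFG_SIZE];
	bool gttmmio_trapped;
	bool aperture_mapped;
};

static void store32(struct vgpu_cfg *v, unsigned int off, uint32_t val)
{
	memcpy(&v->space[off], &val, sizeof(val));   /* host byte order */
}

/* Simplified model of emulate_cfg_write for aligned 4-byte writes. */
static void cfg_write32(struct vgpu_cfg *v, unsigned int off, uint32_t val)
{
	assert(off + 4 <= CFG_SIZE && off % 4 == 0);

	switch (off) {
	case OFF_BAR_GTTMMIO:
		/* Size probing turns the trap off; a real address turns it on. */
		v->gttmmio_trapped = (val != BAR_SIZE_PROBE);
		break;
	case OFF_BAR_APERTURE:
		/* Map or unmap the vGPU's slice of the host aperture. */
		v->aperture_mapped = (val != BAR_SIZE_PROBE);
		break;
	default:
		break;   /* plain virtual config space update */
	}
	/* BAR size readback is not modeled: the raw value is stored. */
	store32(v, off, val);
}
```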

PCI Configuration Space Read Scenario

The call sequence is almost the same as in the write scenario above, except that it calls the GVT’s GVT-g intel_gvt_ops Interface emulate_cfg_read to emulate the vGPU PCI config space read.
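Because a read does not change any trap or mapping state, the read path can be pictured as copying bytes out of the vGPU's virtual configuration space. A minimal sketch, assuming naturally aligned 1-, 2-, or 4-byte accesses; cfg_read is a hypothetical name.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define CFG_SIZE 256

/* Simplified model of a config space read: the data comes from the
 * vGPU's virtual configuration space, not from the physical device. */
static int cfg_read(const uint8_t vcfg[CFG_SIZE], unsigned int off,
		    void *buf, unsigned int bytes)
{
	if (bytes != 1 && bytes != 2 && bytes != 4)
		return -1;                      /* unsupported access width */
	if (off > CFG_SIZE - bytes || off % bytes != 0)
		return -1;                      /* out of range or misaligned */
	memcpy(buf, &vcfg[off], bytes);
	return 0;
}
```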

GGTT Read/Write Scenario

The GGTT trap is set up in the PCI configuration space write scenario above.

MMIO Read/Write Scenario

The MMIO trap is set up in the PCI configuration space write scenario above.
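Both the GGTT and MMIO traps come from the single BAR0 (GTTMMIO) trap, so the emulation code has to decide which part of the BAR an access hit. The sketch below assumes a Gen8-style layout, a 16 MiB GTTMMIO BAR with registers in the lower half and 64-bit GGTT entries starting at 8 MiB; treat these constants and the classify_bar0_access name as assumptions rather than values taken from this guide.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed layout: registers at the start of the GTTMMIO BAR, GGTT
 * entries from GTT_START_OFFSET onward, all behind one BAR0 trap. */
#define GTTMMIO_BAR_SIZE  (16u << 20)   /* 16 MiB (assumption) */
#define GTT_START_OFFSET  (8u << 20)    /* 8 MiB (assumption) */
#define GGTT_ENTRY_SIZE   8u            /* 64-bit PTEs (assumption) */

enum trap_kind { TRAP_MMIO_REG, TRAP_GGTT_ENTRY, TRAP_OUTSIDE };

/* Decide which emulation path a trapped BAR0 access takes. On success,
 * *index receives the register offset or the GGTT entry index. */
static enum trap_kind classify_bar0_access(uint64_t bar_base, uint64_t gpa,
					   uint64_t *index)
{
	if (gpa < bar_base || gpa - bar_base >= GTTMMIO_BAR_SIZE)
		return TRAP_OUTSIDE;

	uint64_t off = gpa - bar_base;
	if (off < GTT_START_OFFSET) {
		*index = off;                                /* MMIO register */
		return TRAP_MMIO_REG;
	}
	*index = (off - GTT_START_OFFSET) / GGTT_ENTRY_SIZE;  /* GGTT entry */
	return TRAP_GGTT_ENTRY;
}
```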

PPGTT Write-Protection Page Set/Unset Scenario

A PPGTT write-protection page is set by calling acrn_ioreq_add_iorange with the range type REQ_WP, which traps writes to the page into the device model while allowing reads without a trap.

A PPGTT write-protection page is unset by calling acrn_ioreq_del_range.
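The set/unset semantics can be modeled with a small table of write-protected guest page frames: only writes to a tracked page trap, and reads always pass through. The table and the wp_add_page, wp_del_page, and wp_access_traps functions are hypothetical stand-ins for the VHM ranges registered through acrn_ioreq_add_iorange and removed through acrn_ioreq_del_range; they do not reproduce those functions' signatures. A 4 KiB page size is assumed.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define WP_PAGE_SIZE 4096u   /* assumed guest page size */
#define MAX_WP       64

/* Hypothetical stand-in for the VHM's REQ_WP ranges. */
struct wp_table {
	uint64_t gfn[MAX_WP];   /* guest frame numbers of protected pages */
	int n;
};

/* "Set": start trapping writes to the page containing gpa. */
static int wp_add_page(struct wp_table *t, uint64_t gpa)
{
	if (t->n == MAX_WP)
		return -1;
	t->gfn[t->n++] = gpa / WP_PAGE_SIZE;
	return 0;
}

/* "Unset": stop trapping writes to the page containing gpa. */
static int wp_del_page(struct wp_table *t, uint64_t gpa)
{
	uint64_t gfn = gpa / WP_PAGE_SIZE;

	for (int i = 0; i < t->n; i++) {
		if (t->gfn[i] == gfn) {
			t->gfn[i] = t->gfn[--t->n];   /* swap-remove */
			return 0;
		}
	}
	return -1;   /* page was not protected */
}

/* Writes to a protected page trap to the device model; reads never do. */
static bool wp_access_traps(const struct wp_table *t, uint64_t gpa, bool is_write)
{
	if (!is_write)
		return false;
	for (int i = 0; i < t->n; i++)
		if (t->gfn[i] == gpa / WP_PAGE_SIZE)
			return true;
	return false;
}
```

Letting reads through untrapped keeps the common case (the GPU and guest reading page-table entries) fast, while writes, which change the guest page tables that GVT must shadow, still reach the device model.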

PPGTT Write-Protection Page Write

In the VHM module, the ioreq for a PPGTT WP page is the same as for an MMIO trap, so a write to a PPGTT write-protected page is also trapped into the routine intel_vgpu_emulate_mmio_write().
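The routing can be sketched as a dispatch on the ioreq type in the emulation thread, where MMIO and WP requests share one write handler. The ioreq layout and all names are hypothetical; the counters stand in for calls to GVT handlers such as intel_vgpu_emulate_mmio_write().

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical ioreq as seen by the glue layer's emulation thread. */
enum ioreq_type { IOREQ_PCICFG, IOREQ_MMIO, IOREQ_WP };

struct ioreq {
	enum ioreq_type type;
	bool is_write;
	uint64_t addr;
	uint64_t value;
};

/* Counters standing in for the GVT handlers that would be invoked. */
struct handler_hits {
	int cfg;
	int mmio_read;
	int mmio_write;   /* stands in for intel_vgpu_emulate_mmio_write() */
};

static void dispatch_ioreq(const struct ioreq *req, struct handler_hits *h)
{
	switch (req->type) {
	case IOREQ_PCICFG:
		h->cfg++;
		break;
	case IOREQ_MMIO:
	case IOREQ_WP:
		/* WP faults share the MMIO path. Reads of WP pages are not
		 * trapped, so IOREQ_WP requests arrive here only as writes. */
		if (req->is_write)
			h->mmio_write++;
		else
			h->mmio_read++;
		break;
	}
}
```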

API Details

APIs of each component interface can be found in the ACRN GVT-g APIs documentation.