Enable S5 in ACRN

Introduction

S5 is the ACPI sleep state in which the system is shut down (although some power may still be supplied to certain devices). In this document, S5 refers to the function that shuts down the User VMs, the Service VM, the hypervisor, and the hardware. In most cases, directly powering off a computer system is not advisable because it can damage some components, cause corruption, and put the system in an unknown or unstable state. On ACRN, the User VMs must be shut down before the Service VM is powered off. A graceful system shutdown such as the ACRN S5 function is especially important where User VMs are used in industrial control or other environments with high safety requirements.

S5 Architecture

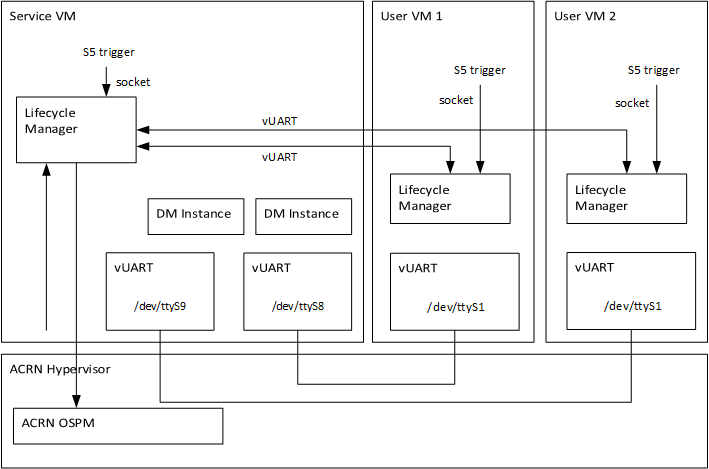

ACRN provides a mechanism to trigger the S5 state transition throughout the system. It uses a vUART channel to communicate between the Service VM and User VMs. The diagram below shows the overall architecture:

Figure 18 S5 Overall Architecture¶

vUART channel:

The User VM’s serial port device (/dev/ttySn) is emulated in the hypervisor. The channel from the Service VM to the User VM:

![Service VM:/dev/ttyS8 -> ACRN hypervisor -> User VM:/dev/ttyS1](../_images/graphviz-05e883f8b8e2ee49ea6e8c5059b34078354b1ece.png)

Lifecycle Manager Overview

As part of the S5 reference design, a Lifecycle Manager daemon (life_mngr in Linux, life_mngr_win.exe in Windows) runs in the Service VM and User VMs to implement S5. You can use the s5_trigger_linux.py or s5_trigger_win.py script to initiate a system S5 in the Service VM or User VMs. The Lifecycle Manager in the Service VM and in each User VM waits for the system S5 request on a local socket.

Initiate a System S5 from within a User VM (e.g., HMI)

As shown in Figure 18, a request to the Service VM initiates the shutdown flow. This request could come from a User VM, most likely the human machine interface (HMI) running Windows or Linux. When a human operator initiates the flow by running s5_trigger_linux.py or s5_trigger_win.py, the Lifecycle Manager (life_mngr) running in that User VM sends the system S5 request via the vUART to the Lifecycle Manager in the Service VM, which in turn acknowledges the request. The Lifecycle Manager in the Service VM then sends a poweroff_cmd request to each User VM. When the Lifecycle Manager in a User VM receives the poweroff_cmd request, it sends ack_poweroff to the Service VM and then shuts down the User VM. If a User VM is not ready to shut down, it can ignore the poweroff_cmd request.
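The User VM side of this exchange can be modeled in a few lines. The following is a minimal sketch, not the life_mngr implementation: the message names poweroff_cmd and ack_poweroff come from the text above, while the function name and the ready_to_shutdown flag are hypothetical.

```python
from typing import Optional, Tuple

POWEROFF_CMD = "poweroff_cmd"   # message name taken from the text above
ACK_POWEROFF = "ack_poweroff"   # message name taken from the text above


def handle_service_vm_message(
    message: str, ready_to_shutdown: bool
) -> Tuple[Optional[str], bool]:
    """Model how a User VM reacts to a message received over the vUART.

    Returns (reply, should_shutdown). A reply of None means the message
    is ignored. This function is a hypothetical illustration only.
    """
    if message != POWEROFF_CMD:
        # This sketch only models the poweroff_cmd request.
        return None, False
    if not ready_to_shutdown:
        # A User VM that is not ready may ignore the request.
        return None, False
    # Acknowledge first, then shut down.
    return ACK_POWEROFF, True
```

For example, `handle_service_vm_message("poweroff_cmd", True)` yields the acknowledgment and a shutdown decision, while the same call with `False` yields no reply.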

Note

The User VM must be authorized before it can request a system S5. Authorization is configured with the ALLOW_TRIGGER_S5 option in the Lifecycle Manager service configuration /etc/life_mngr/life_mngr.conf in the Service VM. Only one User VM in the system can be configured to request a shutdown. If this option is configured incorrectly, the Lifecycle Manager of the Service VM rejects the system S5 request from the User VM and records the following error message in its log, /var/log/life_mngr.log: The user VM is not allowed to trigger system shutdown.
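The authorization rule in this note amounts to comparing the device a request arrived on with the single device named in ALLOW_TRIGGER_S5. The sketch below illustrates that comparison under stated assumptions: the function name and its return shape are hypothetical, and only the option name and the error text are taken from the note.

```python
from typing import Tuple

# Error text as quoted in the note above.
REJECT_MESSAGE = "The user VM is not allowed to trigger system shutdown"


def check_s5_request(requesting_dev: str, allow_trigger_s5: str) -> Tuple[bool, str]:
    """Decide whether a system S5 request should be accepted.

    requesting_dev:   serial device the request arrived on, e.g. "/dev/ttyS8"
    allow_trigger_s5: value of ALLOW_TRIGGER_S5 in the Service VM configuration
    Returns (accepted, log_message). Hypothetical helper for illustration.
    """
    allowed = allow_trigger_s5.strip()
    if allowed and requesting_dev == allowed:
        return True, ""
    # An empty, missing, or mismatched option leads to rejection.
    return False, REJECT_MESSAGE
```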

Initiate a System S5 within the Service VM

In the Service VM, run s5_trigger_linux.py to trigger the system S5 flow. The Lifecycle Manager in the Service VM then sends a poweroff_cmd request to the Lifecycle Manager in each User VM through the vUART channel. When a User VM receives this request, it sends an ack_poweroff to the Lifecycle Manager in the Service VM. The Service VM checks whether the User VMs shut down successfully, and decides when to shut itself down.
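One way to picture the final step is a polling loop that waits until every User VM reports that it has stopped. This is a sketch under assumptions, not the Service VM's actual logic: the timeout, the polling interval, the state string "stopped", and the get_state callback are all hypothetical.

```python
import time
from typing import Callable, Iterable, List


def wait_for_user_vms(
    vm_names: Iterable[str],
    get_state: Callable[[str], str],
    timeout_s: float = 30.0,
    poll_interval_s: float = 0.5,
) -> List[str]:
    """Poll until every VM reports "stopped" or the timeout expires.

    get_state is a caller-supplied function that returns the current
    state of a VM by name. Returns the names of VMs still running;
    an empty list means the Service VM could proceed to shut down.
    """
    deadline = time.monotonic() + timeout_s
    pending = list(vm_names)
    while True:
        pending = [name for name in pending if get_state(name) != "stopped"]
        if not pending or time.monotonic() >= deadline:
            return pending
        time.sleep(poll_interval_s)
```

How to handle VMs that are still running at the deadline is a policy decision that this sketch leaves to the caller.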

Note

The Service VM is always allowed to trigger system S5 by default.

Enable S5

Configure communication vUARTs for the Service VM and User VMs:

Add these lines in the hypervisor scenario XML file manually:

Example:

    /* VM0 */
    <vm_type>SERVICE_VM</vm_type>
    ...
    <legacy_vuart id="1">
      <type>VUART_LEGACY_PIO</type>
      <base>CONFIG_COM_BASE</base>
      <irq>0</irq>
      <target_vm_id>1</target_vm_id>
      <target_uart_id>1</target_uart_id>
    </legacy_vuart>
    <legacy_vuart id="2">
      <type>VUART_LEGACY_PIO</type>
      <base>CONFIG_COM_BASE</base>
      <irq>0</irq>
      <target_vm_id>2</target_vm_id>
      <target_uart_id>2</target_uart_id>
    </legacy_vuart>
    ...
    /* VM1 */
    <vm_type>POST_STD_VM</vm_type>
    ...
    <legacy_vuart id="1">
      <type>VUART_LEGACY_PIO</type>
      <base>COM2_BASE</base>
      <irq>COM2_IRQ</irq>
      <target_vm_id>0</target_vm_id>
      <target_uart_id>1</target_uart_id>
    </legacy_vuart>
    ...
    /* VM2 */
    <vm_type>POST_STD_VM</vm_type>
    ...
    <legacy_vuart id="1">
      <type>VUART_LEGACY_PIO</type>
      <base>INVALID_COM_BASE</base>
      <irq>COM2_IRQ</irq>
      <target_vm_id>0</target_vm_id>
      <target_uart_id>2</target_uart_id>
    </legacy_vuart>
    <legacy_vuart id="2">
      <type>VUART_LEGACY_PIO</type>
      <base>COM2_BASE</base>
      <irq>COM2_IRQ</irq>
      <target_vm_id>0</target_vm_id>
      <target_uart_id>2</target_uart_id>
    </legacy_vuart>
    ...
    /* VM3 */
    ...
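In the example above, each valid vUART points at a peer vUART that points back at it (for instance, vUART 2 of VM0 targets vUART 2 of VM2, and vice versa). The following sketch checks that pairing on a simplified in-memory model. The pairing rule is inferred from the example rather than stated by the documentation, and the VUart type and find_unpaired function are hypothetical names.

```python
from typing import Dict, List, NamedTuple

INVALID_BASE = "INVALID_COM_BASE"


class VUart(NamedTuple):
    vuart_id: int        # the id attribute of <legacy_vuart>
    base: str            # content of <base>
    target_vm_id: int    # content of <target_vm_id>
    target_uart_id: int  # content of <target_uart_id>


def find_unpaired(vms: Dict[int, List[VUart]]) -> List[str]:
    """Report valid vUARTs whose target does not point back at them."""
    problems = []
    for vm_id, vuarts in vms.items():
        for v in vuarts:
            if v.base == INVALID_BASE:
                continue  # vUARTs configured as invalid are skipped
            peers = vms.get(v.target_vm_id, [])
            paired = any(
                p.vuart_id == v.target_uart_id
                and p.base != INVALID_BASE
                and p.target_vm_id == vm_id
                and p.target_uart_id == v.vuart_id
                for p in peers
            )
            if not paired:
                problems.append(f"VM{vm_id} vUART {v.vuart_id} has no matching peer")
    return problems


# The example configuration from this section, transcribed by hand.
EXAMPLE = {
    0: [VUart(1, "CONFIG_COM_BASE", 1, 1), VUart(2, "CONFIG_COM_BASE", 2, 2)],
    1: [VUart(1, "COM2_BASE", 0, 1)],
    2: [VUart(1, "INVALID_COM_BASE", 0, 2), VUart(2, "COM2_BASE", 0, 2)],
}
```

Calling `find_unpaired(EXAMPLE)` returns an empty list for the configuration shown in this section.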

Note

These vUARTs are emulated in the hypervisor and are exposed to the VM as /dev/ttySn. For the User VM with the lowest VM ID, the communication vUART id should be 1. For other User VMs, the vUART with id 1 should be configured as invalid, and the communication vUART id should be 2 or higher.

Build the Lifecycle Manager daemon, life_mngr:

    cd acrn-hypervisor
    make life_mngr

For the Service VM, LaaG VM, and RT-Linux VM, run the Lifecycle Manager daemon:

Copy life_mngr.conf, s5_trigger_linux.py, life_mngr, and life_mngr.service into the Service VM and User VMs. These commands assume you have a network connection between the development computer and target. You can also use a USB stick to transfer files.

    scp build/misc/services/s5_trigger_linux.py root@<target board address>:~/
    scp build/misc/services/life_mngr root@<target board address>:/usr/bin/
    scp build/misc/services/life_mngr.conf root@<target board address>:/etc/life_mngr/
    scp build/misc/services/life_mngr.service root@<target board address>:/lib/systemd/system/

Copy user_vm_shutdown.py into the Service VM.

    scp misc/services/life_mngr/user_vm_shutdown.py root@<target board address>:~/
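When several targets need the same files, the copy commands above can be generated rather than typed. This is an illustrative sketch only: the source and destination paths are the ones listed above, while the function name, the is_service_vm flag, and the example address are hypothetical.

```python
from typing import List

# (source path, destination directory) pairs from the commands above.
COMMON_FILES = [
    ("build/misc/services/s5_trigger_linux.py", "~/"),
    ("build/misc/services/life_mngr", "/usr/bin/"),
    ("build/misc/services/life_mngr.conf", "/etc/life_mngr/"),
    ("build/misc/services/life_mngr.service", "/lib/systemd/system/"),
]
SERVICE_VM_ONLY = ("misc/services/life_mngr/user_vm_shutdown.py", "~/")


def build_scp_commands(
    target: str, user: str = "root", is_service_vm: bool = False
) -> List[List[str]]:
    """Return scp argument lists for one target; nothing is executed here."""
    files = list(COMMON_FILES)
    if is_service_vm:
        files.append(SERVICE_VM_ONLY)
    return [["scp", src, f"{user}@{target}:{dst}"] for src, dst in files]
```

Each returned list can be passed to `subprocess.run(cmd, check=True)` from the acrn-hypervisor directory. Printing the lists first gives a dry run.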

Edit options in /etc/life_mngr/life_mngr.conf in the Service VM.

    VM_TYPE=service_vm
    VM_NAME=Service_VM
    DEV_NAME=tty:/dev/ttyS8,/dev/ttyS9,/dev/ttyS10,/dev/ttyS11,/dev/ttyS12,/dev/ttyS13,/dev/ttyS14
    ALLOW_TRIGGER_S5=/dev/ttySn

Note

The mapping between the User VM ID and the communication serial device name (/dev/ttySn) is in /etc/serial.conf. If /dev/ttySn is configured in ALLOW_TRIGGER_S5, the corresponding User VM is allowed to trigger a system shutdown.

Edit options in /etc/life_mngr/life_mngr.conf in the User VM.

    VM_TYPE=user_vm
    VM_NAME=<User VM name>
    DEV_NAME=tty:/dev/ttyS1
    #ALLOW_TRIGGER_S5=/dev/ttySn

Note

The User VM name in this configuration file should be consistent with the VM name in the launch script for the Post-launched User VM or the VM name which is specified in the hypervisor scenario XML for the Pre-launched User VM.
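The configuration files shown above are plain KEY=VALUE text, which makes them easy to inspect from a script. The sketch below parses that format under two assumptions drawn from the examples rather than from a format specification: lines starting with # are comments, and DEV_NAME is the prefix tty: followed by a comma-separated device list. Both function names are hypothetical.

```python
from typing import Dict, List


def parse_life_mngr_conf(text: str) -> Dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    options: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            options[key.strip()] = value.strip()
    return options


def serial_devices(options: Dict[str, str]) -> List[str]:
    """Return the device paths listed in DEV_NAME, or [] if malformed."""
    prefix = "tty:"
    dev_name = options.get("DEV_NAME", "")
    if not dev_name.startswith(prefix):
        return []
    return [dev for dev in dev_name[len(prefix):].split(",") if dev]
```

Applied to the User VM example, the parser yields three options (the commented-out ALLOW_TRIGGER_S5 line is skipped) and a single device, /dev/ttyS1.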

Use the following commands to enable life_mngr.service and restart the Service VM and User VMs.

    sudo chmod +x /usr/bin/life_mngr
    sudo systemctl enable life_mngr.service
    sudo reboot

Note

For the Pre-launched User VM, restart the Lifecycle Manager service manually after the Lifecycle Manager in the Service VM starts.

For the WaaG VM, run the Lifecycle Manager daemon:

Build the life_mngr_win.exe application and s5_trigger_win.py:

    cd acrn-hypervisor
    make life_mngr

Note

If there is no x86_64-w64-mingw32-gcc compiler, you can run sudo apt install gcc-mingw-w64-x86-64 on Ubuntu to install it.

Copy s5_trigger_win.py into the WaaG VM.

Set up a Windows environment:

Download Python 3 from https://www.python.org/downloads/release/python-3810/ and install “Python 3.8.10” in WaaG.

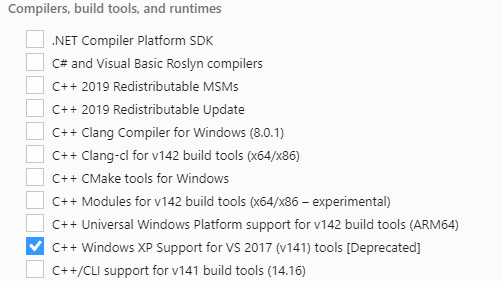

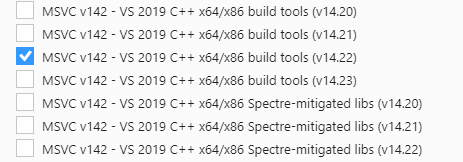

If the Lifecycle Manager for WaaG will be built in Windows, download the Visual Studio 2019 tool from https://visualstudio.microsoft.com/downloads/, and choose the two options in the below screenshots to install “Microsoft Visual C++ Redistributable for Visual Studio 2015, 2017 and 2019 (x86 or X64)” in WaaG:

Note

If the Lifecycle Manager for WaaG is built in Linux, the Visual Studio 2019 tool is not needed for WaaG.

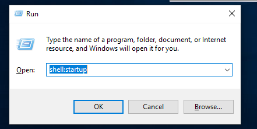

In WaaG, press Windows + R, enter shell:startup, click OK, and then copy the life_mngr_win.exe application into the directory that opens.

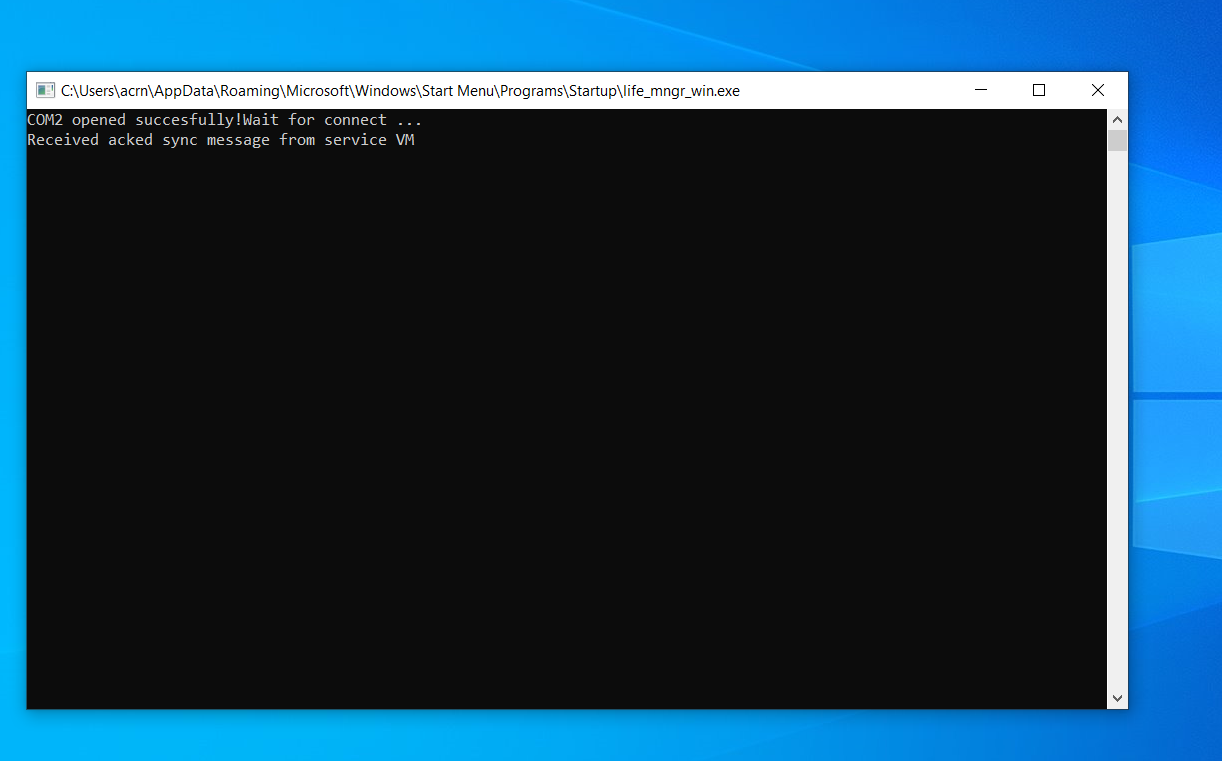

Restart the WaaG VM. The COM2 window will automatically open after reboot.

If s5_trigger_linux.py is run in the Service VM, the Service VM shuts down (transitioning to the S5 state) and sends a poweroff request to shut down the User VMs.

Note

S5 state is not automatically triggered by a Service VM shutdown; you need to run s5_trigger_linux.py in the Service VM.

How to Test

As described in Enable vUART Configurations, two vUARTs are defined for a User VM in pre-defined ACRN scenarios: vUART0/ttyS0 for the console and vUART1/ttyS1 for S5-related communication (as shown in S5 Overall Architecture).

For Yocto Project (Poky) or Ubuntu rootfs, the serial-getty service for ttyS1 conflicts with the S5-related communication use of vUART1. To eliminate the conflict, prevent that service from being started either automatically or manually by masking it with this command:

systemctl mask serial-getty@ttyS1.service

Refer to the Enable S5 section to set up the S5 environment for the User VMs.

Note

Use the systemctl status life_mngr.service command to ensure the service is working on the LaaG or RT-Linux:

    * life_mngr.service - ACRN lifemngr daemon
       Loaded: loaded (/lib/systemd/system/life_mngr.service; enabled; vendor preset: enabled)
       Active: active (running) since Thu 2021-11-11 12:43:53 CST; 36s ago
     Main PID: 197397 (life_mngr)
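The line to look for in the status output above is the one beginning with Active:. A test script could check it as in the following sketch, which only inspects text that the caller has already captured. The function name is hypothetical.

```python
def life_mngr_is_running(status_output: str) -> bool:
    """Return True if the captured status text reports active (running)."""
    for line in status_output.splitlines():
        stripped = line.strip()
        if stripped.startswith("Active:"):
            return stripped.startswith("Active: active (running)")
    # No Active: line at all is treated as not running.
    return False
```

The text can be captured with, for example, `subprocess.run(["systemctl", "status", "life_mngr.service"], capture_output=True, text=True).stdout`.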

Note

For WaaG, if you executed the bcdedit /set debug on command when you set up WaaG, close windbg by using the bcdedit /set debug off command, because windbg occupies COM2.

Run user_vm_shutdown.py in the Service VM to shut down the User VMs:

    sudo python3 ~/user_vm_shutdown.py <User VM name>

Note

The User VM name is configured in the life_mngr.conf of the User VM. For the WaaG VM, the User VM name is “windows”.

Run the acrnctl list command to check the User VM status.

    sudo acrnctl list

Output example:

<User VM name> stopped
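To check the result from a script, the output can be split into name and state pairs. This sketch assumes one VM per line with the name first and the state second, as in the output example above, and it assumes VM names contain no whitespace. The function name is hypothetical.

```python
from typing import Dict


def parse_acrnctl_list(output: str) -> Dict[str, str]:
    """Map each VM name to its reported state."""
    states: Dict[str, str] = {}
    for line in output.splitlines():
        fields = line.split()
        if len(fields) >= 2:
            states[fields[0]] = fields[1]
    return states
```

A test can then assert that `parse_acrnctl_list(output).get(vm_name) == "stopped"` after running user_vm_shutdown.py.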

System Shutdown

A coordinating script, s5_trigger_linux.py or s5_trigger_win.py, works in conjunction with the Lifecycle Manager in each VM to perform a graceful system shutdown.

In the hybrid_rt scenario, an operator can use the script to send a system shutdown request via /var/lib/life_mngr/monitor.sock to a User VM that is configured to be allowed to trigger system S5. This system shutdown request is forwarded to the Service VM. The Service VM sends a poweroff request to each User VM (Pre-launched VM or Post-launched VM) through vUART. The Lifecycle Manager in the User VM receives the poweroff request, sends an ack message, and proceeds to shut itself down accordingly.
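For illustration, sending a request over the local socket might look like the sketch below. It is not the s5_trigger_linux.py source. The socket path comes from the text above; the request string, the stream socket type, and the expectation of a reply are assumptions.

```python
import socket

MONITOR_SOCK = "/var/lib/life_mngr/monitor.sock"  # path from the text above
SHUTDOWN_REQUEST = "req_sys_shutdown"  # hypothetical request string


def send_request(sock: socket.socket, message: str, bufsize: int = 1024) -> str:
    """Send one message on a connected socket and return one reply."""
    sock.sendall(message.encode("ascii"))
    return sock.recv(bufsize).decode("ascii", errors="replace")


def request_system_shutdown(path: str = MONITOR_SOCK) -> str:
    """Connect to the Lifecycle Manager socket and request a system S5.

    Assumes a Unix domain stream socket; adjust if the daemon differs.
    """
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.connect(path)
        return send_request(sock, SHUTDOWN_REQUEST)
```

Keeping send_request separate from the connection code allows the exchange to be exercised with `socket.socketpair()` without a running daemon.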

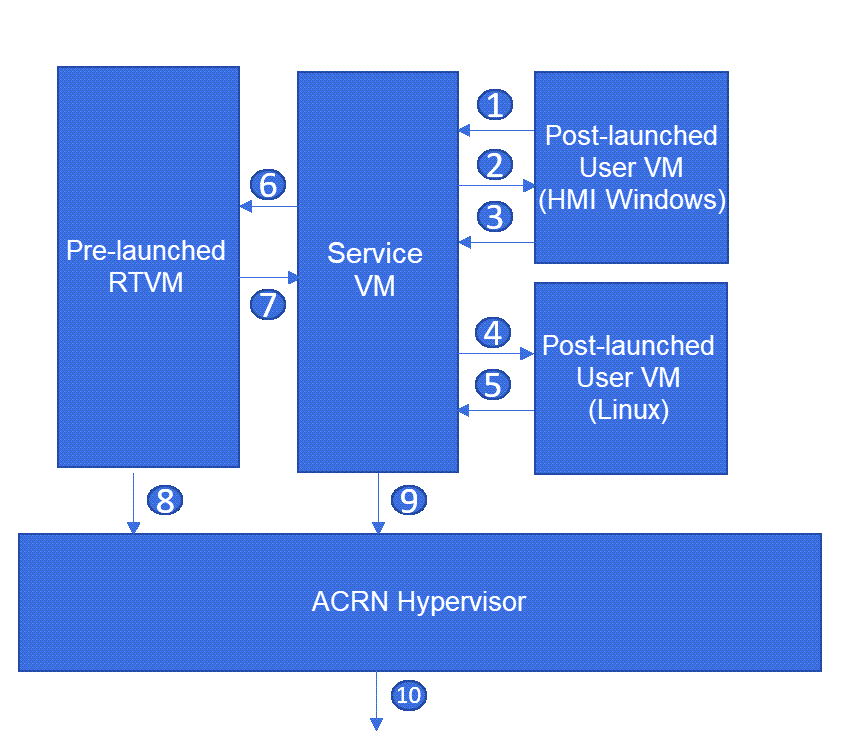

Figure 19 Graceful System Shutdown Flow

1. The HMI in the Windows User VM uses s5_trigger_win.py to send a system shutdown request to the Lifecycle Manager. The Lifecycle Manager forwards this request to the Lifecycle Manager in the Service VM.

2. The Lifecycle Manager in the Service VM responds with an ack message and sends a poweroff_cmd request to the Windows User VM.

3. After receiving the poweroff_cmd request, the Lifecycle Manager in the Windows User VM responds with an ack message, then shuts down the VM.

4. The Lifecycle Manager in the Service VM sends a poweroff_cmd request to the Linux User VM.

5. After receiving the poweroff_cmd request, the Lifecycle Manager in the Linux User VM responds with an ack message, then shuts down the VM.

6. The Lifecycle Manager in the Service VM sends a poweroff_cmd request to the Pre-launched RTVM.

7. After receiving the poweroff_cmd request, the Lifecycle Manager in the Pre-launched RTVM responds with an ack message.

8. The Lifecycle Manager in the Pre-launched RTVM shuts down the VM using ACPI PM registers.

9. After receiving the ack message from all User VMs, the Lifecycle Manager in the Service VM shuts down the VM.

10. The hypervisor shuts down the system after all VMs have shut down.
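The ordering of this flow can be written down as a small trace generator, which is useful when comparing logs against the expected sequence. This is a simplified model under assumptions: it handles the User VMs strictly one after another, and the function name and event strings are hypothetical.

```python
from typing import List


def shutdown_trace(initiator: str, user_vms: List[str]) -> List[str]:
    """Return the expected event order for a User-VM-initiated system S5."""
    events = [
        f"{initiator} -> Service VM: system S5 request",
        f"Service VM -> {initiator}: ack",
    ]
    # The initiating VM is handled first, then the remaining User VMs.
    ordered = [initiator] + [vm for vm in user_vms if vm != initiator]
    for vm in ordered:
        events.append(f"Service VM -> {vm}: poweroff_cmd")
        events.append(f"{vm} -> Service VM: ack_poweroff")
        events.append(f"{vm}: shut down")
    # The Service VM goes down only after every User VM has acknowledged.
    events.append("Service VM: shut down")
    events.append("hypervisor: power off")
    return events
```

For the three User VMs in Figure 19, the trace contains 13 events and ends with the hypervisor powering off the system.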

Note

If one or more virtual functions (VFs) of an SR-IOV device, e.g., the GPU on an Alder Lake platform, are assigned to User VMs, take extra steps to disable all VFs before the Service VM shuts down. Otherwise, the Service VM may fail to shut down because some VFs are still enabled.