CPU Virtualization¶

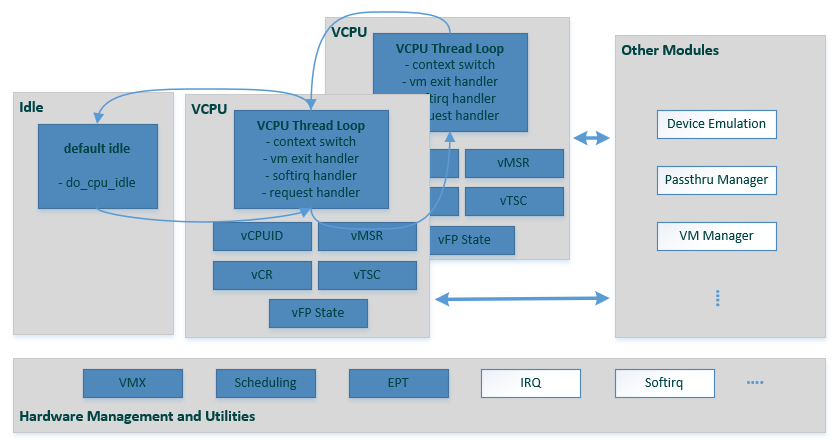

Figure 124 ACRN Hypervisor CPU Virtualization Components

The following sections discuss the major modules (shown in blue) in the CPU virtualization overview shown in Figure 124.

Based on Intel VT-x virtualization technology, ACRN emulates a virtual CPU (vCPU) with the following methods:

core partition: one vCPU is dedicated to and associated with one physical CPU (pCPU). This makes much of the hardware register emulation a simple pass-through and provides good isolation for physical interrupts and guest execution. (See Static CPU Partitioning for more information.)

core sharing (to be added): two or more vCPUs share one physical CPU (pCPU). Switching between vCPUs requires a more complicated context switch, but this method provides flexible sharing of computing resources for vCPU tasks with low performance demands. (See Flexible CPU Sharing for more information.)

simple schedule: a well-designed scheduler framework allows ACRN to adopt different scheduling policies, for example noop and round-robin:

noop scheduler - only two thread loops are maintained for a CPU: the vCPU thread and the default idle thread. A CPU runs most of the time in the vCPU thread to emulate a guest CPU, switching between VMX root mode and non-root mode. A CPU schedules out to the default idle thread when an operation needs it to stay in VMX root mode, such as when waiting for an I/O request from DM or when ready to be destroyed.

round-robin scheduler (to be added) - allows more vCPU thread loops to run on a CPU. A CPU switches among different vCPU threads and the default idle thread when the corresponding timeslice runs out or when scheduling out is necessary, such as when waiting for an I/O request. A vCPU can also yield itself, for example when it executes a “PAUSE” instruction.

Static CPU Partitioning¶

CPU partitioning is a policy for mapping a virtual CPU (vCPU) to a physical CPU (pCPU). To enable this, the ACRN hypervisor can configure the “noop scheduler” as the scheduling policy for this physical CPU.

ACRN then forces a fixed 1:1 mapping between a vCPU and this physical CPU when creating a vCPU for the guest operating system. This makes the vCPU management code much simpler.

cpu_affinity_bitmap in vm config decides which physical CPU a vCPU in a VM is affined to, and thereby finalizes the fixed mapping. When launching a user VM, choose pCPUs from the VM’s cpu_affinity_bitmap that are not used by any other VMs.
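The selection rule above can be sketched with plain bitmap arithmetic. This is a minimal illustration, not ACRN code: the function name, the `in_use` bookkeeping bitmap, and the 64-pCPU limit are all assumptions made for the example.

```c
#include <assert.h>
#include <stdint.h>

#define DEMO_MAX_PCPU_NUM 64U /* hypothetical platform limit for this sketch */

/*
 * Pick `wanted` pCPUs for a new VM under static partitioning.
 * affinity: the VM's cpu_affinity_bitmap (bit n set => pCPU n is allowed)
 * in_use:   pCPUs already owned by other VMs (hypothetical bookkeeping)
 * Returns a bitmap of the chosen pCPUs, or 0 if not enough pCPUs are free.
 */
static uint64_t demo_pick_free_pcpus(uint64_t affinity, uint64_t in_use,
                                     uint32_t wanted)
{
    uint64_t candidates = affinity & ~in_use; /* allowed and not taken */
    uint64_t chosen = 0U;
    uint32_t id;

    assert(wanted > 0U);
    for (id = 0U; (id < DEMO_MAX_PCPU_NUM) && (wanted > 0U); id++) {
        uint64_t bit = (uint64_t)1 << id;

        if ((candidates & bit) != 0U) {
            chosen |= bit;
            wanted--;
        }
    }
    return (wanted == 0U) ? chosen : 0U;
}
```

For example, with an affinity bitmap covering pCPU 1 to 3 and pCPU 1 already owned by another VM, a request for two pCPUs yields pCPU 2 and 3; a request that cannot be satisfied returns 0 so the caller can refuse to launch the VM.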

Flexible CPU Sharing¶

To enable CPU sharing, the ACRN hypervisor can configure the IORR (IO sensitive Round-Robin) or BVT (Borrowed Virtual Time) scheduler policy.

cpu_affinity_bitmap in vm config decides which physical CPU two or more vCPUs from different VMs share. A pCPU can be shared among the Service OS and any user VMs as long as local APIC passthrough is not enabled in that user VM.

See Enable CPU Sharing in ACRN for more information.

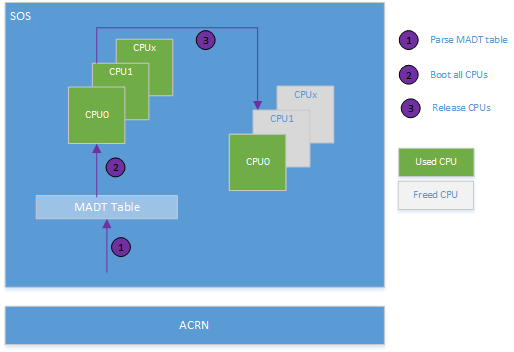

CPU management in the Service VM under static CPU partitioning¶

With ACRN, all ACPI table entries are passed through to the Service VM, including the Multiple APIC Description Table (MADT). The Service VM sees all physical CPUs by parsing the MADT when the Service VM kernel boots. All physical CPUs are initially assigned to the Service VM by creating the same number of virtual CPUs.

When the Service VM boot is finished, it releases the physical CPUs intended for UOS use.

Here is an example flow of CPU allocation on a multi-core platform.

CPU management in the Service VM under flexible CPU sharing¶

Because all Service VM CPUs can be shared with different UOSs, ACRN can still pass the MADT through to the Service VM, and the Service VM is still able to see all physical CPUs.

Under CPU sharing, however, the Service VM does not need to offline or release the physical CPUs intended for UOS use.

CPU management in UOS¶

cpu_affinity_bitmap in vm config defines the set of pCPUs that a User VM is allowed to run on. acrn-dm can choose to launch on only a subset of the pCPUs or on all pCPUs listed in cpu_affinity_bitmap, but it can’t assign any pCPU that is not included in it.

CPU assignment management in HV¶

The physical CPU assignment is pre-defined by cpu_affinity_bitmap in vm config, while post-launched VMs can be launched on pCPUs that are a subset of it.

Currently, the ACRN hypervisor does not support virtual CPU migration to different physical CPUs. This means the vCPU-to-pCPU mapping cannot change without first calling offline_vcpu.

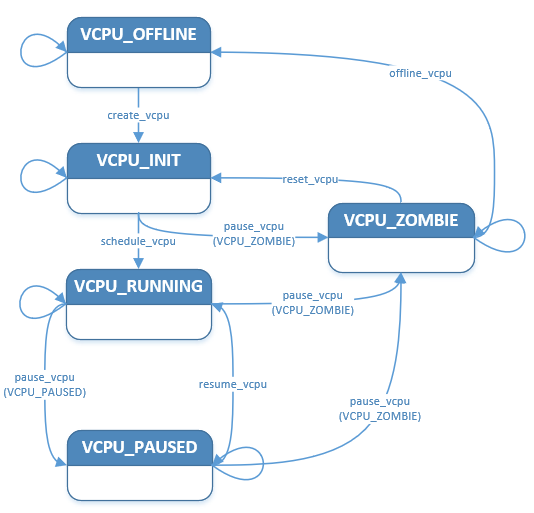

vCPU Lifecycle¶

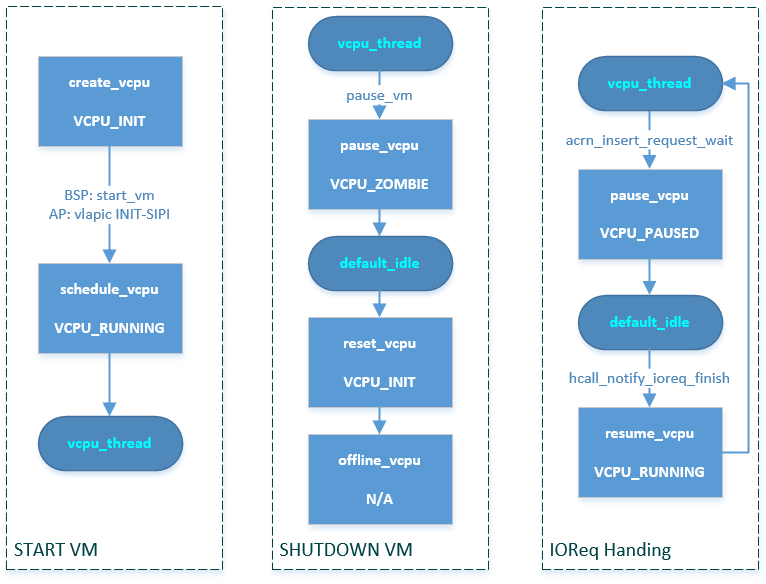

A vCPU lifecycle is shown in Figure 126 below, where the major states are:

- VCPU_INIT: vCPU is in an initialized state, and its vCPU thread is not ready to run on its associated CPU

- VCPU_RUNNING: vCPU is running, and its vCPU thread is ready (in the queue) or running on its associated CPU

- VCPU_PAUSED: vCPU is paused, and its vCPU thread is not running on its associated CPU

- VCPU_ZOMBIE: vCPU is being taken offline, and its vCPU thread is not running on its associated CPU

- VCPU_OFFLINE: vCPU is offline

Figure 126 ACRN vCPU state transitions
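As a rough illustration of how the lifecycle functions in this section move a vCPU between these states, here is a minimal sketch. It models only the state field; the `demo_` names, the enum values, and the failure condition of `demo_resume` (vCPU not paused) are assumptions for the example, and the legal transitions are the ones defined by Figure 126, which this sketch does not enforce.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

enum vcpu_state {
    VCPU_INIT,
    VCPU_RUNNING,
    VCPU_PAUSED,
    VCPU_ZOMBIE,
    VCPU_OFFLINE
};

/* Simplified stand-in for struct acrn_vcpu: only the lifecycle state. */
struct demo_vcpu {
    enum vcpu_state state;
};

/* create_vcpu sets the initial state to VCPU_INIT. */
static void demo_create(struct demo_vcpu *vcpu) { vcpu->state = VCPU_INIT; }

/* launch_vcpu makes the vCPU thread runnable. */
static void demo_launch(struct demo_vcpu *vcpu) { vcpu->state = VCPU_RUNNING; }

/* pause_vcpu accepts only VCPU_PAUSED or VCPU_ZOMBIE as the new state. */
static void demo_pause(struct demo_vcpu *vcpu, enum vcpu_state new_state)
{
    assert((new_state == VCPU_PAUSED) || (new_state == VCPU_ZOMBIE));
    vcpu->state = new_state;
}

/* resume_vcpu returns 0 on success, -1 on failure (condition is assumed). */
static int32_t demo_resume(struct demo_vcpu *vcpu)
{
    if (vcpu->state != VCPU_PAUSED) {
        return -1;
    }
    vcpu->state = VCPU_RUNNING;
    return 0;
}

/* reset_vcpu puts the vCPU back into VCPU_INIT. */
static void demo_reset(struct demo_vcpu *vcpu) { vcpu->state = VCPU_INIT; }

/* offline_vcpu requires vcpu != NULL and ends in VCPU_OFFLINE. */
static void demo_offline(struct demo_vcpu *vcpu)
{
    assert(vcpu != NULL);
    vcpu->state = VCPU_OFFLINE;
}
```

A shutdown sequence in this model is `demo_pause(&v, VCPU_ZOMBIE)`, then `demo_reset(&v)`, then `demo_offline(&v)`.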

Following functions are used to drive the state machine of the vCPU lifecycle:

- int32_t create_vcpu(uint16_t pcpu_id, struct acrn_vm *vm, struct acrn_vcpu **rtn_vcpu_handle)

  Create a vCPU for the target VM.

  Creates/allocates a vCPU instance and initializes its vcpu_id, vpid, vmcs, vlapic, etc. It sets the initial vCPU state to VCPU_INIT.

  - Parameters
    - pcpu_id: the created vCPU will run on this pCPU
    - vm: pointer to the VM data structure; this vCPU will be owned by this VM
    - rtn_vcpu_handle: pointer to the created vCPU
  - Return Value
    - 0: vCPU created successfully; other values indicate failure.

- void pause_vcpu(struct acrn_vcpu *vcpu, enum vcpu_state new_state)

  Pause the vCPU and set a new state.

  Changes a vCPU state to VCPU_PAUSED or VCPU_ZOMBIE, and makes a reschedule request for it.

  - Return
    - None
  - Parameters
    - vcpu: pointer to the vCPU data structure
    - new_state: the state to set for the vCPU

- int32_t resume_vcpu(struct acrn_vcpu *vcpu)

  Resume the vCPU.

  Changes a vCPU state to VCPU_RUNNING, and makes a reschedule request for it.

  - Return
    - 0 on success, -1 on failure.
  - Parameters
    - vcpu: pointer to the vCPU data structure

- void reset_vcpu(struct acrn_vcpu *vcpu, enum reset_mode mode)

  Reset vCPU state and values.

  Resets all fields in a vCPU instance; the vCPU state is reset to VCPU_INIT.

  - Return
    - None
  - Parameters
    - vcpu: pointer to the vCPU data structure
    - mode: the reset mode

- void offline_vcpu(struct acrn_vcpu *vcpu)

  Unmap the vCPU from its pCPU and free its vlapic.

  Unmaps the vCPU from its pCPU, frees its vlapic, and sets the vCPU state to offline.

  - Preconditions
    - vcpu != NULL
  - Return
    - None
  - Parameters
    - vcpu: pointer to the vCPU data structure

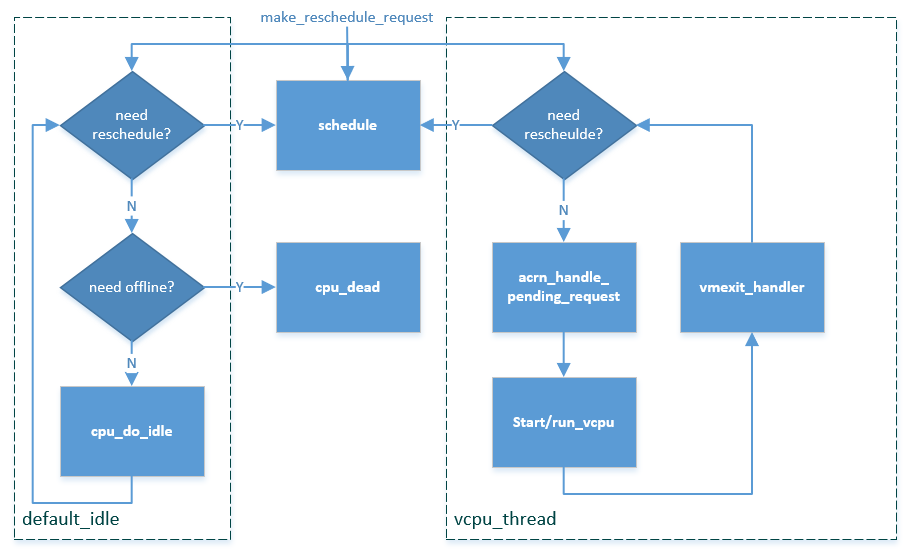

vCPU Scheduling under static CPU partitioning¶

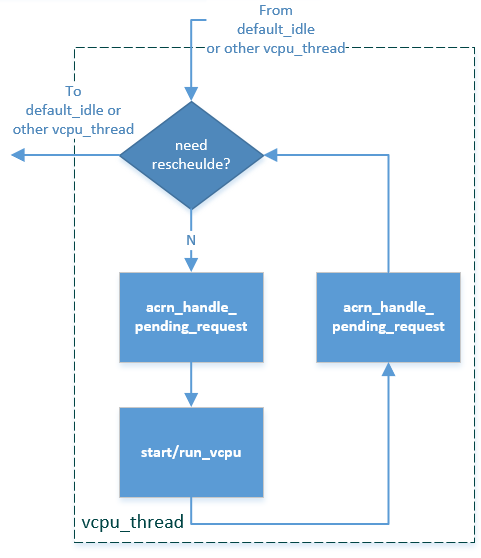

Figure 127 ACRN vCPU scheduling flow under static CPU partitioning

As described in the CPU virtualization overview, under static CPU partitioning ACRN implements a simple scheduling mechanism based on two threads: vcpu_thread and default_idle. A vCPU in the VCPU_RUNNING state always runs in a vcpu_thread loop, while a vCPU in the VCPU_PAUSED or VCPU_ZOMBIE state runs in the default_idle loop. The detailed behaviors of the vcpu_thread and default_idle threads are illustrated in Figure 127:

- The vcpu_thread loop handles VM exits and pending requests around the VM entry/exit. It also checks for a reschedule request and schedules out to default_idle if necessary. See vCPU Thread for more details of vcpu_thread.

- The default_idle loop simply does do_cpu_idle while also checking for need-offline and reschedule requests. If a CPU is marked as need-offline, it goes to cpu_dead. If a reschedule request is made for this CPU, it schedules out to vcpu_thread if necessary.

- The function make_reschedule_request drives the thread switch between vcpu_thread and default_idle.

Some example scenario flows are shown here:

Figure 128 ACRN vCPU scheduling scenarios

- When starting a VM: after a vCPU is created, the BSP calls launch_vcpu through start_vm and an AP calls launch_vcpu through vlapic INIT-SIPI emulation. Finally, this vCPU runs in a vcpu_thread loop.

- When shutting down a VM: the pause_vm function call makes a vCPU running in vcpu_thread schedule out to default_idle. The following reset_vcpu and offline_vcpu calls de-initialize and then offline this vCPU instance.

- During IOReq handling: after an IOReq is sent to DM for emulation, a vCPU running in vcpu_thread schedules out to default_idle through acrn_insert_request_wait->pause_vcpu. After DM completes the emulation for this IOReq, it calls hcall_notify_ioreq_finish->resume_vcpu, which makes the vCPU schedule back to vcpu_thread to continue its guest execution.

vCPU Scheduling under flexible CPU sharing¶

To be added.

vCPU Thread¶

The vCPU thread flow is a loop as shown and described below:

Figure 129 ACRN vCPU thread

1. Check whether a reschedule request requires vcpu_thread to schedule out to default_idle or to another vcpu_thread. If so, schedule out accordingly.

2. Handle pending requests by calling acrn_handle_pending_request. (See Pending Request Handlers.)

3. VM Enter by calling start/run_vcpu, then enter non-root mode to do guest execution.

4. VM Exit from start/run_vcpu when the guest triggers a VM exit reason in non-root mode.

5. Handle the VM exit based on its specific reason.

6. Loop back to step 1.
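The loop described above can be sketched as follows. This is a simulation that runs outside VMX: every `demo_` function is a stub standing in for the real routine named in its comment, and the `exits_budget` field exists only so that the stubbed exit handler eventually raises a reschedule request and the loop ends.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

struct demo_vcpu {
    bool need_reschedule;   /* set by a reschedule request */
    uint32_t pending_req;   /* bitmap of pending requests */
    uint32_t exits_handled; /* number of VM exits handled so far */
    uint32_t exits_budget;  /* stub: reschedule after this many exits */
};

/* Stub for acrn_handle_pending_request: consume all pending bits. */
static void demo_handle_pending_request(struct demo_vcpu *vcpu)
{
    vcpu->pending_req = 0U;
}

/* Stub for start/run_vcpu: pretend the guest ran and then exited. */
static uint32_t demo_run_vcpu(struct demo_vcpu *vcpu)
{
    (void)vcpu;
    return 10U; /* arbitrary exit reason for this sketch */
}

/* Stub exit handler: count the exit, then request a reschedule. */
static void demo_handle_vmexit(struct demo_vcpu *vcpu, uint32_t reason)
{
    (void)reason;
    vcpu->exits_handled++;
    if (vcpu->exits_handled >= vcpu->exits_budget) {
        vcpu->need_reschedule = true;
    }
}

static void demo_vcpu_thread(struct demo_vcpu *vcpu)
{
    assert(vcpu != NULL);
    for (;;) {
        uint32_t reason;

        if (vcpu->need_reschedule) {        /* step 1 */
            vcpu->need_reschedule = false;
            return; /* stands in for scheduling out of this thread */
        }
        demo_handle_pending_request(vcpu);  /* step 2 */
        reason = demo_run_vcpu(vcpu);       /* steps 3 and 4 */
        demo_handle_vmexit(vcpu, reason);   /* step 5 */
    }                                       /* step 6: loop back */
}
```

In the real hypervisor, scheduling out does not return from the thread function; the sketch uses `return` only to keep the example terminating.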

vCPU Run Context¶

During a vCPU switch between root and non-root mode, the run context of the vCPU is saved and restored using this structure:

- struct run_context

  Registers info saved for the vCPU running context.

  Public Members

  - union run_context::cpu_regs_t cpu_regs
  - uint64_t cr0: The guest’s CR registers 0, 2, 3 and 4.
  - uint64_t cr2
  - uint64_t cr4
  - uint64_t rip
  - uint64_t rflags
  - uint64_t ia32_spec_ctrl
  - uint64_t ia32_efer
  - union cpu_regs_t (run_context::cpu_regs_t)

The vCPU handles runtime context saving in three different categories:

- Always save/restore during VM exit/entry:
  - These registers must be saved on every VM exit and restored on every VM entry
  - Registers include: general purpose registers, CR2, and IA32_SPEC_CTRL
  - Definition in vcpu->run_context
  - Get/Set them through vcpu_get/set_xxx

- On-demand cache/update during VM exit/entry:
  - These registers are used frequently. They should be cached from the VMCS on first access after a VM exit, and updated to the VMCS on VM entry if marked dirty
  - Registers include: RSP, RIP, EFER, RFLAGS, CR0, and CR4
  - Definition in vcpu->run_context
  - Get/Set them through vcpu_get/set_xxx

- Always read/write from/to VMCS:
  - These registers are rarely used. Access to them is always from/to the VMCS.
  - Registers are in the VMCS but not listed in the two cases above.
  - No definition in vcpu->run_context
  - Get/Set them through the VMCS API
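The on-demand cache/update category can be illustrated with a pair of bitmaps, one marking registers already read since the last VM exit and one marking registers that are dirty. This is a minimal sketch under assumptions: the `fake_vmread`/`fake_vmwrite` helpers replace real VMCS accesses so the code runs anywhere, and the field and function names are hypothetical rather than taken from the ACRN sources.

```c
#include <assert.h>
#include <stdint.h>

enum demo_reg { DEMO_RIP, DEMO_RSP, DEMO_REG_NUM };

/* Fake VMCS backing store so the sketch runs outside VMX. */
static uint64_t fake_vmcs[DEMO_REG_NUM];
static uint32_t fake_vmread_count;

static uint64_t fake_vmread(enum demo_reg reg)
{
    fake_vmread_count++;
    return fake_vmcs[reg];
}

static void fake_vmwrite(enum demo_reg reg, uint64_t val)
{
    fake_vmcs[reg] = val;
}

struct demo_vcpu {
    uint64_t regs[DEMO_REG_NUM];
    uint32_t reg_cached;  /* bit n: regs[n] was read since the last VM exit */
    uint32_t reg_updated; /* bit n: regs[n] is dirty, write it on VM entry */
};

/* Read from the VMCS only on the first access after a VM exit. */
static uint64_t demo_get_reg(struct demo_vcpu *vcpu, enum demo_reg reg)
{
    uint32_t bit = 1U << (uint32_t)reg;

    if (((vcpu->reg_cached | vcpu->reg_updated) & bit) == 0U) {
        vcpu->regs[reg] = fake_vmread(reg);
        vcpu->reg_cached |= bit;
    }
    return vcpu->regs[reg];
}

/* Update only the cached copy and mark it dirty. */
static void demo_set_reg(struct demo_vcpu *vcpu, enum demo_reg reg,
                         uint64_t val)
{
    vcpu->regs[reg] = val;
    vcpu->reg_updated |= 1U << (uint32_t)reg;
}

/* Before VM entry: write the dirty registers back to the VMCS. */
static void demo_before_vmentry(struct demo_vcpu *vcpu)
{
    uint32_t r;

    for (r = 0U; r < (uint32_t)DEMO_REG_NUM; r++) {
        if ((vcpu->reg_updated & (1U << r)) != 0U) {
            fake_vmwrite((enum demo_reg)r, vcpu->regs[r]);
        }
    }
    vcpu->reg_updated = 0U;
}

/* After VM exit: the guest may have changed them, so drop the cache. */
static void demo_after_vmexit(struct demo_vcpu *vcpu)
{
    vcpu->reg_cached = 0U;
}
```

The point of the design is that repeated reads of RIP within one VM exit cost a single VMCS read, and a register that is never written costs no VMCS write on entry.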

For the first two categories above, ACRN provides these get/set APIs:

- uint64_t vcpu_get_gpreg(const struct acrn_vcpu *vcpu, uint32_t reg)

  Get vCPU register value.

  Get target vCPU’s general purpose register value in run_context.

  - Return
    - the value of the register.
  - Parameters
    - vcpu: pointer to vcpu data structure
    - reg: register of the vcpu

- void vcpu_set_gpreg(struct acrn_vcpu *vcpu, uint32_t reg, uint64_t val)

  Set vCPU register value.

  Set target vCPU’s general purpose register value in run_context.

  - Return
    - None
  - Parameters
    - vcpu: pointer to vcpu data structure
    - reg: register of the vcpu
    - val: the value to set the register of the vcpu to

- uint64_t vcpu_get_rip(struct acrn_vcpu *vcpu)

  Get vCPU RIP value.

  Get & cache target vCPU’s RIP in run_context.

  - Return
    - the value of RIP.
  - Parameters
    - vcpu: pointer to vcpu data structure

- void vcpu_set_rip(struct acrn_vcpu *vcpu, uint64_t val)

  Set vCPU RIP value.

  Update target vCPU’s RIP in run_context.

  - Return
    - None
  - Parameters
    - vcpu: pointer to vcpu data structure
    - val: the value to set RIP to

- uint64_t vcpu_get_rsp(const struct acrn_vcpu *vcpu)

  Get vCPU RSP value.

  Get & cache target vCPU’s RSP in run_context.

  - Return
    - the value of RSP.
  - Parameters
    - vcpu: pointer to vcpu data structure

- void vcpu_set_rsp(struct acrn_vcpu *vcpu, uint64_t val)

  Set vCPU RSP value.

  Update target vCPU’s RSP in run_context.

  - Return
    - None
  - Parameters
    - vcpu: pointer to vcpu data structure
    - val: the value to set RSP to

- uint64_t vcpu_get_efer(struct acrn_vcpu *vcpu)

  Get vCPU EFER value.

  Get & cache target vCPU’s EFER in run_context.

  - Return
    - the value of EFER.
  - Parameters
    - vcpu: pointer to vcpu data structure

- void vcpu_set_efer(struct acrn_vcpu *vcpu, uint64_t val)

  Set vCPU EFER value.

  Update target vCPU’s EFER in run_context.

  - Return
    - None
  - Parameters
    - vcpu: pointer to vcpu data structure
    - val: the value to set EFER to

- uint64_t vcpu_get_rflags(struct acrn_vcpu *vcpu)

  Get vCPU RFLAGS value.

  Get & cache target vCPU’s RFLAGS in run_context.

  - Return
    - the value of RFLAGS.
  - Parameters
    - vcpu: pointer to vcpu data structure

- void vcpu_set_rflags(struct acrn_vcpu *vcpu, uint64_t val)

  Set vCPU RFLAGS value.

  Update target vCPU’s RFLAGS in run_context.

  - Return
    - None
  - Parameters
    - vcpu: pointer to vcpu data structure
    - val: the value to set RFLAGS to

- uint64_t vcpu_get_cr0(struct acrn_vcpu *vcpu)

  Get vCPU CR0 value.

  Get & cache target vCPU’s CR0 in run_context.

  - Return
    - the value of CR0.
  - Parameters
    - vcpu: pointer to vcpu data structure

- void vcpu_set_cr0(struct acrn_vcpu *vcpu, uint64_t val)

  Set vCPU CR0 value.

  Update target vCPU’s CR0 in run_context.

  - Parameters
    - vcpu: pointer to vcpu data structure
    - val: the value to set CR0 to

- uint64_t vcpu_get_cr2(const struct acrn_vcpu *vcpu)

  Get vCPU CR2 value.

  Get & cache target vCPU’s CR2 in run_context.

  - Return
    - the value of CR2.
  - Parameters
    - vcpu: pointer to vcpu data structure

- void vcpu_set_cr2(struct acrn_vcpu *vcpu, uint64_t val)

  Set vCPU CR2 value.

  Update target vCPU’s CR2 in run_context.

  - Parameters
    - vcpu: pointer to vcpu data structure
    - val: the value to set CR2 to

- uint64_t vcpu_get_cr4(struct acrn_vcpu *vcpu)

  Get vCPU CR4 value.

  Get & cache target vCPU’s CR4 in run_context.

  - Return
    - the value of CR4.
  - Parameters
    - vcpu: pointer to vcpu data structure

- void vcpu_set_cr4(struct acrn_vcpu *vcpu, uint64_t val)

  Set vCPU CR4 value.

  Update target vCPU’s CR4 in run_context.

  - Parameters
    - vcpu: pointer to vcpu data structure
    - val: the value to set CR4 to

VM Exit Handlers¶

ACRN implements its VM exit handlers with a static table. Except for the exit reasons listed below, a default unhandled_vmexit_handler is used that will trigger an error message and return without handling:

| VM Exit Reason | Handler | Desc |

|---|---|---|

| VMX_EXIT_REASON_EXCEPTION_OR_NMI | exception_vmexit_handler | Only trap #MC, print error then inject back to guest |

| VMX_EXIT_REASON_EXTERNAL_INTERRUPT | external_interrupt_vmexit_handler | External interrupt handler for physical interrupt happening in non-root mode |

| VMX_EXIT_REASON_TRIPLE_FAULT | triple_fault_vmexit_handler | Handle triple fault from vcpu |

| VMX_EXIT_REASON_INIT_SIGNAL | init_signal_vmexit_handler | Handle INIT signal from vcpu |

| VMX_EXIT_REASON_INTERRUPT_WINDOW | interrupt_window_vmexit_handler | To support interrupt window if VID is disabled |

| VMX_EXIT_REASON_CPUID | cpuid_vmexit_handler | Handle CPUID access from guest |

| VMX_EXIT_REASON_VMCALL | vmcall_vmexit_handler | Handle hypercall from guest |

| VMX_EXIT_REASON_CR_ACCESS | cr_access_vmexit_handler | Handle CR registers access from guest |

| VMX_EXIT_REASON_IO_INSTRUCTION | pio_instr_vmexit_handler | Emulate I/O access with range in IO_BITMAP, which may have a handler in hypervisor (such as vuart or vpic), or need to create an I/O request to DM |

| VMX_EXIT_REASON_RDMSR | rdmsr_vmexit_handler | Read MSR from guest in MSR_BITMAP |

| VMX_EXIT_REASON_WRMSR | wrmsr_vmexit_handler | Write MSR from guest in MSR_BITMAP |

| VMX_EXIT_REASON_APIC_ACCESS | apic_access_vmexit_handler | APIC access for APICv |

| VMX_EXIT_REASON_VIRTUALIZED_EOI | veoi_vmexit_handler | Trap vLAPIC EOI for specific vector with level trigger mode in vIOAPIC, required for supporting PTdev |

| VMX_EXIT_REASON_EPT_VIOLATION | ept_violation_vmexit_handler | MMIO emulation, which may have handler in hypervisor (such as vLAPIC or vIOAPIC), or need to create an I/O request to DM |

| VMX_EXIT_REASON_XSETBV | xsetbv_vmexit_handler | Set host owned XCR0 for supporting xsave |

| VMX_EXIT_REASON_APIC_WRITE | apic_write_vmexit_handler | APIC write for APICv |

Details of each vm exit reason handler are described in other sections.
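A static dispatch table of this kind might look like the sketch below. It is illustrative only: the `demo_` names, the table size, and the `handled_by` field are assumptions for the example. The two exit reason numbers used (10 for CPUID, 18 for VMCALL) and the fact that the basic exit reason occupies the low 16 bits of the exit reason field follow the Intel SDM.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define DEMO_NR_EXIT_REASONS    64U /* hypothetical table size */
#define DEMO_EXIT_REASON_CPUID  10U /* basic exit reason per Intel SDM */
#define DEMO_EXIT_REASON_VMCALL 18U /* basic exit reason per Intel SDM */

enum demo_handler_id {
    DEMO_H_NONE, DEMO_H_CPUID, DEMO_H_VMCALL, DEMO_H_UNHANDLED
};

struct demo_vcpu {
    uint32_t exit_reason;            /* raw exit reason field */
    enum demo_handler_id handled_by; /* records which handler ran */
};

typedef int32_t (*demo_vmexit_handler_t)(struct demo_vcpu *vcpu);

static int32_t demo_cpuid_handler(struct demo_vcpu *vcpu)
{
    vcpu->handled_by = DEMO_H_CPUID;
    return 0;
}

static int32_t demo_vmcall_handler(struct demo_vcpu *vcpu)
{
    vcpu->handled_by = DEMO_H_VMCALL;
    return 0;
}

/* Default handler: the real one logs an error and returns unhandled. */
static int32_t demo_unhandled_handler(struct demo_vcpu *vcpu)
{
    vcpu->handled_by = DEMO_H_UNHANDLED;
    return 0;
}

/* Entries left out of the initializer are NULL and fall to the default. */
static const demo_vmexit_handler_t demo_dispatch_table[DEMO_NR_EXIT_REASONS] = {
    [DEMO_EXIT_REASON_CPUID]  = demo_cpuid_handler,
    [DEMO_EXIT_REASON_VMCALL] = demo_vmcall_handler,
};

static int32_t demo_vmexit_dispatch(struct demo_vcpu *vcpu)
{
    uint32_t basic = vcpu->exit_reason & 0xFFFFU; /* basic exit reason */
    demo_vmexit_handler_t handler = NULL;

    if (basic < DEMO_NR_EXIT_REASONS) {
        handler = demo_dispatch_table[basic];
    }
    if (handler == NULL) {
        handler = demo_unhandled_handler;
    }
    return handler(vcpu);
}
```

Indexing a table by exit reason keeps dispatch constant-time and makes the set of handled reasons easy to audit in one place.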

Pending Request Handlers¶

ACRN uses the function acrn_handle_pending_request to handle requests before VM entry in vcpu_thread.

A bitmap in the vCPU structure lists the different requests:

    #define ACRN_REQUEST_EXCP                   0U
    #define ACRN_REQUEST_EVENT                  1U
    #define ACRN_REQUEST_EXTINT                 2U
    #define ACRN_REQUEST_NMI                    3U
    #define ACRN_REQUEST_EOI_EXIT_BITMAP_UPDATE 4U
    #define ACRN_REQUEST_EPT_FLUSH              5U
    #define ACRN_REQUEST_TRP_FAULT              6U
    #define ACRN_REQUEST_VPID_FLUSH             7U /* flush vpid tlb */

ACRN provides the function vcpu_make_request to make different requests, set the bitmap of the corresponding request, and notify the target vCPU through the IPI if necessary (when the target vCPU is not currently running). See vCPU Request for Interrupt Injection for details.

    void vcpu_make_request(struct vcpu *vcpu, uint16_t eventid)
    {
        uint16_t pcpu_id = pcpuid_from_vcpu(vcpu);

        bitmap_set_lock(eventid, &vcpu->arch_vcpu.pending_req);
        /*
         * if current hostcpu is not the target vcpu's hostcpu, we need
         * to invoke IPI to wake up target vcpu
         *
         * TODO: Here we just compare with cpuid, since cpuid currently is
         *  global under pCPU / vCPU 1:1 mapping. If later we enabled vcpu
         *  scheduling, we need change here to determine it target vcpu is
         *  VMX non-root or root mode
         */
        if (get_cpu_id() != pcpu_id) {
            send_single_ipi(pcpu_id, VECTOR_NOTIFY_VCPU);
        }
    }

The function acrn_handle_pending_request handles each request as shown below.

| Request | Desc | Request Maker | Request Handler |

|---|---|---|---|

| ACRN_REQUEST_EXCP | Request for exception injection | vcpu_inject_gp, vcpu_inject_pf, vcpu_inject_ud, vcpu_inject_ac, or vcpu_inject_ss and then queue corresponding exception by vcpu_queue_exception | vcpu_inject_hi_exception, vcpu_inject_lo_exception based on exception priority |

| ACRN_REQUEST_EVENT | Request for vlapic interrupt vector injection | vlapic_fire_lvt or vlapic_set_intr, which could be triggered by vlapic lvt, vioapic, or vmsi | vcpu_do_pending_event |

| ACRN_REQUEST_EXTINT | Request for extint vector injection | vcpu_inject_extint, triggered by vpic | vcpu_do_pending_extint |

| ACRN_REQUEST_NMI | Request for nmi injection | vcpu_inject_nmi | program VMX_ENTRY_INT_INFO_FIELD directly |

| ACRN_REQUEST_EOI_EXIT_BITMAP_UPDATE | Request to update the VEOI bitmap for level triggered vector | vlapic_reset_tmr or vlapic_set_tmr change trigger mode in RTC | vcpu_set_vmcs_eoi_exit |

| ACRN_REQUEST_EPT_FLUSH | Request for EPT flush | ept_add_mr, ept_modify_mr, ept_del_mr, or vmx_write_cr0 disable cache | invept |

| ACRN_REQUEST_TRP_FAULT | Request for handling triple fault | vcpu_queue_exception meet triple fault | fatal error |

| ACRN_REQUEST_VPID_FLUSH | Request for VPID flush | None | flush_vpid_single |

Note

Refer to the interrupt management chapter for request handling order for exception, nmi, and interrupts. For other requests such as tmr update, or EPT flush, there is no mandatory order.
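The request bitmap pattern can be sketched as a make/test-and-clear pair. This is a simplified, single-threaded illustration: the real hypervisor uses locked bit operations (such as the bitmap_set_lock call shown earlier) and real handlers such as invept, while the `demo_` names and the flush counters here are assumptions for the example. Only the two request numbers are taken from the list above.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define ACRN_REQUEST_EPT_FLUSH  5U /* value from the request list above */
#define ACRN_REQUEST_VPID_FLUSH 7U /* value from the request list above */

struct demo_vcpu {
    uint64_t pending_req;  /* one bit per request type */
    uint32_t ept_flushes;  /* counts stand in for the real handlers */
    uint32_t vpid_flushes;
};

/* Request maker side: set the bit (non-atomic in this sketch). */
static void demo_make_request(struct demo_vcpu *vcpu, uint16_t eventid)
{
    vcpu->pending_req |= (uint64_t)1 << eventid;
}

/* Return whether the bit was set, and clear it. */
static bool demo_test_and_clear(uint16_t bit, uint64_t *bitmap)
{
    uint64_t mask = (uint64_t)1 << bit;
    bool was_set = ((*bitmap & mask) != 0U);

    *bitmap &= ~mask;
    return was_set;
}

/* Handler side: runs in the vCPU thread before each VM entry. */
static void demo_handle_pending_request(struct demo_vcpu *vcpu)
{
    if (demo_test_and_clear(ACRN_REQUEST_EPT_FLUSH, &vcpu->pending_req)) {
        vcpu->ept_flushes++;  /* the real handler executes invept */
    }
    if (demo_test_and_clear(ACRN_REQUEST_VPID_FLUSH, &vcpu->pending_req)) {
        vcpu->vpid_flushes++; /* the real handler is flush_vpid_single */
    }
}
```

Because a request is a single bit, making the same request several times before the next VM entry results in one handler invocation.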

VMX Initialization¶

ACRN attempts to initialize the vCPU’s VMCS before its first launch, covering the host state, execution control, guest state, entry control, and exit control, as shown in the table below.

The table briefly shows how each field is configured. The guest state fields are critical for a guest CPU to start running in the correct CPU mode.

For a guest vCPU’s state initialization:

- If it’s the BSP, the guest state configuration is done in the SW load, which could be initialized by different objects:
  - The Service VM BSP: the hypervisor does the context initialization in different SW loads based on different boot modes
  - UOS BSP: DM context initialization through hypercall

- If it’s an AP, it always starts from real mode, and the start vector always comes from vlapic INIT-SIPI emulation.

- struct acrn_vcpu_regs

  Registers info for vcpu.

  Public Members

  - struct acrn_gp_regs gprs
  - struct acrn_descriptor_ptr gdt
  - struct acrn_descriptor_ptr idt
  - uint64_t rip
  - uint64_t cs_base
  - uint64_t cr0
  - uint64_t cr4
  - uint64_t cr3
  - uint64_t ia32_efer
  - uint64_t rflags
  - uint64_t reserved_64[4]
  - uint32_t cs_ar
  - uint32_t cs_limit
  - uint32_t reserved_32[3]
  - uint16_t cs_sel
  - uint16_t ss_sel
  - uint16_t ds_sel
  - uint16_t es_sel
  - uint16_t fs_sel
  - uint16_t gs_sel
  - uint16_t ldt_sel
  - uint16_t tr_sel
  - uint16_t reserved_16[4]

- struct acrn_gp_regs

| VMX Domain | Fields | Bits | Description |
|---|---|---|---|
| host state | CS, DS, ES, FS, GS, TR, LDTR, GDTR, IDTR | n/a | According to host |
| | MSR_IA32_PAT, MSR_IA32_EFER | n/a | According to host |
| | CR0, CR3, CR4 | n/a | According to host |
| | RIP | n/a | Set to vm_exit pointer |
| | IA32_SYSENTER_CS/ESP/EIP | n/a | Set to 0 |
| exec control | VMX_PIN_VM_EXEC_CONTROLS | 0 | Enable external-interrupt exiting |
| | | 7 | Enable posted interrupts |
| | VMX_PROC_VM_EXEC_CONTROLS | 3 | Use TSC offsetting |
| | | 21 | Use TPR shadow |
| | | 25 | Use I/O bitmaps |
| | | 28 | Use MSR bitmaps |
| | | 31 | Activate secondary controls |
| | VMX_PROC_VM_EXEC_CONTROLS2 | 0 | Virtualize APIC accesses |
| | | 1 | Enable EPT |
| | | 3 | Enable RDTSCP |
| | | 5 | Enable VPID |
| | | 7 | Unrestricted guest |
| | | 8 | APIC-register virtualization |
| | | 9 | Virtual-interrupt delivery |
| | | 20 | Enable XSAVES/XRSTORS |
| guest state | CS, DS, ES, FS, GS, TR, LDTR, GDTR, IDTR | n/a | According to vCPU mode and init_ctx |
| | RIP, RSP | n/a | According to vCPU mode and init_ctx |
| | CR0, CR3, CR4 | n/a | According to vCPU mode and init_ctx |
| | GUEST_IA32_SYSENTER_CS/ESP/EIP | n/a | Set to 0 |
| | GUEST_IA32_PAT | n/a | Set to PAT_POWER_ON_VALUE |
| entry control | VMX_ENTRY_CONTROLS | 2 | Load debug controls |
| | | 14 | Load IA32_PAT |
| | | 15 | Load IA32_EFER |
| exit control | VMX_EXIT_CONTROLS | 2 | Save debug controls |
| | | 9 | Host address space size |
| | | 15 | Acknowledge Interrupt on exit |
| | | 18 | Save IA32_PAT |
| | | 19 | Load IA32_PAT |
| | | 20 | Save IA32_EFER |
| | | 21 | Load IA32_EFER |

CPUID Virtualization¶

CPUID access from a guest causes a VM exit unconditionally when executed in VMX non-root operation. ACRN must return the emulated processor identification and feature information in the EAX, EBX, ECX, and EDX registers.

To keep things simple, ACRN returns the same values as the physical CPU for most CPUID leaves, and specially handles a few CPUID features that are APIC ID related, such as CPUID.01H.

ACRN emulates some extra CPUID features for the hypervisor as well.

There is a per-vm vcpuid_entries array, initialized during VM creation and used to cache most of the CPUID entries for each VM. During guest CPUID emulation, ACRN will read the cached value from this array, except some APIC ID-related CPUID data emulated at runtime.

This table describes details for CPUID emulation:

| CPUID | Emulation Description |
|---|---|
| 01H | |
| 0BH | |
| 0DH | |
| 07H | |
| 16H | |
| 40000000H | |
| 40000010H | |
| 0AH | |
| 0FH, 10H | |
| 12H | |
| 14H | |
| Others | |

Note

ACRN needs to take care of some CPUID values that can change at runtime, for example, XD feature in CPUID.80000001H may be cleared by MISC_ENABLE MSR.

MSR Virtualization¶

ACRN always enables MSR bitmap in VMX_PROC_VM_EXEC_CONTROLS VMX execution control field. This bitmap marks the MSRs to cause a VM exit upon guest access for both read and write. The VM exit reason for reading or writing these MSRs is respectively VMX_EXIT_REASON_RDMSR or VMX_EXIT_REASON_WRMSR and the vm exit handler is rdmsr_vmexit_handler or wrmsr_vmexit_handler.

This table shows the predefined MSRs that ACRN traps for all guests. For MSRs whose bits are not set in the MSR bitmap, guest accesses are passed through directly:

| MSR | Description | Handler |

|---|---|---|

| MSR_IA32_TSC_ADJUST | TSC adjustment of local APIC’s TSC deadline mode | emulates with vlapic |

| MSR_IA32_TSC_DEADLINE | TSC target of local APIC’s TSC deadline mode | emulates with vlapic |

| MSR_IA32_BIOS_UPDT_TRIG | BIOS update trigger | Supports microcode updates from the Service VM: the signature ID is read from the physical MSR, and a BIOS update trigger from the Service VM triggers a physical microcode update. |

| MSR_IA32_BIOS_SIGN_ID | BIOS update signature ID | “ |

| MSR_IA32_TIME_STAMP_COUNTER | Time-stamp counter | work with VMX_TSC_OFFSET_FULL to emulate virtual TSC |

| MSR_IA32_APIC_BASE | APIC base address | emulates with vlapic |

| MSR_IA32_PAT | Page-attribute table | save/restore in vCPU, write to VMX_GUEST_IA32_PAT_FULL if cr0.cd is 0 |

| MSR_IA32_PERF_CTL | Performance control | Trigger real p-state change if p-state is valid when writing, fetch physical MSR when reading |

| MSR_IA32_FEATURE_CONTROL | Feature control bits that configure operation of VMX and SMX | disabled, locked |

| MSR_IA32_MCG_CAP/STATUS | Machine-Check global control/status | emulates with vMCE |

| MSR_IA32_MISC_ENABLE | Miscellaneous feature control | readonly, except MONITOR/MWAIT enable bit |

| MSR_IA32_SGXLEPUBKEYHASH0/1/2/3 | SHA256 digest of the authorized launch enclaves | emulates with vSGX |

| MSR_IA32_SGX_SVN_STATUS | Status and SVN threshold of SGX support for ACM | readonly, emulates with vSGX |

| MSR_IA32_MTRR_CAP | Memory type range register related | Handled by MTRR emulation. |

| MSR_IA32_MTRR_DEF_TYPE | “ | “ |

| MSR_IA32_MTRR_PHYSBASE_0~9 | “ | “ |

| MSR_IA32_MTRR_FIX64K_00000 | “ | “ |

| MSR_IA32_MTRR_FIX16K_80000/A0000 | “ | “ |

| MSR_IA32_MTRR_FIX4K_C0000~F8000 | “ | “ |

| MSR_IA32_X2APIC_* | x2APIC related MSRs (offset from 0x800 to 0x900) | emulates with vlapic |

| MSR_IA32_L2_MASK_BASE~n | L2 CAT mask for CLOSn | disabled for guest access |

| MSR_IA32_L3_MASK_BASE~n | L3 CAT mask for CLOSn | disabled for guest access |

| MSR_IA32_MBA_MASK_BASE~n | MBA delay mask for CLOSn | disabled for guest access |

| MSR_IA32_VMX_BASIC~VMX_TRUE_ENTRY_CTLS | VMX related MSRs | not supported; access will inject #GP |
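To make the bitmap mechanics concrete, the sketch below marks an MSR for interception in a 4 KB VMX MSR bitmap. The layout used (read bitmaps for low and high MSRs in the first 2 KB, write bitmaps in the second 2 KB, one bit per MSR) and the two MSR addresses follow the Intel SDM. The function name and its interface are assumptions for the example, not the ACRN implementation.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define DEMO_MSR_BITMAP_SIZE  4096U
#define DEMO_READ_LOW_OFFSET  0U    /* MSRs 0x00000000 - 0x00001FFF */
#define DEMO_READ_HIGH_OFFSET 1024U /* MSRs 0xC0000000 - 0xC0001FFF */
#define DEMO_WRITE_OFFSET     2048U /* write bitmaps mirror the read ones */

#define DEMO_MSR_IA32_TSC_DEADLINE 0x000006E0U /* address per Intel SDM */
#define DEMO_MSR_IA32_EFER         0xC0000080U /* address per Intel SDM */

/*
 * Mark `msr` as intercepted for reads and/or writes.
 * Returns false if the MSR is outside the two ranges the bitmap covers;
 * accesses to such MSRs cause a VM exit regardless of the bitmap.
 */
static bool demo_msr_bitmap_trap(uint8_t *bitmap, uint32_t msr,
                                 bool trap_read, bool trap_write)
{
    uint32_t base;
    uint32_t idx;
    uint8_t bit;

    assert(bitmap != 0);
    if (msr <= 0x1FFFU) {
        base = DEMO_READ_LOW_OFFSET;
        idx = msr;
    } else if ((msr >= 0xC0000000U) && (msr <= 0xC0001FFFU)) {
        base = DEMO_READ_HIGH_OFFSET;
        idx = msr - 0xC0000000U;
    } else {
        return false;
    }

    bit = (uint8_t)(1U << (idx & 7U));
    if (trap_read) {
        bitmap[base + (idx >> 3U)] |= bit;
    }
    if (trap_write) {
        bitmap[DEMO_WRITE_OFFSET + base + (idx >> 3U)] |= bit;
    }
    return true;
}
```

An MSR whose read and write bits are both left clear is passed through to the guest, which matches the pass-through behavior described for MSRs not listed in the table.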

CR Virtualization¶

ACRN emulates mov to cr0, mov to cr4, mov to cr8, and mov from cr8 through cr_access_vmexit_handler based on VMX_EXIT_REASON_CR_ACCESS.

Note

Currently mov to cr8 and mov from cr8 are actually not valid, as the CR8-load/store exiting bits are set to 0 in VMX_PROC_VM_EXEC_CONTROLS.

A VM can mov from cr0 and mov from cr4 without triggering a VM exit. The values read are the read shadows of the corresponding registers in the VMCS. The shadows are updated by the hypervisor on CR writes.

| Operation | Handler |

|---|---|

| mov to cr0 | Based on vCPU set context API: vcpu_set_cr0 -> vmx_write_cr0 |

| mov to cr4 | Based on vCPU set context API: vcpu_set_cr4 ->vmx_write_cr4 |

| mov to cr8 | Based on vlapic tpr API: vlapic_set_cr8->vlapic_set_tpr |

| mov from cr8 | Based on vlapic tpr API: vlapic_get_cr8->vlapic_get_tpr |

For mov to cr0 and mov to cr4, ACRN sets cr0_host_mask/cr4_host_mask into VMX_CR0_MASK/VMX_CR4_MASK as the bitmask of bits that cause a VM exit.

As ACRN always enables unrestricted guest in VMX_PROC_VM_EXEC_CONTROLS2, CR0.PE and CR0.PG can be controlled by the guest.

| CR0 MASK | Value | Comments |
|---|---|---|
| cr0_always_on_mask | fixed0 & (~(CR0_PE \| CR0_PG)) | fixed0 is read from MSR_IA32_VMX_CR0_FIXED0; these bits are fixed to 1 under VMX operation |
| cr0_always_off_mask | ~fixed1 | fixed1 is read from MSR_IA32_VMX_CR0_FIXED1; the bits set in ~fixed1 are fixed to 0 under VMX operation |
| CR0_TRAP_MASK | CR0_PE \| CR0_PG \| CR0_WP \| CR0_CD \| CR0_NW | ACRN also traps the PE, PG, WP, CD, and NW bits |
| cr0_host_mask | ~(fixed0 ^ fixed1) \| CR0_TRAP_MASK | ACRN finally traps the bits under VMX root mode control plus the additionally added bits |

For mov to cr0 emulation, ACRN handles a paging mode change based on a PG bit change, and a cache mode change based on CD and NW bit changes. ACRN also takes care of illegal guest writes to invalid CR0 bits (for example, setting PG while CR4.PAE = 0 and IA32_EFER.LME = 1), which finally inject a #GP to the guest. Finally, VMX_CR0_READ_SHADOW is updated for guest reads of host controlled bits, and VMX_GUEST_CR0 is updated with the real VMX CR0 setting.
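The CR0 mask formulas and the effect of the read shadow can be worked through in a few lines. In this sketch the CR0 bit positions and the rule that a guest read returns shadow bits where the mask is set follow the Intel SDM; the `demo_` names are hypothetical, and the fixed0/fixed1 values in the usage note are illustrative inputs rather than values read from a particular CPU.

```c
#include <assert.h>
#include <stdint.h>

#define CR0_PE ((uint64_t)1 << 0)
#define CR0_WP ((uint64_t)1 << 16)
#define CR0_NW ((uint64_t)1 << 29)
#define CR0_CD ((uint64_t)1 << 30)
#define CR0_PG ((uint64_t)1 << 31)
#define CR0_TRAP_MASK (CR0_PE | CR0_PG | CR0_WP | CR0_CD | CR0_NW)

struct demo_cr0_masks {
    uint64_t always_on;  /* bits forced to 1 under VMX operation */
    uint64_t always_off; /* bits forced to 0 under VMX operation */
    uint64_t host_mask;  /* bits whose guest writes cause a VM exit */
};

/* fixed0/fixed1 stand for the MSR_IA32_VMX_CR0_FIXED0/1 values. */
static struct demo_cr0_masks demo_init_cr0_masks(uint64_t fixed0,
                                                 uint64_t fixed1)
{
    struct demo_cr0_masks m;

    /* PE and PG are excluded because unrestricted guest is enabled. */
    m.always_on = fixed0 & ~(CR0_PE | CR0_PG);
    m.always_off = ~fixed1;
    /* Bits where fixed0 == fixed1 are not flexible, so they are trapped. */
    m.host_mask = ~(fixed0 ^ fixed1) | CR0_TRAP_MASK;
    return m;
}

/*
 * Value the guest observes on "mov from cr0": host-owned bits come
 * from the read shadow, the remaining bits from the real guest CR0.
 */
static uint64_t demo_guest_read_cr0(uint64_t guest_cr0, uint64_t read_shadow,
                                    uint64_t host_mask)
{
    return (read_shadow & host_mask) | (guest_cr0 & ~host_mask);
}
```

With the illustrative inputs fixed0 = 0x80000021 and fixed1 = 0xFFFFFFFF, the always-on mask reduces to bit 5 (NE), the always-off mask covers the upper 32 bits, and the host mask is 0xFFFFFFFFE0010021.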

| CR4 MASK | Value | Comments |
|---|---|---|
| cr4_always_on_mask | fixed0 | fixed0 is read from MSR_IA32_VMX_CR4_FIXED0; these bits are fixed to 1 under VMX operation |
| cr4_always_off_mask | ~fixed1 | fixed1 is read from MSR_IA32_VMX_CR4_FIXED1; the bits set in ~fixed1 are fixed to 0 under VMX operation |
| CR4_TRAP_MASK | CR4_PSE \| CR4_PAE \| CR4_VMXE \| CR4_PCIDE \| CR4_SMEP \| CR4_SMAP \| CR4_PKE | ACRN also traps the PSE, PAE, VMXE, PCIDE, SMEP, SMAP, and PKE bits |
| cr4_host_mask | ~(fixed0 ^ fixed1) \| CR4_TRAP_MASK | ACRN finally traps the bits under VMX root mode control plus the additionally added bits |

The mov to cr4 emulation is similar to cr0 emulation noted above.

IO/MMIO Emulation¶

ACRN always enables the I/O bitmap in VMX_PROC_VM_EXEC_CONTROLS and EPT in VMX_PROC_VM_EXEC_CONTROLS2. Based on them, pio_instr_vmexit_handler and ept_violation_vmexit_handler are used for IO/MMIO emulation of an emulated device. The emulated device can reside in the hypervisor or in the DM in the Service VM. Please refer to the “I/O Emulation” section for more details.

For a device emulated in the hypervisor, ACRN provides some basic APIs to register its IO/MMIO range:

- For the Service VM, the default I/O bitmap bits are all set to 0, which means the Service VM passes through all I/O port accesses by default. Adding an I/O handler for a hypervisor emulated device requires first setting its corresponding I/O bitmap bits to 1.

- For UOS, the default I/O bitmap bits are all set to 1, which means UOS traps all I/O port accesses by default. Adding an I/O handler for a hypervisor emulated device does not require changing its I/O bitmap. If a trapped I/O port access does not fall into a hypervisor emulated device, ACRN creates an I/O request and passes it to the Service VM DM.

- For the Service VM, EPT maps the whole range of memory to the Service VM except for the ACRN hypervisor area. This means the Service VM passes through all MMIO accesses by default. Adding an MMIO handler for a hypervisor emulated device requires first removing its MMIO range from the EPT mapping.

- For UOS, EPT only maps its system RAM to the UOS, which means UOS traps all MMIO accesses by default. Adding an MMIO handler for a hypervisor emulated device does not require changing its EPT mapping. If a trapped MMIO access does not fall into a hypervisor emulated device, ACRN creates an I/O request and passes it to the Service VM DM.

| API | Description |

|---|---|

| register_pio_emulation_handler | register an I/O emulation handler for a hypervisor emulated device by specific I/O range |

| register_mmio_emulation_handler | register an MMIO emulation handler for a hypervisor emulated device by specific MMIO range |
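For the Service VM case, where the I/O bitmap starts out all zero, registering a port I/O handler implies setting the bits for the device's port range. The sketch below shows only that bitmap step. It assumes the two 4 KB VMX I/O bitmaps (A for ports 0x0000 to 0x7FFF, B for ports 0x8000 to 0xFFFF, one bit per port, per the Intel SDM) are laid out back to back in one 8 KB array; the `demo_` function names and the use of the conventional COM1 range 0x3F8 to 0x3FF in the usage note are assumptions for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define DEMO_IO_BITMAP_SIZE 8192U /* bitmap A followed by bitmap B */

/* Set the trap bit for every port in [base, base + len). */
static void demo_trap_io_range(uint8_t *io_bitmap, uint16_t base,
                               uint32_t len)
{
    uint32_t port;

    assert(io_bitmap != 0);
    for (port = base; (port < ((uint32_t)base + len)) && (port <= 0xFFFFU);
         port++) {
        io_bitmap[port >> 3U] |= (uint8_t)(1U << (port & 7U));
    }
}

/* A set bit means guest access to this port causes a VM exit. */
static bool demo_io_trapped(const uint8_t *io_bitmap, uint16_t port)
{
    return (io_bitmap[port >> 3U] & (uint8_t)(1U << (port & 7U))) != 0U;
}
```

Trapping eight ports starting at 0x3F8 sets exactly one byte of the bitmap (index 127) to 0xFF, leaving neighboring ports such as 0x3F7 and 0x400 passed through.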

Instruction Emulation¶

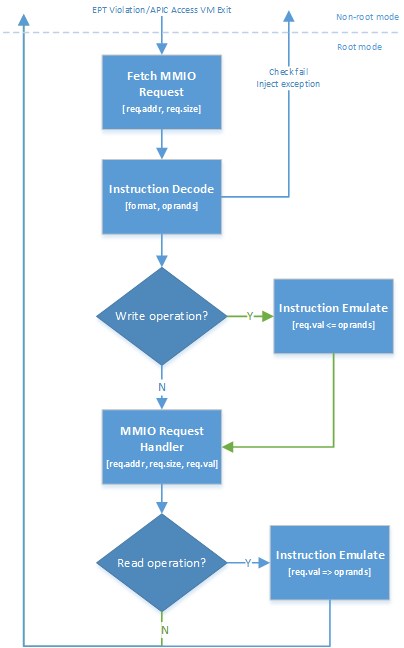

ACRN implements a simple instruction emulation infrastructure for MMIO (EPT) and APIC access emulation. When such a VM exit is triggered, the hypervisor needs to decode the instruction from RIP then attempt the corresponding emulation based on its instruction and read/write direction.

ACRN currently supports emulating instructions for mov, movx, movs, stos, test, and, or, cmp, sub and bittest without support for lock prefix. Real mode emulation is not supported.

Figure 130 Instruction Emulation Work Flow

In the handlers for EPT violation or APIC access VM exit, ACRN will:

1. Fetch the MMIO access request’s address and size.

2. Do decode_instruction for the instruction at the current RIP, with the following checks:

   - Is the instruction supported? If not, inject #UD to the guest.

   - Is the GVA of RIP, dest, and src valid? If not, inject #PF to the guest.

   - Is the stack valid? If not, inject #SS to the guest.

3. If step 2 succeeds, check the access direction. If it is a write, do emulate_instruction to fetch the MMIO request’s value from the instruction operands.

4. Execute the MMIO request handler: emulate_io for an EPT violation, or vlapic_write/read for an APIC access, based on the access direction. The handler completes the MMIO request emulation by:

   - putting req.val to req.addr for a write operation

   - getting req.val from req.addr for a read operation

5. If the access direction is read, do emulate_instruction to put the MMIO request’s value into the instruction operands.

6. Return to the guest.
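The ordering in this flow matters: for a write, the operand value is read before the handler runs, and for a read, the operand is filled in after the handler returns. The sketch below models only that ordering. It is an illustration under stated assumptions: all toy_ names are hypothetical, the instruction is assumed to be already decoded into a small structure, and a single variable stands in for the emulated device. It does not model the #PF or #SS checks.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

enum toy_exit_result { TOY_RESUME_GUEST, TOY_INJECT_UD };

/* Hypothetical, already-decoded form of a "mov" between a register and MMIO. */
struct toy_insn {
    bool supported;    /* false models an opcode the decoder rejects */
    bool is_write;     /* true: register -> MMIO; false: MMIO -> register */
    unsigned int reg;  /* index of the guest register operand */
};

struct toy_vcpu {
    uint64_t regs[16];
};

/* Hypothetical MMIO request, mirroring the req.addr and req.val fields in the text. */
struct toy_mmio_req {
    uint64_t addr;
    uint64_t val;
    bool is_write;
};

/* A single 64-bit register stands in for the emulated device. */
static uint64_t toy_device_reg;

static void toy_mmio_handler(struct toy_mmio_req *req)
{
    if (req->is_write) {
        toy_device_reg = req->val;  /* put req.val to req.addr */
    } else {
        req->val = toy_device_reg;  /* get req.val from req.addr */
    }
}

static enum toy_exit_result toy_handle_mmio_exit(struct toy_vcpu *vcpu,
                                                 const struct toy_insn *insn,
                                                 uint64_t addr)
{
    struct toy_mmio_req req = { .addr = addr, .val = 0U, .is_write = insn->is_write };

    assert(insn->reg < 16U);
    if (!insn->supported) {
        return TOY_INJECT_UD;             /* decode check failed */
    }
    if (req.is_write) {
        req.val = vcpu->regs[insn->reg];  /* write: read the operand before the handler */
    }
    toy_mmio_handler(&req);
    if (!req.is_write) {
        vcpu->regs[insn->reg] = req.val;  /* read: fill the operand after the handler */
    }
    return TOY_RESUME_GUEST;
}
```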

TSC Emulation¶

Guest vCPU execution of RDTSC/RDTSCP and access to MSR_IA32_TSC_AUX do not cause a VM exit to the hypervisor. The hypervisor uses MSR_IA32_TSC_AUX to record the CPU ID, so the CPU ID held in MSR_IA32_TSC_AUX might be changed by the guest.

RDTSCP is widely used by the hypervisor to identify the current CPU ID. Because access to MSR_IA32_TSC_AUX does not cause a VM exit, the ACRN hypervisor saves and restores the MSR_IA32_TSC_AUX value on every VM exit and VM entry. Until the hypervisor has restored the host CPU ID, rdtscp must not be called, because it could return the vCPU ID instead of the host CPU ID.

The MSR_IA32_TIME_STAMP_COUNTER is emulated by ACRN hypervisor, with a simple implementation based on TSC_OFFSET (enabled in VMX_PROC_VM_EXEC_CONTROLS):

- For read: val = rdtsc() + exec_vmread64(VMX_TSC_OFFSET_FULL)

- For write: exec_vmwrite64(VMX_TSC_OFFSET_FULL, val - rdtsc())
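The offset arithmetic can be tried out without hardware. In the sketch below, sim_host_tsc and sim_tsc_offset are hypothetical stand-ins for the physical TSC and the VMCS TSC offset field, and the sim_ functions replace rdtsc(), exec_vmread64(), and exec_vmwrite64(); none of these names come from ACRN. Unsigned 64-bit wraparound is what makes a guest value smaller than the host TSC work.

```c
#include <stdint.h>

/* Stand-ins for hardware state (assumptions for illustration only). */
static uint64_t sim_host_tsc;    /* what RDTSC would return on the host */
static uint64_t sim_tsc_offset;  /* what the VMCS TSC offset field would hold */

static uint64_t sim_rdtsc(void)
{
    return sim_host_tsc;
}

/* Guest read of the TSC MSR: host TSC plus the current offset. */
static uint64_t sim_guest_tsc_read(void)
{
    return sim_rdtsc() + sim_tsc_offset;
}

/* Guest write of the TSC MSR: store the difference as the new offset.
 * If val is below the host TSC, the subtraction wraps modulo 2^64, and the
 * addition in sim_guest_tsc_read() wraps back to val. */
static void sim_guest_tsc_write(uint64_t val)
{
    sim_tsc_offset = val - sim_rdtsc();
}
```

After a guest write, the guest TSC continues to advance at the host rate, because only the offset is stored.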

ART Virtualization¶

The invariant TSC is based on the invariant timekeeping hardware (called Always Running Timer or ART), that runs at the core crystal clock frequency. The ratio defined by the CPUID leaf 15H expresses the frequency relationship between the ART hardware and the TSC.

If CPUID.15H:EBX[31:0] != 0 and CPUID.80000007H:EDX[InvariantTSC] = 1, the following linearity relationship holds between the TSC and the ART hardware:

TSC_Value = (ART_Value * CPUID.15H:EBX[31:0]) / CPUID.15H:EAX[31:0] + K

Where K is an offset that can be adjusted by a privileged agent. When ART hardware is reset, both invariant TSC and K are also reset.

The guiding principle of ART virtualization (vART) is that software that runs natively should also run in a VM. The vART solution is:

- Present the ART capability to guest through CPUID leaf 15H for CPUID.15H:EBX[31:0] and CPUID.15H:EAX[31:0].

- Passthrough devices see the physical ART_Value (vART_Value = pART_Value)

- Relationship between the ART and TSC in guest is:

  vTSC_Value = (vART_Value * CPUID.15H:EBX[31:0]) / CPUID.15H:EAX[31:0] + vK

  Where vK = K + VMCS.TSC_OFFSET.

- If vK or vTSC_Value are changed by guest, we change the VMCS.TSC_OFFSET accordingly.

- K should never be changed by hypervisor.
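The ART to TSC relationship and the vK rule can be checked numerically. The following is a minimal sketch with hypothetical function names. The numerator and denominator correspond to CPUID.15H:EBX and CPUID.15H:EAX. The multiplication is split into quotient and remainder parts so that the intermediate product fits in 64 bits for large ART values, which is an implementation choice of this sketch rather than something the text prescribes.

```c
#include <assert.h>
#include <stdint.h>

/* TSC = (ART * num) / den + k, where num = CPUID.15H:EBX and den = CPUID.15H:EAX.
 * Splitting art into quotient and remainder gives the same floor result as
 * (art * num) / den without overflowing the intermediate product. */
static uint64_t art_to_tsc(uint64_t art, uint32_t num, uint32_t den, uint64_t k)
{
    assert(den != 0U);
    uint64_t quot = art / den;
    uint64_t rem = art % den;
    return quot * num + (rem * num) / den + k;
}

/* vK = K + VMCS.TSC_OFFSET (modulo 2^64). */
static uint64_t guest_vk(uint64_t k, uint64_t tsc_offset)
{
    return k + tsc_offset;
}

/* Offset that makes the guest observe new_vtsc at the given ART value,
 * leaving K itself untouched. */
static uint64_t tsc_offset_for_guest_write(uint64_t art, uint32_t num,
                                           uint32_t den, uint64_t k,
                                           uint64_t new_vtsc)
{
    return new_vtsc - art_to_tsc(art, num, den, k);
}
```

For example, with a ratio of 176/2 and K = 0, an ART value of 1000 corresponds to a TSC value of 88000. If the guest then sets its TSC to 500, only the offset changes, and evaluating the guest relationship with vK reproduces 500.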

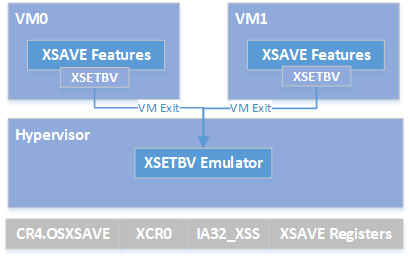

XSAVE Emulation¶

The XSAVE feature set comprises eight instructions:

- XGETBV and XSETBV allow software to read and write the extended control register XCR0, which controls the operation of the XSAVE feature set.

- XSAVE, XSAVEOPT, XSAVEC, and XSAVES are four instructions that save processor state to memory.

- XRSTOR and XRSTORS are corresponding instructions that load processor state from memory.

- XGETBV, XSAVE, XSAVEOPT, XSAVEC, and XRSTOR can be executed at any privilege level;

- XSETBV, XSAVES, and XRSTORS can be executed only if CPL = 0.

Enabling the XSAVE feature set is controlled by XCR0 (through XSETBV) and IA32_XSS MSR. Refer to the Intel SDM Volume 1 chapter 13 for more details.

Figure 131 ACRN Hypervisor XSAVE emulation

By default, ACRN enables XSAVES/XRSTORS in VMX_PROC_VM_EXEC_CONTROLS2, which allows the guest to use the XSAVE feature set. Because guest execution of XSETBV always triggers an XSETBV VM exit, ACRN must handle XCR0 access itself.

ACRN emulates XSAVE features through the following rules:

1. Enumerate CPUID.01H for native XSAVE feature support.

2. If step 1 reports support, enable XSAVE in the hypervisor through CR4.OSXSAVE.

3. Emulate the XSAVE-related CPUID.01H and CPUID.0DH leaves for the guest.

4. Emulate XCR0 access through xsetbv_vmexit_handler.

5. Pass through access to the IA32_XSS MSR to the guest.

6. The ACRN hypervisor does NOT use any feature of XSAVE.

7. Because ACRN emulates vCPUs in partition mode, rules 5 and 6 mean that a guest vCPU fully controls the XSAVE feature in non-root mode.
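Emulating XCR0 access on an XSETBV VM exit mostly means validating the value the guest wants to write. The sketch below shows a subset of the architectural checks described for XSETBV in the Intel SDM. It is not the body of xsetbv_vmexit_handler: the function name and parameters are hypothetical, supported_mask is assumed to come from the XCR0 bits the hypervisor exposes through CPUID.0DH, and rules for other state components (such as AVX-512) are omitted.

```c
#include <stdbool.h>
#include <stdint.h>

#define XCR0_X87 (1ULL << 0)  /* x87 state, must always be set */
#define XCR0_SSE (1ULL << 1)  /* SSE state */
#define XCR0_AVX (1ULL << 2)  /* AVX state, requires SSE */

/* Returns true if the write may be applied; false models injecting #GP. */
static bool toy_xsetbv_is_valid(uint32_t ecx, uint64_t val,
                                uint64_t supported_mask, unsigned int cpl)
{
    if (cpl != 0U) {
        return false;  /* XSETBV can be executed only if CPL = 0 */
    }
    if (ecx != 0U) {
        return false;  /* only XCR0 (ECX = 0) is modeled here */
    }
    if ((val & ~supported_mask) != 0U) {
        return false;  /* a bit the platform does not support is set */
    }
    if ((val & XCR0_X87) == 0U) {
        return false;  /* XCR0[0] cannot be cleared */
    }
    if (((val & XCR0_AVX) != 0U) && ((val & XCR0_SSE) == 0U)) {
        return false;  /* AVX cannot be enabled without SSE */
    }
    return true;
}
```

With a supported mask of 0x7, a guest write of 0x7 at CPL 0 passes, while 0x5 (AVX without SSE) and 0x6 (x87 cleared) are rejected.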