Virtio devices high-level design¶

The ACRN Hypervisor follows the Virtual I/O Device (virtio) specification to realize I/O virtualization for many performance-critical devices supported in the ACRN project. Adopting the virtio specification lets us reuse many frontend virtio drivers already available in a Linux-based User OS, drastically reducing potential development effort for frontend virtio drivers. To further reduce the development effort of backend virtio drivers, the hypervisor provides the virtio backend service (VBS) APIs, which make it straightforward to implement a virtio device in the hypervisor.

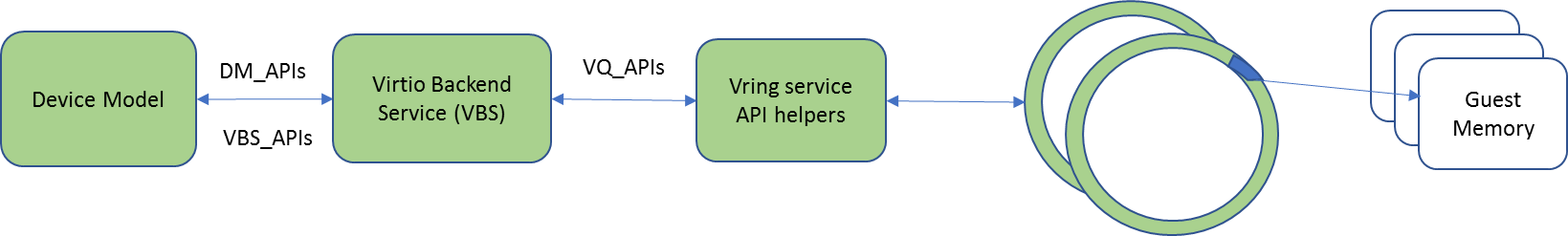

The virtio APIs can be divided into 3 groups: DM APIs, virtio backend service (VBS) APIs, and virtqueue (VQ) APIs, as shown in Figure 114.

- DM APIs are exported by the DM, and are mainly used during the device initialization phase and runtime. The DM APIs also include PCIe emulation APIs because each virtio device is a PCIe device in the SOS and UOS.

- VBS APIs are mainly exported by the VBS and related modules. Generally they are callbacks to be registered into the DM.

- VQ APIs are used by a virtio backend device to access and parse information from the shared memory between the frontend and backend device drivers.

The virtio framework is the para-virtualization specification that ACRN follows to implement I/O virtualization of performance-critical devices such as audio, eAVB/TSN, IPU, and CSMU devices. This section first gives an overview of virtio history, motivation, and advantages, and highlights key virtio concepts. It then describes ACRN’s virtio architectures and elaborates on the ACRN virtio APIs. Finally, it introduces the virtio devices currently supported by ACRN.

Virtio introduction¶

Virtio is an abstraction layer over devices in a para-virtualized hypervisor. Virtio was developed by Rusty Russell when he worked at IBM research to support his lguest hypervisor in 2007, and it quickly became the de facto standard for KVM’s para-virtualized I/O devices.

Virtio is very popular for virtual I/O devices because it provides a straightforward, efficient, standard, and extensible mechanism, and eliminates the need for boutique, per-environment, or per-OS mechanisms. For example, rather than having a variety of device emulation mechanisms, virtio provides a common frontend driver framework that standardizes device interfaces and increases code reuse across different virtualization platforms.

Given the advantages of virtio, ACRN also follows the virtio specification.

Key Concepts¶

To better understand virtio, especially its usage in ACRN, we’ll highlight several key virtio concepts important to ACRN:

- Frontend virtio driver (FE)

Virtio adopts a frontend-backend architecture that enables a simple but flexible framework for both frontend and backend virtio drivers. The FE driver merely needs to offer services to configure the interface, pass messages, produce requests, and kick the backend virtio driver. As a result, the FE driver is easy to implement and the performance overhead of emulating a device is eliminated.

- Backend virtio driver (BE)

Similar to FE driver, the BE driver, running either in user-land or kernel-land of the host OS, consumes requests from the FE driver and sends them to the host native device driver. Once the requests are done by the host native device driver, the BE driver notifies the FE driver that the request is complete.

Note: to distinguish BE driver from host native device driver, the host native device driver is called “native driver” in this document.

- Straightforward: virtio devices as standard devices on existing buses

Instead of creating new device buses from scratch, virtio devices are built on existing buses. This gives a straightforward way for both FE and BE drivers to interact with each other. For example, FE driver could read/write registers of the device, and the virtual device could interrupt FE driver, on behalf of the BE driver, in case something of interest is happening.

Currently virtio supports PCI/PCIe bus and MMIO bus. In ACRN, only PCI/PCIe bus is supported, and all the virtio devices share the same vendor ID 0x1AF4.

Note: For MMIO, the “bus” is a bit of an overstatement, since it is basically a few descriptors describing the devices.

- Efficient: batching operation is encouraged

Batching operation and deferred notification are important to achieve high-performance I/O, since notification between the FE and BE drivers usually involves an expensive exit of the guest. Therefore, batching operation and notification suppression are highly encouraged where possible. This gives an efficient implementation for performance-critical devices.

- Standard: virtqueue

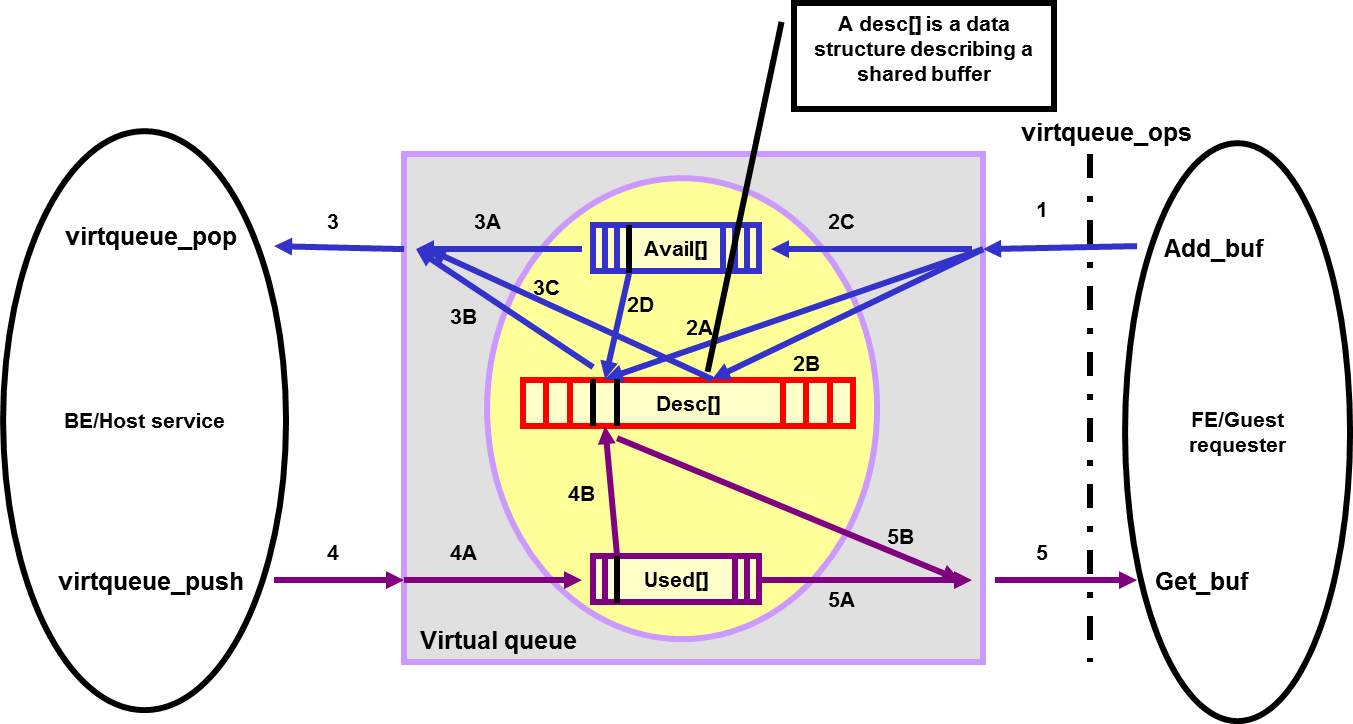

All virtio devices share a standard ring buffer and descriptor mechanism, called a virtqueue, shown in Figure 115. A virtqueue is a queue of scatter-gather buffers. There are three important methods on virtqueues:

- add_buf is for adding a request/response buffer in a virtqueue,

- get_buf is for getting a response/request in a virtqueue, and

- kick is for notifying the other side for a virtqueue to consume buffers.

The virtqueues are created in guest physical memory by the FE drivers. BE drivers only need to parse the virtqueue structures to obtain the requests and process them. How a virtqueue is organized is specific to the Guest OS. In the Linux implementation of virtio, the virtqueue is implemented as a ring buffer structure called vring.

In ACRN, the virtqueue APIs can be leveraged directly so that users don’t need to worry about the details of the virtqueue. (Refer to guest OS for more details about the virtqueue implementation.)
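The three virtqueue operations can be illustrated with a toy ring. This is a minimal sketch only: it does not reproduce the vring memory layout or any ACRN API, and every `toy_` name is hypothetical. It also shows the batching idea described earlier, where several buffers are queued before a single kick.

```c
#include <stdbool.h>
#include <stddef.h>

#define TOY_VQ_SIZE 8u /* number of slots; a power of two */

/* One queued buffer: an address and a length. */
struct toy_buf {
    void  *addr;
    size_t len;
};

/* Toy queue shared by a producer and a consumer. */
struct toy_vq {
    struct toy_buf ring[TOY_VQ_SIZE];
    unsigned int   head;  /* total buffers added so far */
    unsigned int   tail;  /* total buffers consumed so far */
    unsigned int   kicks; /* number of notifications sent */
};

/* add_buf: queue one buffer; fails when the ring is full. */
static bool toy_add_buf(struct toy_vq *vq, void *addr, size_t len)
{
    if (vq->head - vq->tail == TOY_VQ_SIZE)
        return false;
    vq->ring[vq->head % TOY_VQ_SIZE] = (struct toy_buf){ addr, len };
    vq->head++;
    return true;
}

/* get_buf: take the oldest queued buffer; fails when the ring is empty. */
static bool toy_get_buf(struct toy_vq *vq, struct toy_buf *out)
{
    if (vq->head == vq->tail)
        return false;
    *out = vq->ring[vq->tail % TOY_VQ_SIZE];
    vq->tail++;
    return true;
}

/* kick: notify the other side (here it only counts notifications). */
static void toy_kick(struct toy_vq *vq)
{
    vq->kicks++;
}
```

A producer would typically call `toy_add_buf()` several times and `toy_kick()` once, so that the consumer drains all pending buffers with repeated `toy_get_buf()` calls after a single notification.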

- Extensible: feature bits

A simple extensible feature negotiation mechanism exists for each virtual device and its driver. Each virtual device could claim its device specific features while the corresponding driver could respond to the device with the subset of features the driver understands. The feature mechanism enables forward and backward compatibility for the virtual device and driver.

- Virtio Device Modes

The virtio specification defines three modes of virtio devices: a legacy mode device, a transitional mode device, and a modern mode device. A legacy mode device is compliant with virtio specification version 0.95, a transitional mode device is compliant with both the 0.95 and 1.0 spec versions, and a modern mode device is only compatible with the version 1.0 specification.

In ACRN, all the virtio devices are transitional devices, meaning that they should be compatible with both 0.95 and 1.0 versions of virtio specification.
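Feature negotiation and device modes are connected: in virtio 1.0, feature bit 32 (VIRTIO_F_VERSION_1) marks a driver that uses the 1.0 interface. The following is a minimal sketch, assuming a transitional device that offers this bit and treats the negotiated set as the intersection of what the device offers and what the driver accepts. The `toy_` names are hypothetical and are not ACRN APIs.

```c
#include <stdbool.h>
#include <stdint.h>

/* Feature bit 32 in virtio 1.0: the driver speaks the 1.0 interface. */
#define TOY_F_VERSION_1 (1ULL << 32)

/* The negotiated set is what both the device and the driver support. */
static uint64_t toy_negotiate(uint64_t device_features, uint64_t driver_features)
{
    return device_features & driver_features;
}

/* A transitional device can tell which interface the driver is using. */
static bool toy_driver_is_modern(uint64_t negotiated)
{
    return (negotiated & TOY_F_VERSION_1) != 0;
}
```

A driver that does not understand a feature simply leaves the bit clear, which is how older drivers keep working with newer devices.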

- Virtio Device Discovery

Virtio devices are commonly implemented as PCI/PCIe devices. A virtio device using virtio over PCI/PCIe bus must expose an interface to the Guest OS that meets the PCI/PCIe specifications.

Conventionally, any PCI device with Vendor ID 0x1AF4, PCI_VENDOR_ID_REDHAT_QUMRANET, and Device ID 0x1000 through 0x107F inclusive is a virtio device. Among the Device IDs, the legacy/transitional mode virtio devices occupy the first 64 IDs ranging from 0x1000 to 0x103F, while the range 0x1040-0x107F belongs to virtio modern devices. In addition, the Subsystem Vendor ID should reflect the PCI/PCIe vendor ID of the environment, and the Subsystem Device ID indicates which virtio device is supported by the device.
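The ID ranges above translate directly into a small classification routine. This is an illustrative sketch; the enum and function names are hypothetical, and only the numeric ranges come from the text.

```c
#include <stdint.h>

enum toy_virtio_kind {
    TOY_NOT_VIRTIO,
    TOY_LEGACY_OR_TRANSITIONAL, /* Device ID 0x1000..0x103F */
    TOY_MODERN                  /* Device ID 0x1040..0x107F */
};

/* Classify a PCI function by its Vendor ID and Device ID. */
static enum toy_virtio_kind toy_classify(uint16_t vendor, uint16_t device)
{
    if (vendor != 0x1AF4 || device < 0x1000 || device > 0x107F)
        return TOY_NOT_VIRTIO;
    return device <= 0x103F ? TOY_LEGACY_OR_TRANSITIONAL : TOY_MODERN;
}
```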

Virtio Frameworks¶

This section describes the overall architecture of virtio, and then introduces the ACRN-specific implementations of the virtio framework.

Architecture¶

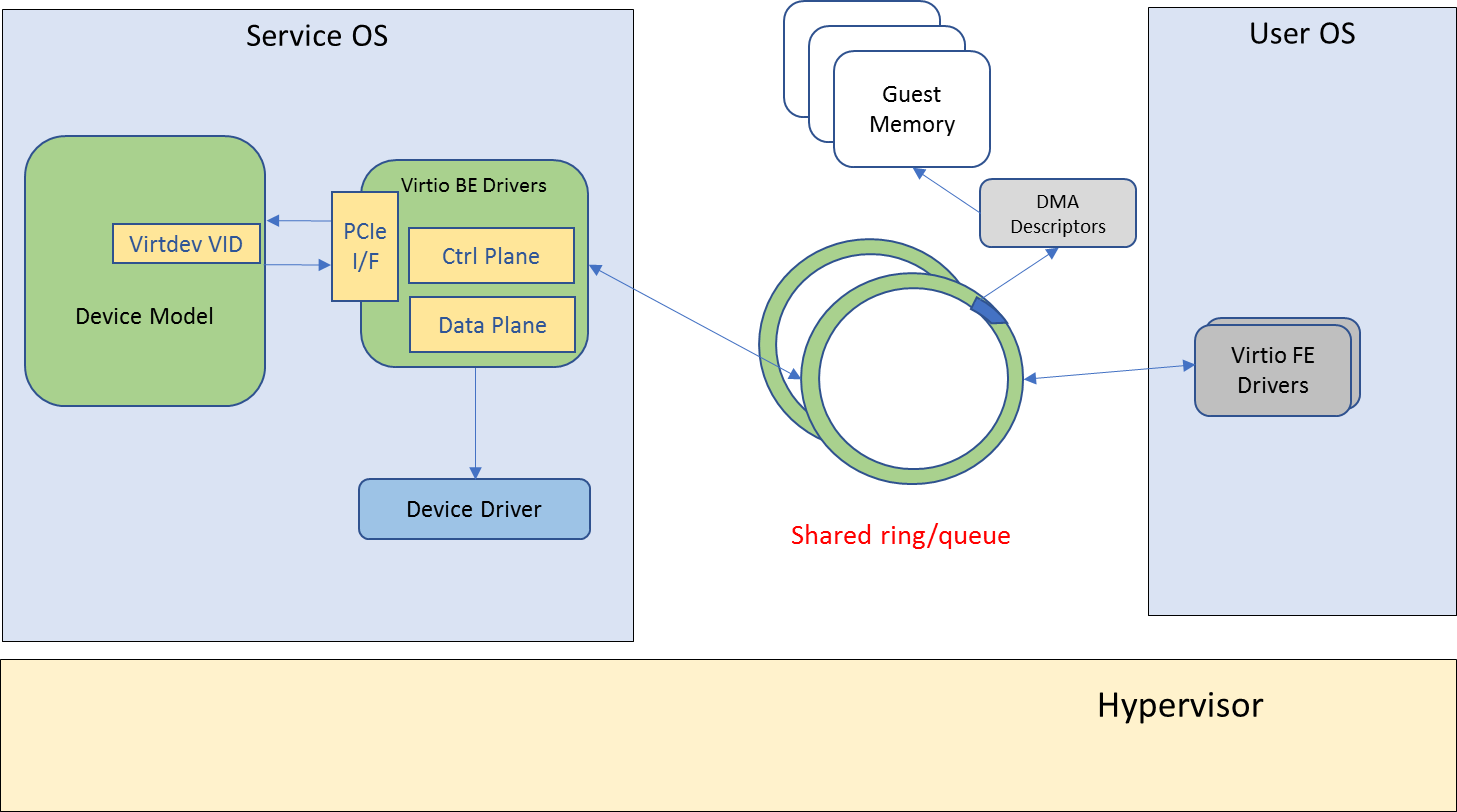

Virtio adopts a frontend-backend architecture, as shown in Figure 116. Basically the FE and BE driver communicate with each other through shared memory, via the virtqueues. The FE driver talks to the BE driver in the same way it would talk to a real PCIe device. The BE driver handles requests from the FE driver, and notifies the FE driver if the request has been processed.

In addition to virtio’s frontend-backend architecture, both FE and BE drivers follow a layered architecture, as shown in Figure 117. Each side has three layers: transports, core models, and device types. All virtio devices share the same virtio infrastructure, including virtqueues, feature mechanisms, configuration space, and buses.

Virtio Framework Considerations¶

How to realize the virtio framework is specific to a hypervisor implementation. In ACRN, the virtio framework implementations can be classified into two types, virtio backend service in user-land (VBS-U) and virtio backend service in kernel-land (VBS-K), according to where the virtio backend service (VBS) is located. Although different in BE drivers, both VBS-U and VBS-K share the same FE drivers. The reason behind the two virtio implementations is to meet the requirement of supporting a large number of diverse I/O devices in the ACRN project.

When developing a virtio BE device driver, the device owner should choose carefully between the VBS-U and VBS-K. Generally VBS-U targets non-performance-critical devices, but enables easy development and debugging. VBS-K targets performance critical devices.

The next two sections introduce ACRN’s two implementations of the virtio framework.

User-Land Virtio Framework¶

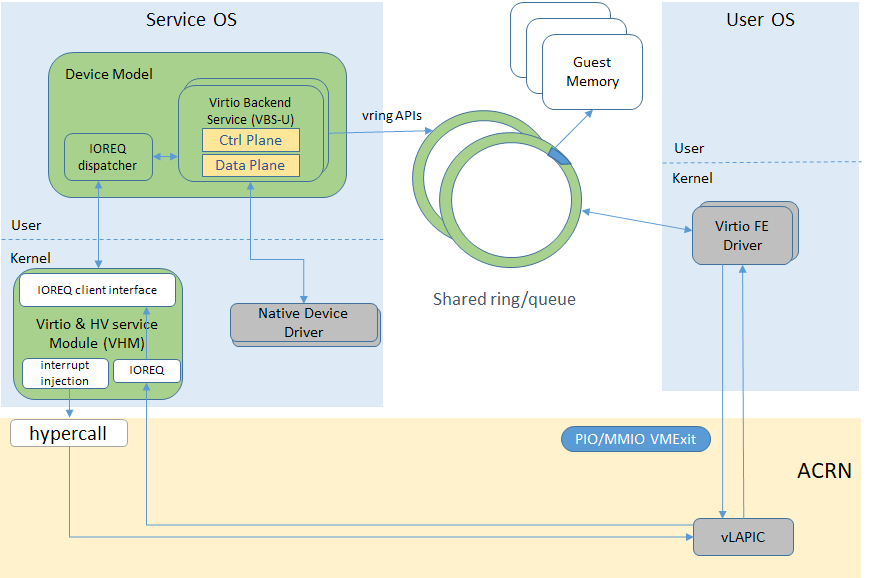

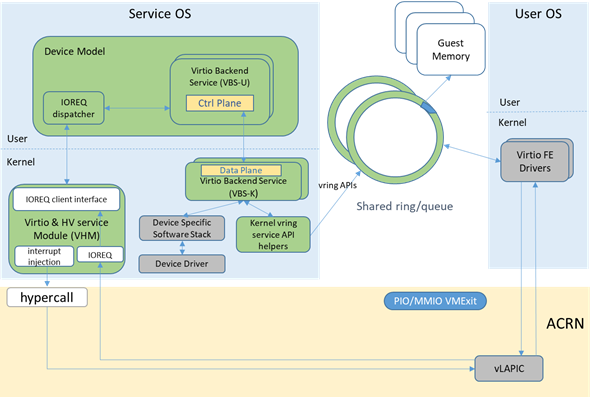

The architecture of ACRN user-land virtio framework (VBS-U) is shown in Figure 118.

The FE driver talks to the BE driver as if it were talking with a PCIe device. This means for “control plane”, the FE driver could poke device registers through PIO or MMIO, and the device will interrupt the FE driver when something happens. For “data plane”, the communication between the FE and BE driver is through shared memory, in the form of virtqueues.

On the service OS side where the BE driver is located, there are several key components in ACRN, including device model (DM), virtio and HV service module (VHM), VBS-U, and user-level vring service API helpers.

DM bridges the FE driver and BE driver since each VBS-U module emulates a PCIe virtio device. VHM bridges DM and the hypervisor by providing remote memory map APIs and notification APIs. VBS-U accesses the virtqueue through the user-level vring service API helpers.

Kernel-Land Virtio Framework¶

ACRN supports two kernel-land virtio frameworks: VBS-K, which is designed from scratch for ACRN, and Vhost, which is compatible with Linux Vhost.

VBS-K framework¶

The architecture of ACRN VBS-K is shown in Figure 119 below.

Generally VBS-K provides acceleration towards performance critical devices emulated by VBS-U modules by handling the “data plane” of the devices directly in the kernel. When VBS-K is enabled for certain devices, the kernel-land vring service API helpers, instead of the user-land helpers, are used to access the virtqueues shared by the FE driver. Compared to VBS-U, this eliminates the overhead of copying data back-and-forth between user-land and kernel-land within service OS, but pays with the extra implementation complexity of the BE drivers.

Except for the differences mentioned above, VBS-K still relies on VBS-U for feature negotiations between FE and BE drivers. This means the “control plane” of the virtio device still remains in VBS-U. When feature negotiation is done, which is determined by FE driver setting up an indicative flag, VBS-K module will be initialized by VBS-U. Afterwards, all request handling will be offloaded to the VBS-K in kernel.

Finally the FE driver is not aware of how the BE driver is implemented, either in VBS-U or VBS-K. This saves engineering effort regarding FE driver development.
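The hand-off from the user-land control plane to the kernel-land data plane can be sketched as a small state machine. The text does not name the "indicative flag"; this sketch assumes it is the DRIVER_OK bit (0x04) of the virtio device status register, and all `toy_` names are hypothetical rather than ACRN APIs.

```c
#include <stdbool.h>
#include <stdint.h>

#define TOY_STATUS_DRIVER_OK 0x04u /* DRIVER_OK bit of the virtio status register */

enum toy_owner { TOY_OWNER_VBSU, TOY_OWNER_VBSK };

struct toy_backend {
    bool    has_kernel_module; /* device is configured to use a kernel backend */
    bool    kernel_started;    /* data plane has been handed to the kernel */
    uint8_t status;            /* last status value written by the FE driver */
};

/* Control plane: runs in user land on every device status write. */
static void toy_set_status(struct toy_backend *be, uint8_t status)
{
    be->status = status;
    if (status == 0) {
        /* Writing 0 resets the device, so the data plane returns to user land. */
        be->kernel_started = false;
    } else if ((status & TOY_STATUS_DRIVER_OK) && be->has_kernel_module) {
        /* Negotiation is complete: start the kernel backend. */
        be->kernel_started = true;
    }
}

/* Data plane: reports which side handles virtqueue requests right now. */
static enum toy_owner toy_data_plane_owner(const struct toy_backend *be)
{
    return be->kernel_started ? TOY_OWNER_VBSK : TOY_OWNER_VBSU;
}
```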

Figure 119 ACRN Kernel Land Virtio Framework

Vhost framework¶

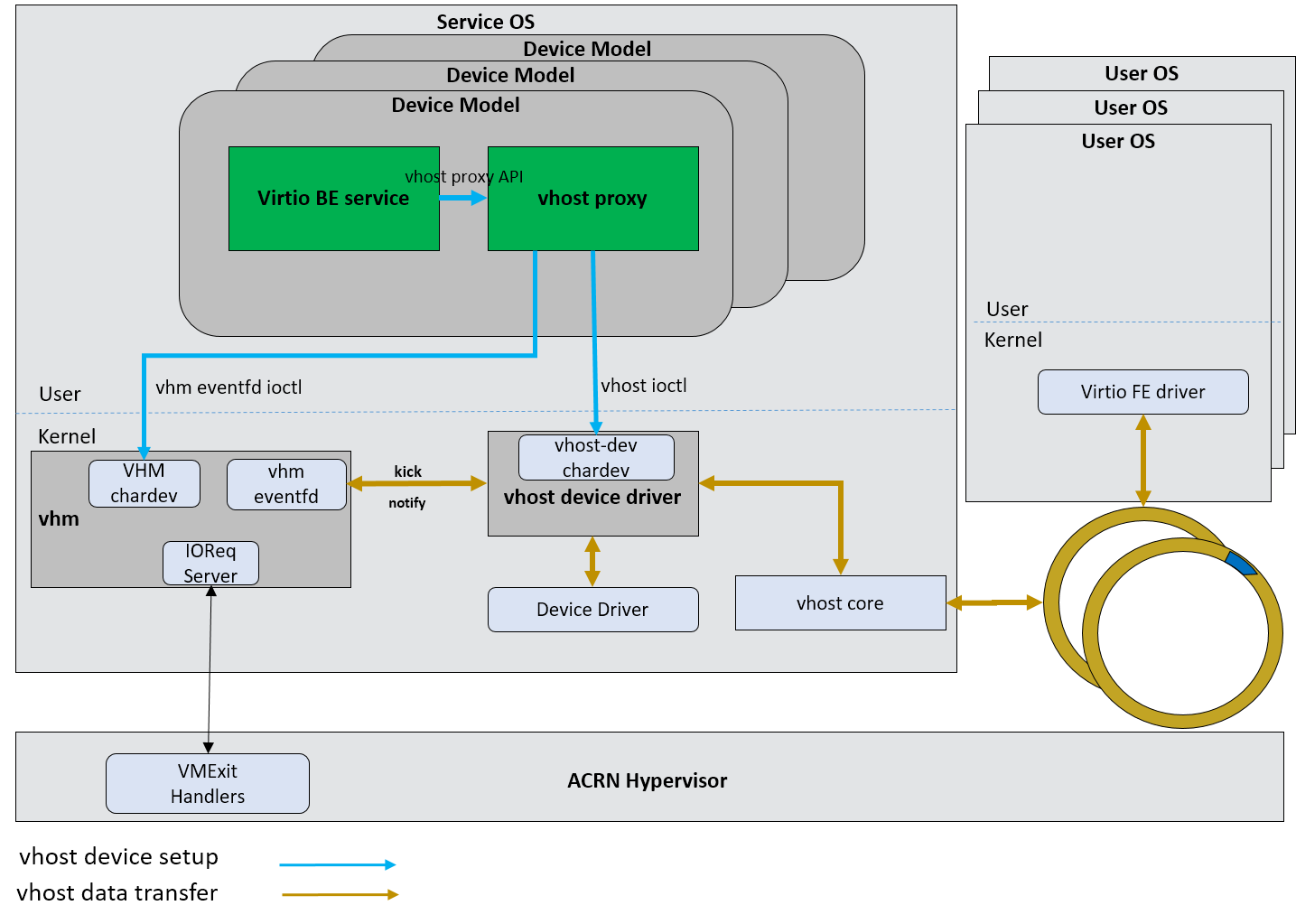

Vhost is similar to VBS-K. Vhost is a common solution upstreamed in the Linux kernel, with several kernel mediators based on it.

Architecture¶

Vhost/virtio is a semi-virtualized device abstraction interface specification that has been widely applied in various virtualization solutions. Vhost is a specific kind of virtio where the data plane is put into host kernel space to reduce the context switch while processing the IO request. It is usually called “virtio” when used as a front-end driver in a guest operating system or “vhost” when used as a back-end driver in a host. Compared with a pure virtio solution on a host, vhost uses the same frontend driver as virtio solution and can achieve better performance. Figure 120 shows the vhost architecture on ACRN.

Figure 120 Vhost Architecture on ACRN

Compared with a userspace virtio solution, vhost decomposes data plane from user space to kernel space. The vhost general data plane workflow can be described as:

- vhost proxy creates two eventfds per virtqueue: one for kick (an ioeventfd) and one for call (an irqfd).

- vhost proxy registers the two eventfds to VHM through the VHM character device:

  - Ioeventfd is bound to a PIO/MMIO range. If it is a PIO, it is registered with (fd, port, len, value). If it is an MMIO, it is registered with (fd, addr, len).

  - Irqfd is registered with an MSI vector.

- vhost proxy sets the two fds to vhost kernel through ioctl of vhost device.

- vhost starts polling the kick fd and wakes up when the guest kicks a virtqueue, which results in an event_signal on the kick fd by the VHM ioeventfd.

- vhost device in kernel signals on the irqfd to notify the guest.
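The kick and call descriptors in this workflow are ordinary Linux eventfds. The sketch below uses only the standard `eventfd(2)`, `read(2)`, and `write(2)` calls to show how a pair is created and how signals accumulate in the counter; the `toy_` helpers are hypothetical, and the registration with VHM and vhost is not shown.

```c
#include <stdint.h>
#include <sys/eventfd.h>
#include <unistd.h>

/* One kick fd and one call fd, as created per virtqueue. */
struct toy_vq_fds {
    int kick_fd;
    int call_fd;
};

/* Create both eventfds; returns 0 on success, -1 on failure. */
static int toy_vq_fds_create(struct toy_vq_fds *fds)
{
    fds->kick_fd = eventfd(0, EFD_NONBLOCK);
    fds->call_fd = eventfd(0, EFD_NONBLOCK);
    if (fds->kick_fd < 0 || fds->call_fd < 0) {
        if (fds->kick_fd >= 0)
            close(fds->kick_fd);
        if (fds->call_fd >= 0)
            close(fds->call_fd);
        return -1;
    }
    return 0;
}

/* Signal an eventfd by adding 1 to its counter. */
static int toy_signal(int fd)
{
    uint64_t one = 1;
    return write(fd, &one, sizeof(one)) == (ssize_t)sizeof(one) ? 0 : -1;
}

/* Read and reset the counter; returns 0 when nothing is pending. */
static uint64_t toy_drain(int fd)
{
    uint64_t count = 0;
    if (read(fd, &count, sizeof(count)) != (ssize_t)sizeof(count))
        return 0;
    return count;
}
```

Because an eventfd is a counter, several kicks that arrive before the poller wakes up are coalesced into one wake-up, which fits the batching behavior that virtio encourages.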

Ioeventfd implementation¶

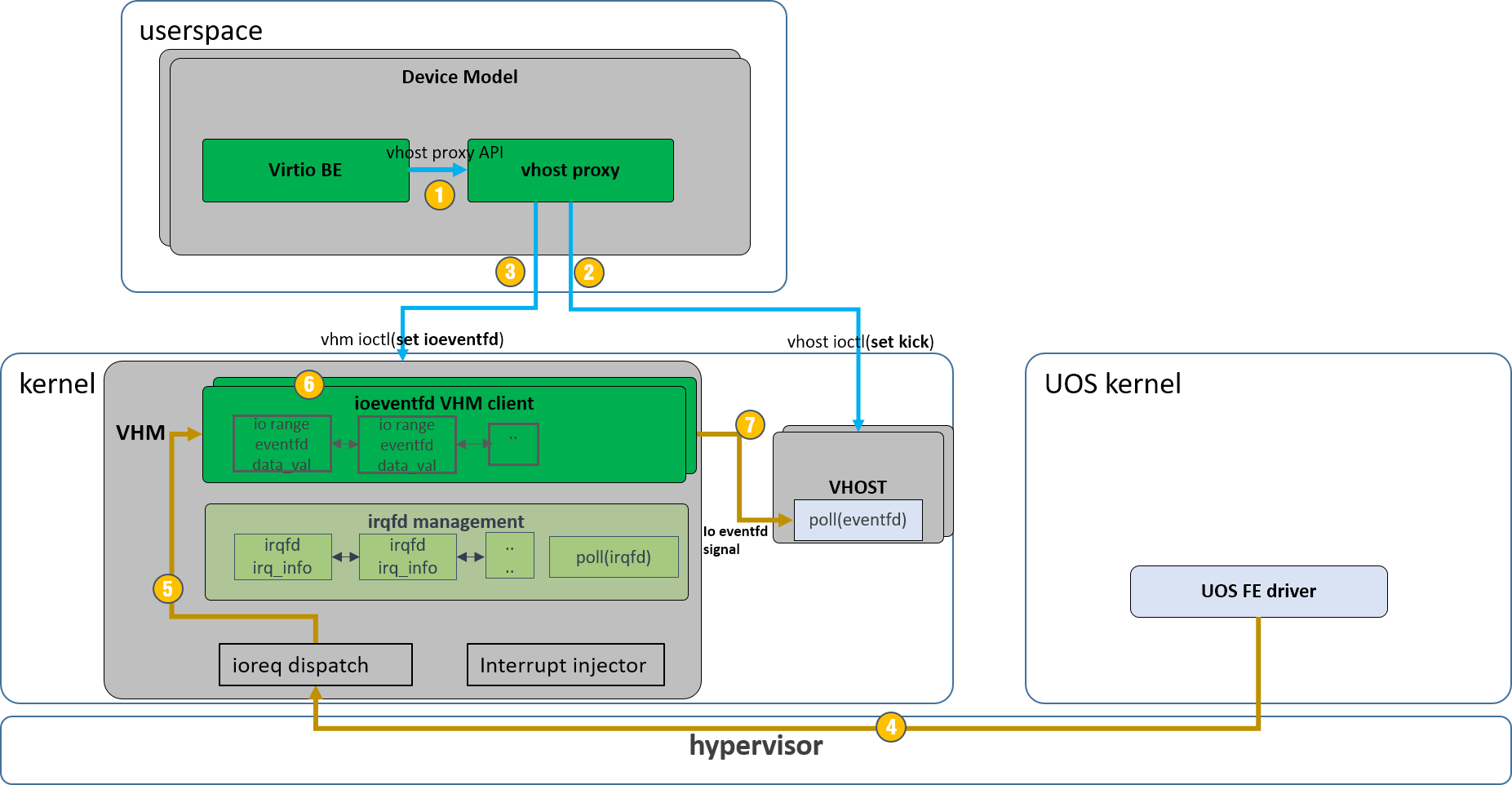

The ioeventfd module is implemented in VHM. It enhances a registered eventfd so that it listens for I/O requests (PIO/MMIO) from the VHM ioreq module and signals the eventfd when needed. Figure 121 shows the general workflow of ioeventfd.

Figure 121 ioeventfd general work flow

The workflow can be summarized as:

- Vhost device init: the vhost proxy creates two eventfds, one for ioeventfd and one for irqfd.

- The ioeventfd is passed to the vhost kernel driver.

- The ioeventfd is passed to the VHM driver.

- The UOS FE driver triggers an ioreq, which the hypervisor forwards to the SOS.

- The VHM driver dispatches the ioreq to the related VHM client.

- The ioeventfd VHM client traverses the io_range list and finds the corresponding eventfd.

- The client triggers the signal on the related eventfd.
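The lookup step in this workflow can be sketched as a linear search over registered ranges. The structure layout and the exact-match rule on address and length are assumptions made for illustration; the real VHM data structures are not shown in this document.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical registration record: one PIO/MMIO range bound to an eventfd. */
struct toy_io_range {
    uint64_t addr; /* port number or MMIO address */
    uint32_t len;  /* access width in bytes */
    int      fd;   /* eventfd to signal on a matching write */
};

/* Return the eventfd registered for this access, or -1 if there is none. */
static int toy_ioeventfd_lookup(const struct toy_io_range *list, size_t n,
                                uint64_t addr, uint32_t len)
{
    for (size_t i = 0; i < n; i++) {
        if (list[i].addr == addr && list[i].len == len)
            return list[i].fd;
    }
    return -1;
}
```

A request that matches no entry would fall through to the regular device emulation path instead of signaling an eventfd.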

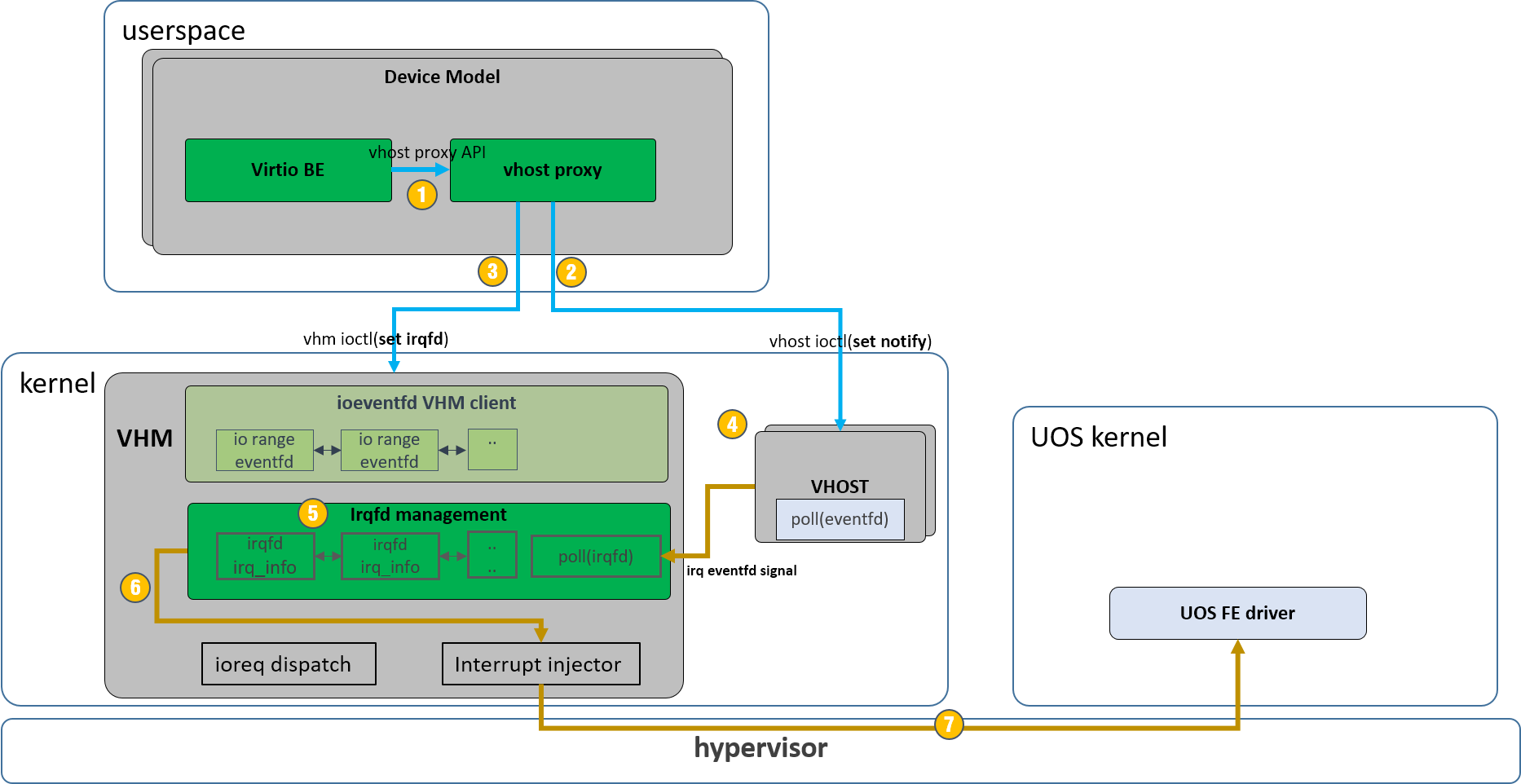

Irqfd implementation¶

The irqfd module is implemented in VHM. It enhances a registered eventfd so that an interrupt is injected into the guest OS when the eventfd is signaled. Figure 122 shows the general flow for irqfd.

Figure 122 irqfd general flow

The workflow can be summarized as:

- Vhost device init: the vhost proxy creates two eventfds, one for ioeventfd and one for irqfd.

- The irqfd is passed to the vhost kernel driver.

- The irqfd is passed to the VHM driver.

- The vhost device driver signals the irq eventfd once the related native transfer is completed.

- The irqfd logic traverses the irqfd list to retrieve the related irq information.

- The irqfd logic injects an interrupt through the VHM interrupt API.

- The interrupt is delivered to the UOS FE driver through the hypervisor.
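The irqfd path can be sketched in the same spirit: a signaled descriptor is looked up in a list, and the stored interrupt information is handed to an injection routine. Everything here is hypothetical, including the record layout and `toy_inject_msi()`, which only records what would be injected and stands in for the VHM interrupt API.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical irqfd record: an eventfd bound to MSI address/data. */
struct toy_irqfd {
    int      fd;
    uint64_t msi_addr;
    uint32_t msi_data;
};

/* Records injections so the flow can be observed without a hypervisor. */
struct toy_inject_log {
    int      count;
    uint64_t last_addr;
    uint32_t last_data;
};

/* Stand-in for the interrupt injection call. */
static void toy_inject_msi(struct toy_inject_log *log, uint64_t addr, uint32_t data)
{
    log->count++;
    log->last_addr = addr;
    log->last_data = data;
}

/* Called when fd is signaled: find its record and inject the interrupt. */
static int toy_irqfd_signaled(const struct toy_irqfd *list, size_t n, int fd,
                              struct toy_inject_log *log)
{
    for (size_t i = 0; i < n; i++) {
        if (list[i].fd == fd) {
            toy_inject_msi(log, list[i].msi_addr, list[i].msi_data);
            return 0;
        }
    }
    return -1; /* unknown fd: nothing is injected */
}
```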

Virtio APIs¶

This section provides details on the ACRN virtio APIs. As outlined previously, the ACRN virtio APIs can be divided into three groups: DM_APIs, VBS_APIs, and VQ_APIs. The following sections will elaborate on these APIs.

VBS-U Key Data Structures¶

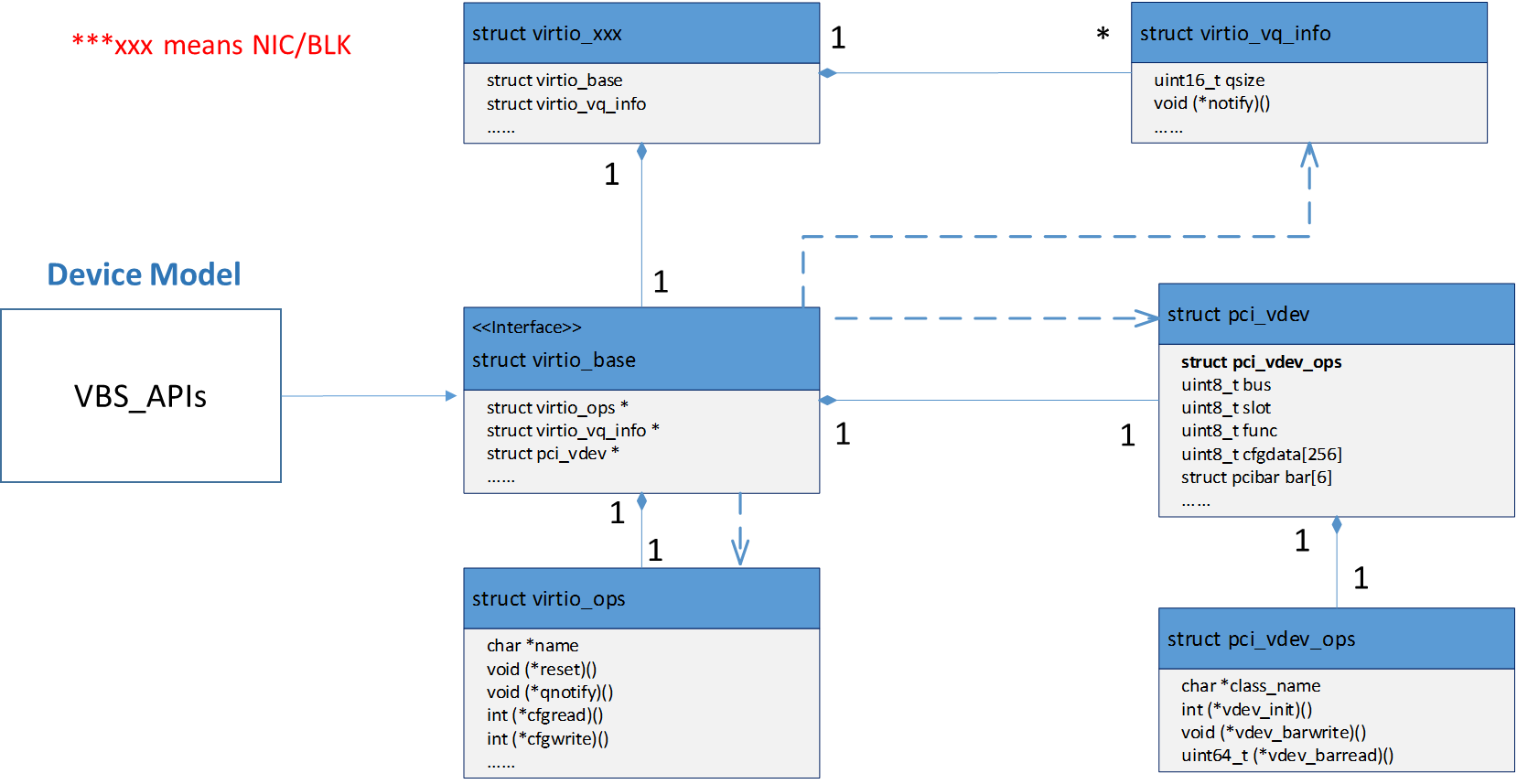

The key data structures for VBS-U are listed as follows, and their relationships are shown in Figure 123.

struct pci_virtio_blk- An example virtio device, such as virtio-blk.

struct virtio_common- A common component to any virtio device.

struct virtio_ops- Virtio specific operation functions for this type of virtio device.

struct pci_vdev- Instance of a virtual PCIe device, and any virtio device is a virtual PCIe device.

struct pci_vdev_ops- PCIe device’s operation functions for this type of device.

struct vqueue_info- Instance of a virtqueue.

Each virtio device is a PCIe device. In addition, each virtio device could have none or multiple virtqueues, depending on the device type. The struct virtio_common is a key data structure to be manipulated by DM, and DM finds other key data structures through it. The struct virtio_ops abstracts a series of virtio callbacks to be provided by device owner.

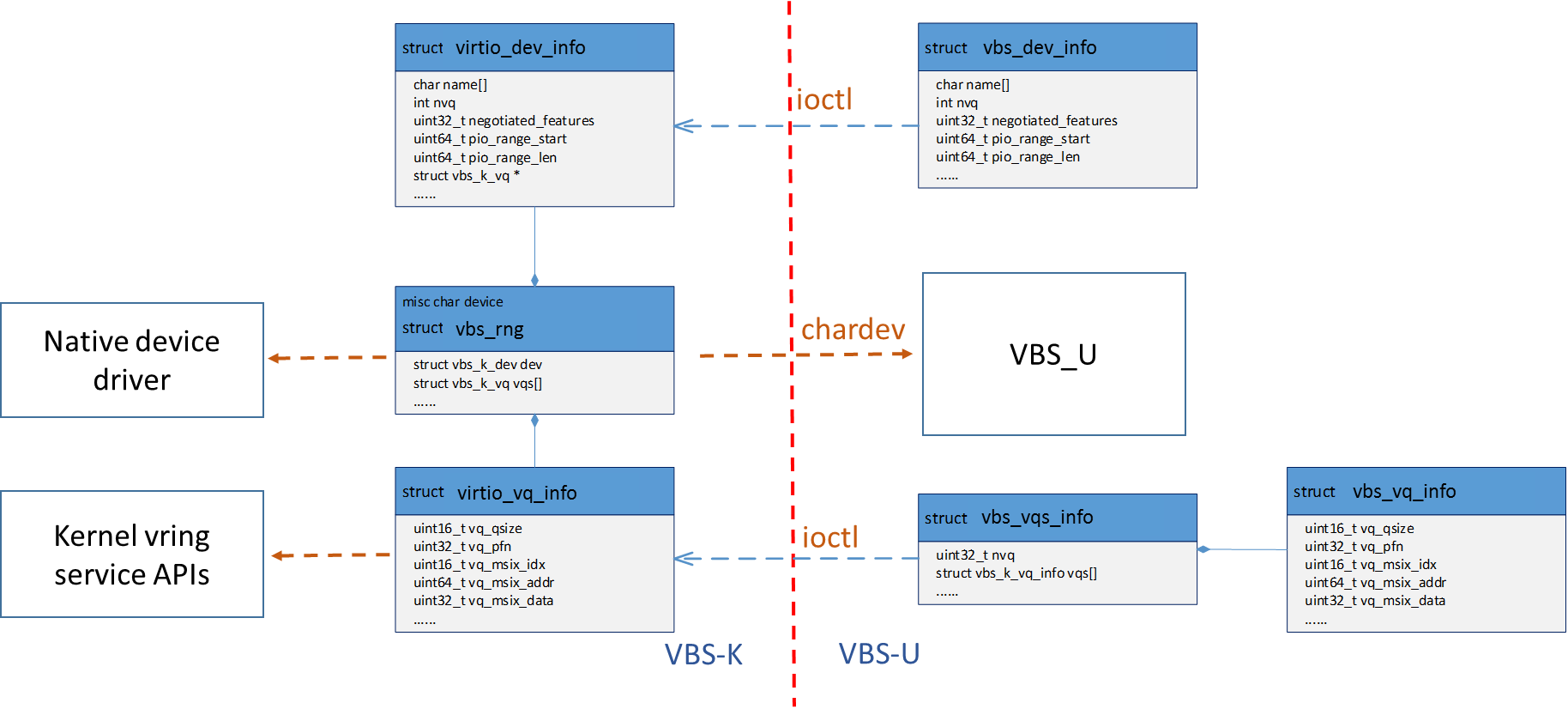

VBS-K Key Data Structures¶

The key data structures for VBS-K are listed as follows, and their relationships are shown in Figure 124.

struct vbs_k_rng- In-kernel VBS-K component handling data plane of a VBS-U virtio device, for example virtio random_num_generator.

struct vbs_k_dev- In-kernel VBS-K component common to all VBS-K.

struct vbs_k_vq- In-kernel VBS-K component to be working with kernel vring service API helpers.

struct vbs_k_dev_info- Virtio device information to be synchronized from VBS-U to VBS-K kernel module.

struct vbs_k_vq_info- A single virtqueue information to be synchronized from VBS-U to VBS-K kernel module.

struct vbs_k_vqs_info- Virtqueue(s) information, of a virtio device, to be synchronized from VBS-U to VBS-K kernel module.

In VBS-K, the struct vbs_k_xxx represents the in-kernel component handling a virtio device’s data plane. It presents a char device for VBS-U to open and register device status after feature negotiation with the FE driver.

The device status includes negotiated features, number of virtqueues, interrupt information, and more. All of this status information is synchronized from VBS-U to VBS-K. In VBS-U, the struct vbs_k_dev_info and struct vbs_k_vqs_info collect all the information and notify VBS-K through ioctls. In VBS-K, the struct vbs_k_dev and struct vbs_k_vq, which are common to all VBS-K modules, are the counterparts that preserve the related information. This information is required by the kernel-land vring service API helpers.

VHOST Key Data Structures¶

The key data structures for vhost are listed as follows.

struct vhost_dev

Public Members

- struct virtio_base *base: backpointer to virtio_base
- int nvqs: number of virtqueues
- int fd: vhost chardev fd
- int vq_idx: first vq’s index in virtio_vq_info
- uint64_t vhost_features: supported virtio defined features
- uint64_t vhost_ext_features: vhost self-defined internal features bits used for communicate between vhost user-space and kernel-space modules
- uint32_t busyloop_timeout: vq busyloop timeout in us
- bool started: whether vhost is started

struct vhost_vq

DM APIs¶

The DM APIs are exported by DM, and they should be used when realizing BE device drivers on ACRN.

void *paddr_guest2host(struct vmctx *ctx, uintptr_t gaddr, size_t len)

Convert guest physical address to host virtual address.

Return

NULL if the conversion fails; the host virtual address on success.

Parameters

- ctx: Pointer to struct vmctx representing VM context.
- gaddr: Guest physical address base.
- len: Guest physical address length.

static void pci_set_cfgdata8(struct pci_vdev *dev, int offset, uint8_t val)

Set virtual PCI device’s configuration space in 1 byte width.

Return

None

Parameters

- dev: Pointer to struct pci_vdev representing virtual PCI device.
- offset: Offset in configuration space.
- val: Value in 1 byte.

static void pci_set_cfgdata16(struct pci_vdev *dev, int offset, uint16_t val)

Set virtual PCI device’s configuration space in 2 bytes width.

Return

None

Parameters

- dev: Pointer to struct pci_vdev representing virtual PCI device.
- offset: Offset in configuration space.
- val: Value in 2 bytes.

static void pci_set_cfgdata32(struct pci_vdev *dev, int offset, uint32_t val)

Set virtual PCI device’s configuration space in 4 bytes width.

Return

None

Parameters

- dev: Pointer to struct pci_vdev representing virtual PCI device.
- offset: Offset in configuration space.
- val: Value in 4 bytes.

static uint8_t pci_get_cfgdata8(struct pci_vdev *dev, int offset)

Get virtual PCI device’s configuration space in 1 byte width.

Return

The configuration value in 1 byte.

Parameters

- dev: Pointer to struct pci_vdev representing virtual PCI device.
- offset: Offset in configuration space.

static uint16_t pci_get_cfgdata16(struct pci_vdev *dev, int offset)

Get virtual PCI device’s configuration space in 2 byte width.

Return

The configuration value in 2 bytes.

Parameters

- dev: Pointer to struct pci_vdev representing virtual PCI device.
- offset: Offset in configuration space.

static uint32_t pci_get_cfgdata32(struct pci_vdev *dev, int offset)

Get virtual PCI device’s configuration space in 4 byte width.

Return

The configuration value in 4 bytes.

Parameters

- dev: Pointer to struct pci_vdev representing virtual PCI device.
- offset: Offset in configuration space.

void pci_lintr_assert(struct pci_vdev *dev)

Assert INTx pin of virtual PCI device.

Return

None

Parameters

- dev: Pointer to struct pci_vdev representing virtual PCI device.

void pci_lintr_deassert(struct pci_vdev *dev)

Deassert INTx pin of virtual PCI device.

Return

None

Parameters

- dev: Pointer to struct pci_vdev representing virtual PCI device.

void pci_generate_msi(struct pci_vdev *dev, int index)

Generate an MSI interrupt to guest.

Return

None

Parameters

- dev: Pointer to struct pci_vdev representing virtual PCI device.
- index: Message data index.

void pci_generate_msix(struct pci_vdev *dev, int index)

Generate an MSI-X interrupt to guest.

Return

None

Parameters

- dev: Pointer to struct pci_vdev representing virtual PCI device.
- index: MSI-X table entry index.
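To make the configuration-space accessors concrete, here is a simplified stand-in that stores multi-byte values in little-endian order, as PCI configuration space requires. It is a sketch, not the DM implementation: `struct toy_pci_vdev` and the `toy_` functions are hypothetical, and only the Vendor ID register offset (0x00) and the virtio vendor ID 0x1AF4 are taken from the PCI convention and the text above.

```c
#include <assert.h>
#include <stdint.h>

#define TOY_PCI_CFG_SIZE 256  /* conventional PCI configuration space size */
#define TOY_PCIR_VENDOR  0x00 /* Vendor ID register offset */

/* Hypothetical virtual device holding only its configuration space. */
struct toy_pci_vdev {
    uint8_t cfgdata[TOY_PCI_CFG_SIZE];
};

/* Write one byte at the given offset. */
static void toy_set_cfgdata8(struct toy_pci_vdev *dev, int offset, uint8_t val)
{
    assert(offset >= 0 && offset <= TOY_PCI_CFG_SIZE - 1);
    dev->cfgdata[offset] = val;
}

/* Write two bytes, least significant byte first. */
static void toy_set_cfgdata16(struct toy_pci_vdev *dev, int offset, uint16_t val)
{
    assert(offset >= 0 && offset <= TOY_PCI_CFG_SIZE - 2);
    dev->cfgdata[offset] = (uint8_t)(val & 0xff);
    dev->cfgdata[offset + 1] = (uint8_t)(val >> 8);
}

/* Read one byte at the given offset. */
static uint8_t toy_get_cfgdata8(const struct toy_pci_vdev *dev, int offset)
{
    assert(offset >= 0 && offset <= TOY_PCI_CFG_SIZE - 1);
    return dev->cfgdata[offset];
}

/* Read two bytes, least significant byte first. */
static uint16_t toy_get_cfgdata16(const struct toy_pci_vdev *dev, int offset)
{
    assert(offset >= 0 && offset <= TOY_PCI_CFG_SIZE - 2);
    return (uint16_t)(dev->cfgdata[offset] | (dev->cfgdata[offset + 1] << 8));
}
```

With these helpers, a backend's initialization code would set the Vendor ID with `toy_set_cfgdata16(&dev, TOY_PCIR_VENDOR, 0x1AF4)` before the guest enumerates the device.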

VBS APIs¶

The VBS APIs are exported by VBS related modules, including VBS, DM, and SOS kernel modules. They can be classified into VBS-U and VBS-K APIs listed as follows.

VBS-U APIs¶

These APIs provided by VBS-U are callbacks to be registered to DM, and the virtio framework within DM will invoke them appropriately.

struct virtio_ops

Virtio specific operation functions for this type of virtio device.

Public Members

- const char *name: name of driver (for diagnostics)
- int nvq: number of virtual queues
- size_t cfgsize: size of dev-specific config regs
- void (*reset)(void *): called on virtual device reset
- void (*qnotify)(void *, struct virtio_vq_info *): called on QNOTIFY if no VQ notify
- int (*cfgread)(void *, int, int, uint32_t *): to read config regs
- int (*cfgwrite)(void *, int, int, uint32_t): to write config regs
- void (*apply_features)(void *, uint64_t): to apply negotiated features
- void (*set_status)(void *, uint64_t): called to set device status

uint64_t virtio_pci_read(struct vmctx *ctx, int vcpu, struct pci_vdev *dev, int baridx, uint64_t offset, int size)

Handle PCI configuration space reads.

Handle virtio standard register reads, and dispatch other reads to actual virtio device driver.

Return

Register value.

Parameters

- ctx: Pointer to struct vmctx representing VM context.
- vcpu: VCPU ID.
- dev: Pointer to struct pci_vdev which emulates a PCI device.
- baridx: Which BAR[0..5] to use.
- offset: Register offset in bytes within a BAR region.
- size: Access range in bytes.

void virtio_pci_write(struct vmctx *ctx, int vcpu, struct pci_vdev *dev, int baridx, uint64_t offset, int size, uint64_t value)

Handle PCI configuration space writes.

Handle virtio standard register writes, and dispatch other writes to actual virtio device driver.

Return

None

Parameters

- ctx: Pointer to struct vmctx representing VM context.
- vcpu: VCPU ID.
- dev: Pointer to struct pci_vdev which emulates a PCI device.
- baridx: Which BAR[0..5] to use.
- offset: Register offset in bytes within a BAR region.
- size: Access range in bytes.
- value: Data value to be written into register.

void virtio_dev_error(struct virtio_base *base)

Indicate the device has experienced an error.

This is called when the device has experienced an error from which it cannot recover. DEVICE_NEEDS_RESET is set in the device status register and a config change interrupt is sent to the guest driver.

Return

None

Parameters

- base: Pointer to struct virtio_base.

int virtio_interrupt_init(struct virtio_base *base, int use_msix)

Initialize MSI-X vector capabilities if we’re to use MSI-X, or MSI capabilities if not.

Wrapper function for virtio_intr_init() for cases where we directly use BAR 1 for MSI-X capabilities.

Return

0 on success and non-zero on fail.

Parameters

- base: Pointer to struct virtio_base.
- use_msix: If using MSI-X.

void virtio_linkup(struct virtio_base *base, struct virtio_ops *vops, void *pci_virtio_dev, struct pci_vdev *dev, struct virtio_vq_info *queues, int backend_type)

Link a virtio_base to its constants, the virtio device, and the PCI emulation.

Return

None

Parameters

- base: Pointer to struct virtio_base.
- vops: Pointer to struct virtio_ops.
- pci_virtio_dev: Pointer to instance of certain virtio device.
- dev: Pointer to struct pci_vdev which emulates a PCI device.
- queues: Pointer to struct virtio_vq_info, normally an array.
- backend_type: can be VBSU, VBSK or VHOST

void virtio_reset_dev(struct virtio_base *base)

Reset device (device-wide).

This erases all queues, i.e., all the queues become invalid. However, the internal pointers are not wiped out; only the VQ_ALLOC flag is cleared.

It resets negotiated features to “none”. If MSI-X is enabled, this also resets all the vectors to NO_VECTOR.

Return

None

Parameters

- base: Pointer to struct virtio_base.

void virtio_set_io_bar(struct virtio_base *base, int barnum)

Set I/O BAR (usually 0) to map PCI config registers.

Return

None

Parameters

- base: Pointer to struct virtio_base.
- barnum: Which BAR[0..5] to use.

int virtio_set_modern_bar(struct virtio_base *base, bool use_notify_pio)

Set modern BAR (usually 4) to map PCI config registers.

Set modern MMIO BAR (usually 4) to map virtio 1.0 capabilities and optionally set modern PIO BAR (usually 2) to map notify capability. This interface is only valid for modern virtio.

Return

0 on success and non-zero on fail.

Parameters

- base: Pointer to struct virtio_base.
- use_notify_pio: Whether to use PIO for the notify capability.

int virtio_pci_modern_cfgread(struct vmctx *ctx, int vcpu, struct pci_vdev *dev, int coff, int bytes, uint32_t *rv)

Handle PCI configuration space reads.

Handle virtio PCI configuration space reads. Only the specific registers that need special operation are handled in this callback. For others, just fall back to the PCI core. This interface is only valid for virtio modern.

Return

0 on handled and non-zero on non-handled.

Parameters

- ctx: Pointer to struct vmctx representing VM context.
- vcpu: VCPU ID.
- dev: Pointer to struct pci_vdev which emulates a PCI device.
- coff: Register offset in bytes within PCI configuration space.
- bytes: Access range in bytes.
- rv: The value returned as read.

int virtio_pci_modern_cfgwrite(struct vmctx *ctx, int vcpu, struct pci_vdev *dev, int coff, int bytes, uint32_t val)

Handle PCI configuration space writes.

Handle virtio PCI configuration space writes. Only the specific registers that need special operation are handled in this callback. For others, just fall back to the PCI core. This interface is only valid for virtio modern.

Return

0 on handled and non-zero on non-handled.

Parameters

- ctx: Pointer to struct vmctx representing VM context.
- vcpu: VCPU ID.
- dev: Pointer to struct pci_vdev which emulates a PCI device.
- coff: Register offset in bytes within PCI configuration space.
- bytes: Access range in bytes.
- val: The value to write.

static void virtio_config_changed(struct virtio_base *vb)

Deliver a config changed interrupt to guest.

MSI-X or a generic MSI interrupt with config changed event.

Return

None

Parameters

- vb: Pointer to struct virtio_base.
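The callback style of the VBS-U APIs can be sketched with a reduced mirror of the operations table. `struct toy_virtio_ops` copies only a subset of the members listed above for `struct virtio_ops`; the toy device, its callbacks, and `toy_framework_cfgread()` are hypothetical and do not reproduce the DM code.

```c
#include <stddef.h>
#include <stdint.h>

/* Reduced mirror of the operations table: a subset of the listed members. */
struct toy_virtio_ops {
    const char *name;    /* name of driver (for diagnostics) */
    int         nvq;     /* number of virtual queues */
    size_t      cfgsize; /* size of device-specific config registers */
    void (*reset)(void *);
    int  (*cfgread)(void *, int, int, uint32_t *);
    int  (*cfgwrite)(void *, int, int, uint32_t);
};

/* Toy device with a single 32-bit device-specific config register. */
struct toy_dev {
    uint32_t cfg;
    int      resets;
};

static void toy_reset(void *p)
{
    struct toy_dev *d = p;
    d->cfg = 0;
    d->resets++;
}

static int toy_cfgread(void *p, int offset, int size, uint32_t *val)
{
    struct toy_dev *d = p;
    if (offset != 0 || size != 4)
        return -1;
    *val = d->cfg;
    return 0;
}

static int toy_cfgwrite(void *p, int offset, int size, uint32_t val)
{
    struct toy_dev *d = p;
    if (offset != 0 || size != 4)
        return -1;
    d->cfg = val;
    return 0;
}

/* The device owner fills in the table once and hands it to the framework. */
static const struct toy_virtio_ops toy_ops = {
    .name = "toy", .nvq = 1, .cfgsize = sizeof(uint32_t),
    .reset = toy_reset, .cfgread = toy_cfgread, .cfgwrite = toy_cfgwrite,
};

/* Framework side: validate the access, then dispatch to the device callback. */
static int toy_framework_cfgread(const struct toy_virtio_ops *ops, void *dev,
                                 int offset, int size, uint32_t *val)
{
    if (ops->cfgread == NULL || offset < 0 || size <= 0 ||
        (size_t)offset + (size_t)size > ops->cfgsize)
        return -1;
    return ops->cfgread(dev, offset, size, val);
}
```

The design point is that the framework handles the standard registers itself and only reaches into the table for device-specific behavior, so each new device contributes a table of callbacks rather than a full PCI emulation.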

VBS-K APIs¶

The VBS-K APIs are exported by VBS-K related modules. Users could use the following APIs to implement their VBS-K modules.

APIs provided by DM¶

int vbs_kernel_reset(int fd)

Virtio kernel module reset.

Return

0 on OK and non-zero on error.

Parameters

- fd: File descriptor representing virtio backend in kernel module.

int vbs_kernel_start(int fd, struct vbs_dev_info *dev, struct vbs_vqs_info *vqs)

Virtio kernel module start.

Return

0 on OK and non-zero on error.

Parameters

- fd: File descriptor representing virtio backend in kernel module.
- dev: Pointer to struct vbs_dev_info.
- vqs: Pointer to struct vbs_vqs_info.

int vbs_kernel_stop(int fd)

Virtio kernel module stop.

Return

0 on OK and non-zero on error.

Parameters

- fd: File descriptor representing virtio backend in kernel module.

APIs provided by VBS-K modules in service OS¶

long virtio_dev_init(struct virtio_dev_info * dev, struct virtio_vq_info * vqs, int nvq)

Initialize VBS-K device data structures

Parameters

struct virtio_dev_info * dev- Pointer to VBS-K device data struct

struct virtio_vq_info * vqs- Pointer to VBS-K virtqueue data struct, normally in an array

int nvq- Number of virtqueues this device has

Return

0 on success, <0 on error

long virtio_dev_ioctl(struct virtio_dev_info * dev, unsigned int ioctl, void __user * argp)

VBS-K device’s common ioctl routine

Parameters

struct virtio_dev_info * dev- Pointer to VBS-K device data struct

unsigned int ioctl- Command of ioctl to device

void __user * argp- Data from user space

Return

0 on success, <0 on error

long virtio_vqs_ioctl(struct virtio_dev_info * dev, unsigned int ioctl, void __user * argp)

VBS-K vq’s common ioctl routine

Parameters

struct virtio_dev_info * dev- Pointer to VBS-K device data struct

unsigned int ioctl- Command of ioctl to virtqueue

void __user * argp- Data from user space

Return

0 on success, <0 on error

long virtio_dev_register(struct virtio_dev_info * dev)

Register a VBS-K device to VHM

Parameters

struct virtio_dev_info * dev- Pointer to VBS-K device data struct

Description

Each VBS-K device will be registered as a VHM client, with the information including “kick” register location, callback, etc.

Return

0 on success, <0 on error

long virtio_dev_deregister(struct virtio_dev_info * dev)

Unregister a VBS-K device from VHM

Parameters

struct virtio_dev_info * dev- Pointer to VBS-K device data struct

Description

Destroy the client corresponding to the VBS-K device specified.

Return

0 on success, <0 on error

int virtio_vqs_index_get(struct virtio_dev_info * dev, unsigned long * ioreqs_map, int * vqs_index, int max_vqs_index)

Get the indexes of the virtqueues that the frontend kicks

Parameters

struct virtio_dev_info * dev- Pointer to VBS-K device data struct

unsigned long * ioreqs_map- Bitmap of the requests that need to be handled, provided by VHM

int * vqs_index- Array to store the vq indexes

int max_vqs_index- Size of the vqs_index array

Description

This API is normally called in the VBS-K device’s callback function, to get the value written to the “kick” register by the frontend.

Return

Number of vq requests

long virtio_dev_reset(struct virtio_dev_info * dev)

Reset a VBS-K device

Parameters

struct virtio_dev_info * dev- Pointer to VBS-K device data struct

Return

0 on success, <0 on error

VHOST APIS¶

APIs provided by DM¶

int vhost_dev_init(struct vhost_dev *vdev, struct virtio_base *base, int fd, int vq_idx, uint64_t vhost_features, uint64_t vhost_ext_features, uint32_t busyloop_timeout)

vhost_dev initialization.

This interface is called to initialize the vhost_dev. It must be called before the actual feature negotiation with the guest OS starts.

Return

0 on success and -1 on failure.

Parameters

- vdev: Pointer to struct vhost_dev.
- base: Pointer to struct virtio_base.
- fd: fd of the vhost chardev.
- vq_idx: The first virtqueue which would be used by this vhost dev.
- vhost_features: Subset of vhost features which would be enabled.
- vhost_ext_features: Specific vhost internal features to be enabled.
- busyloop_timeout: Busy loop timeout in us.

int vhost_dev_deinit(struct vhost_dev *vdev)

vhost_dev cleanup.

This interface is called to cleanup the vhost_dev.

Return

0 on success and -1 on failure.

Parameters

- vdev: Pointer to struct vhost_dev.

Linux vhost IOCTLs¶

#define VHOST_GET_FEATURES _IOR(VHOST_VIRTIO, 0x00, __u64)- This IOCTL is used to get the feature flags supported by the vhost kernel driver.

#define VHOST_SET_FEATURES _IOW(VHOST_VIRTIO, 0x00, __u64)- This IOCTL is used to set the feature flags to be enabled in the vhost kernel driver.

#define VHOST_SET_OWNER _IO(VHOST_VIRTIO, 0x01)- This IOCTL is used to set current process as the exclusive owner of the vhost char device. It must be called before any other vhost commands.

#define VHOST_RESET_OWNER _IO(VHOST_VIRTIO, 0x02)- This IOCTL is used to give up the ownership of the vhost char device.

#define VHOST_SET_MEM_TABLE _IOW(VHOST_VIRTIO, 0x03, struct vhost_memory)- This IOCTL is used to convey the guest OS memory layout to vhost kernel driver.

#define VHOST_SET_VRING_NUM _IOW(VHOST_VIRTIO, 0x10, struct vhost_vring_state)- This IOCTL is used to set the number of descriptors in virtio ring. It cannot be modified while the virtio ring is running.

#define VHOST_SET_VRING_ADDR _IOW(VHOST_VIRTIO, 0x11, struct vhost_vring_addr)- This IOCTL is used to set the address of the virtio ring.

#define VHOST_SET_VRING_BASE _IOW(VHOST_VIRTIO, 0x12, struct vhost_vring_state)- This IOCTL is used to set the base value where virtqueue looks for available descriptors.

#define VHOST_GET_VRING_BASE _IOWR(VHOST_VIRTIO, 0x12, struct vhost_vring_state)- This IOCTL is used to get the base value where virtqueue looks for available descriptors.

#define VHOST_SET_VRING_KICK _IOW(VHOST_VIRTIO, 0x20, struct vhost_vring_file)- This IOCTL is used to set the eventfd on which vhost can poll for guest virtqueue kicks.

#define VHOST_SET_VRING_CALL _IOW(VHOST_VIRTIO, 0x21, struct vhost_vring_file)- This IOCTL is used to set the eventfd which is used by vhost to inject virtual interrupts.
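The ordering constraint on these IOCTLs (VHOST_SET_OWNER before any other command, then feature negotiation) can be sketched as follows. This is a minimal illustration built on the Linux `<linux/vhost.h>` header, not the ACRN DM code; the function name vhost_open_and_negotiate and the idea of masking the supported features with a caller-provided `wanted` set are assumptions made for the example.

```c
#include <fcntl.h>
#include <stdint.h>
#include <sys/ioctl.h>
#include <unistd.h>
#include <linux/vhost.h>

/* Open a vhost char device, claim ownership, and negotiate features.
 * Returns the open fd on success (caller closes it) or -1 on failure.
 * On success, *acked holds the features that were actually enabled. */
static int vhost_open_and_negotiate(const char *path, uint64_t wanted,
                                    uint64_t *acked)
{
    uint64_t supported = 0;
    int fd = open(path, O_RDWR);

    if (fd < 0)
        return -1;

    /* Must come first: makes this process the exclusive owner. */
    if (ioctl(fd, VHOST_SET_OWNER) < 0)
        goto fail;

    /* Ask the kernel driver which feature bits it supports. */
    if (ioctl(fd, VHOST_GET_FEATURES, &supported) < 0)
        goto fail;

    /* Enable only the bits that both sides agree on. */
    *acked = supported & wanted;
    if (ioctl(fd, VHOST_SET_FEATURES, acked) < 0)
        goto fail;

    return fd;

fail:
    close(fd);
    return -1;
}
```

On a typical Linux system the path would be a vhost char device such as /dev/vhost-net; after this step a backend would continue with VHOST_SET_MEM_TABLE and the per-vring IOCTLs listed above.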

VHM eventfd IOCTLs¶

-

struct

acrn_ioeventfd¶

#define IC_EVENT_IOEVENTFD _IC_ID(IC_ID, IC_ID_EVENT_BASE + 0x00)- This IOCTL is used to register/unregister ioeventfd with appropriate address, length and data value.

-

struct

acrn_irqfd¶ Public Members

-

int32_t

fd¶ file descriptor of the eventfd of this irqfd

-

uint32_t

flags¶ flag for irqfd ioctl

-

struct acrn_msi_entry

msi¶ MSI interrupt to be injected

#define IC_EVENT_IRQFD _IC_ID(IC_ID, IC_ID_EVENT_BASE + 0x01)- This IOCTL is used to register/unregister irqfd with appropriate MSI information.
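Both structures refer to a Linux eventfd. As a minimal sketch of that underlying signaling primitive only (the VHM registration IOCTLs themselves are not shown), the helpers below signal and drain an eventfd using the standard eventfd(2) interface. The helper names signal_event and drain_events are hypothetical.

```c
#include <stdint.h>
#include <sys/eventfd.h>
#include <sys/types.h>
#include <unistd.h>

/* Producer side: add one event to the eventfd counter, as a kick or an
 * interrupt request would. Returns 0 on success, -1 on error. */
static int signal_event(int efd)
{
    uint64_t one = 1;

    return write(efd, &one, sizeof(one)) == (ssize_t)sizeof(one) ? 0 : -1;
}

/* Consumer side: read and reset the counter. Returns the number of
 * events accumulated since the last read, or 0 if none are pending
 * (for a non-blocking eventfd) or on error. */
static uint64_t drain_events(int efd)
{
    uint64_t count = 0;

    if (read(efd, &count, sizeof(count)) != (ssize_t)sizeof(count))
        return 0;
    return count;
}
```

Because the counter accumulates, several signals raised before the consumer runs are delivered as a single wakeup carrying the total count.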

VQ APIs¶

The virtqueue APIs, or VQ APIs, are used by a BE device driver to access the virtqueues shared by the FE driver. The VQ APIs abstract the details of virtqueues so that users don’t need to worry about the data structures within the virtqueues. In addition, the VQ APIs are designed to be identical between VBS-U and VBS-K, so that users don’t need to learn different APIs when implementing BE drivers based on VBS-U and VBS-K.

-

static void

vq_interrupt(struct virtio_base *vb, struct virtio_vq_info *vq) Deliver an interrupt to guest on the given virtqueue.

The interrupt could be MSI-X or a generic MSI interrupt.

- Return

- None

- Parameters

vb: Pointer to struct virtio_base.

vq: Pointer to struct virtio_vq_info.

-

int

vq_getchain(struct virtio_vq_info *vq, uint16_t *pidx, struct iovec *iov, int n_iov, uint16_t *flags) Walk through the chain of descriptors involved in a request and put them into a given iov[] array.

- Return

- number of descriptors.

- Parameters

vq: Pointer to struct virtio_vq_info.

pidx: Pointer to available ring position.

iov: Pointer to iov[] array prepared by caller.

n_iov: Size of iov[] array.

flags: Pointer to a uint16_t array which will contain flag of each descriptor.
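The chain walk that vq_getchain() performs can be illustrated with a simplified, self-contained model. This is not the ACRN implementation: it treats descriptor addresses as host pointers, skips guest address translation and indirect descriptors, and uses the hypothetical names model_desc and model_getchain. The descriptor layout and the flag values (NEXT = 1, WRITE = 2) follow the virtio specification.

```c
#include <stdint.h>
#include <sys/uio.h>

#define MODEL_DESC_F_NEXT  1 /* descriptor continues via the next field */
#define MODEL_DESC_F_WRITE 2 /* buffer is device-writable */

/* Split-ring descriptor layout as defined by the virtio specification. */
struct model_desc {
    uint64_t addr;
    uint32_t len;
    uint16_t flags;
    uint16_t next;
};

/* Follow the chain starting at head and fill iov[] (and flags[], if not
 * NULL). Returns the number of descriptors in the chain, or -1 if the
 * chain leaves the table or does not fit into n_iov entries. */
static int model_getchain(const struct model_desc *table, uint16_t table_size,
                          uint16_t head, struct iovec *iov, int n_iov,
                          uint16_t *flags)
{
    uint16_t i = head;
    int n = 0;

    for (;;) {
        if (i >= table_size || n >= n_iov)
            return -1; /* bad index, or chain too long (also stops loops) */

        iov[n].iov_base = (void *)(uintptr_t)table[i].addr;
        iov[n].iov_len = table[i].len;
        if (flags)
            flags[n] = table[i].flags;
        n++;

        if (!(table[i].flags & MODEL_DESC_F_NEXT))
            return n; /* last descriptor of this request */
        i = table[i].next;
    }
}
```

Bounding the walk by n_iov also guards against a malformed chain that loops back on itself.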

-

void

vq_retchain(struct virtio_vq_info *vq) Return the currently-first request chain back to the available ring.

- Return

- None

- Parameters

vq: Pointer to struct virtio_vq_info.

-

void

vq_relchain(struct virtio_vq_info *vq, uint16_t idx, uint32_t iolen) Return specified request chain to the guest, setting its I/O length to the provided value.

- Return

- None

- Parameters

vq: Pointer to struct virtio_vq_info.

idx: Available ring position of the chain, as returned by vq_getchain().

iolen: Number of data bytes to be returned to frontend.
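Returning a chain to the guest amounts to publishing an entry in the used ring. The sketch below models that step under stated assumptions: it follows the split-ring used-element layout from the virtio specification, uses a fixed queue size, and is not the ACRN code. The names model_used_ring, model_relchain, and MODEL_QSIZE are hypothetical.

```c
#include <stdatomic.h>
#include <stdint.h>

#define MODEL_QSIZE 8 /* assumption: queue size, a power of two */

/* Used-ring element layout as defined by the virtio specification. */
struct model_used_elem {
    uint32_t id;  /* head index of the completed descriptor chain */
    uint32_t len; /* number of bytes written into the chain's buffers */
};

struct model_used_ring {
    uint16_t flags;
    uint16_t idx; /* free-running counter; wraps naturally at 65536 */
    struct model_used_elem ring[MODEL_QSIZE];
};

/* Publish one completed chain: fill the next used slot, then advance
 * idx so that the consumer sees the element before the new index. */
static void model_relchain(struct model_used_ring *used, uint16_t head,
                           uint32_t iolen)
{
    struct model_used_elem *e = &used->ring[used->idx % MODEL_QSIZE];

    e->id = head;
    e->len = iolen;

    /* Order the element stores before the index update. */
    atomic_thread_fence(memory_order_release);
    used->idx++;
}
```

The index is never reset; the slot is always derived from idx modulo the queue size, which is why the counter can wrap without special handling.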

-

void

vq_endchains(struct virtio_vq_info *vq, int used_all_avail) Driver has finished processing “available” chains and calling vq_relchain on each one.

If the driver used all the available chains, used_all_avail needs to be set to 1.

- Return

- None

- Parameters

vq: Pointer to struct virtio_vq_info.

used_all_avail: Flag indicating if driver used all available chains.

The following example shows the typical logic a BE driver uses to handle requests from an FE driver.

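    static void BE_callback(struct pci_virtio_xxx *pv, struct virtio_vq_info *vq)
    {
        struct iovec iov;   /* single-segment request buffer */
        uint16_t idx;       /* chain position, set by vq_getchain() */
        uint32_t len;       /* bytes returned to the frontend */

        while (vq_has_descs(vq)) {
            vq_getchain(vq, &idx, &iov, 1, NULL);

            /* handle requests in iov; request_handle_proc() is a placeholder */
            len = request_handle_proc(pv, &iov);

            /* Release this chain and handle more */
            vq_relchain(vq, idx, len);
        }

        /* Generate interrupt if appropriate. 1 means ring empty */
        vq_endchains(vq, 1);
    }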

Supported Virtio Devices¶

All the BE virtio drivers are implemented using the ACRN virtio APIs, and the FE drivers reuse the standard Linux FE virtio drivers. Devices whose FE drivers are available in the Linux kernel should use the standard virtio Vendor ID/Device ID and Subsystem Vendor ID/Subsystem Device ID. Other devices within ACRN use the temporary IDs listed in the following table.

| virtio device | Vendor ID | Device ID | Subvendor ID | Subdevice ID |
|---|---|---|---|---|
| RPMB | 0x8086 | 0x8601 | 0x8086 | 0xFFFF |
| HECI | 0x8086 | 0x8602 | 0x8086 | 0xFFFE |
| audio | 0x8086 | 0x8603 | 0x8086 | 0xFFFD |
| IPU | 0x8086 | 0x8604 | 0x8086 | 0xFFFC |
| TSN/AVB | 0x8086 | 0x8605 | 0x8086 | 0xFFFB |
| hyper_dmabuf | 0x8086 | 0x8606 | 0x8086 | 0xFFFA |
| HDCP | 0x8086 | 0x8607 | 0x8086 | 0xFFF9 |
| COREU | 0x8086 | 0x8608 | 0x8086 | 0xFFF8 |

The following sections introduce the status of virtio devices currently supported in ACRN.