Memory Management High-Level Design¶

This document describes memory management for the ACRN hypervisor.

Overview¶

The hypervisor (HV) virtualizes real physical memory so an unmodified OS (such as Linux or Android) that is running in a virtual machine can manage its own contiguous physical memory. The HV uses virtual-processor identifiers (VPIDs) and the extended page-table mechanism (EPT) to translate a guest-physical address into a host-physical address. The HV enables EPT and VPID hardware virtualization features, establishes EPT page tables for Service and User VMs, and provides EPT page tables operation interfaces to others.

In the ACRN hypervisor system, there are a few different memory spaces to consider. From the hypervisor’s point of view:

Host Physical Address (HPA): the native physical address space.

Host Virtual Address (HVA): the native virtual address space based on an MMU. A page table is used to translate from HVA to HPA spaces.

From the Guest OS running on a hypervisor:

Guest Physical Address (GPA): the guest physical address space from a virtual machine. GPA to HPA translation is usually based on an MMU-like hardware module (EPT on x86), and is associated with a page table.

Guest Virtual Address (GVA): the guest virtual address space from a virtual machine based on a vMMU.

Figure 94 ACRN Memory Mapping Overview¶

Figure 94 provides an overview of the ACRN system memory mapping, showing:

GVA to GPA mapping based on vMMU on a vCPU in a VM

GPA to HPA mapping based on EPT for a VM in the hypervisor

HVA to HPA mapping based on MMU in the hypervisor

This document illustrates the memory management infrastructure for the ACRN hypervisor and how it handles the different memory space views inside the hypervisor and from a VM:

How ACRN hypervisor manages host memory (HPA/HVA)

How ACRN hypervisor manages the Service VM guest memory (HPA/GPA)

How ACRN hypervisor and the Service VM Device Model (DM) manage the User VM guest memory (HPA/GPA)

Hypervisor Physical Memory Management¶

In ACRN, the HV initializes MMU page tables to manage all physical memory and then switches to the new MMU page tables. After the MMU page tables are initialized at the platform initialization stage, they are not updated again except when set_paging_supervisor/nx/x is called. However, the memory region updated by set_paging_supervisor/nx/x must not be accessed by the ACRN hypervisor beforehand, because such an access could cache the old mapping in the TLB, and there is no TLB flush mechanism for ACRN HV memory.

Hypervisor Physical Memory Layout - E820¶

The ACRN hypervisor is the primary owner for managing system memory. Typically, the boot firmware (e.g., EFI) passes the platform physical memory layout, in the form of an E820 table, to the hypervisor. The ACRN hypervisor does its memory management based on this table using 4-level paging.

The BIOS/bootloader firmware (e.g., EFI) passes the E820 table through a multiboot protocol. This table contains the original memory layout for the platform.

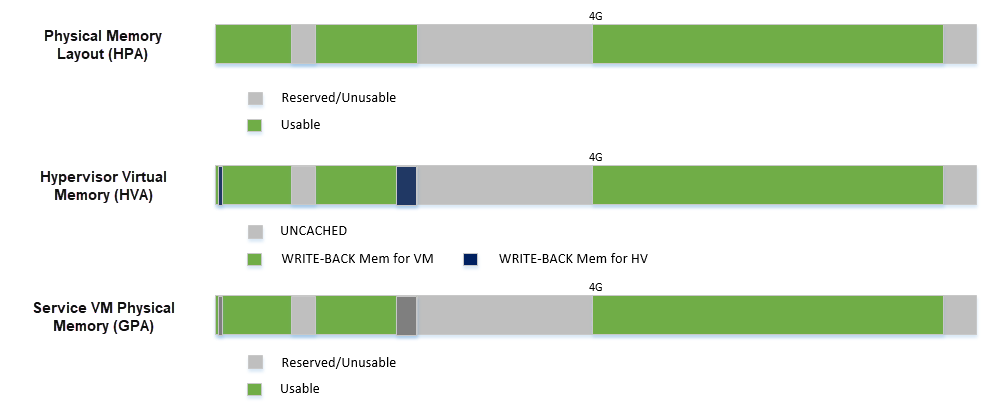

Figure 95 Physical Memory Layout Example¶

Figure 95 is an example of the physical memory layout based on a simple platform E820 table.
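As an illustration of how an E820 table can drive later decisions, the sketch below scans a table and derives the two RAM limits that the mapping policy refers to as low32_max_ram and high64_max_ram. The entry layout (base, length, type) and the type value 1 for usable RAM follow the common E820 convention; the struct and function names are hypothetical and are not taken from the ACRN sources.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define E820_TYPE_RAM 1U            /* usable RAM in the E820 convention */
#define MEM_4G        (1ULL << 32)

/* Hypothetical entry layout for illustration: base, length, type. */
struct e820_entry_sketch {
    uint64_t base;
    uint64_t length;
    uint32_t type;
};

struct ram_limits_sketch {
    uint64_t low32_max_ram;   /* end of the highest RAM range below 4G */
    uint64_t high64_max_ram;  /* end of the highest RAM range above 4G */
};

/* Scan the table once and record where RAM ends below and above 4G. */
struct ram_limits_sketch find_ram_limits(const struct e820_entry_sketch *tbl,
                                         size_t n)
{
    /* With no RAM above 4G, [4G, high64_max_ram) is an empty range. */
    struct ram_limits_sketch lim = { 0ULL, MEM_4G };

    assert(tbl != NULL || n == 0U);
    for (size_t i = 0U; i < n; i++) {
        if (tbl[i].type != E820_TYPE_RAM) {
            continue;  /* reserved, ACPI, and other non-RAM entries */
        }
        uint64_t end = tbl[i].base + tbl[i].length;
        if (tbl[i].base < MEM_4G) {
            /* Clamp entries that straddle the 4G boundary. */
            uint64_t low_end = (end < MEM_4G) ? end : MEM_4G;
            if (low_end > lim.low32_max_ram) {
                lim.low32_max_ram = low_end;
            }
        }
        if (end > MEM_4G && end > lim.high64_max_ram) {
            lim.high64_max_ram = end;
        }
    }
    return lim;
}
```

For a table with RAM at [0, 0x9F000), [1M, 2G), and [4G, 6G), this returns 2G as the low limit and 6G as the high limit.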

Hypervisor Memory Initialization¶

The ACRN hypervisor runs in paging mode. After the bootstrap processor (BSP) gets the platform E820 table, the BSP creates its MMU page table based on it. This is done by the function init_paging(). After an application processor (AP) receives the IPI CPU startup interrupt, it uses the MMU page tables created by the BSP. To bring the memory access rights into effect, some other APIs are provided: enable_paging enables IA32_EFER.NXE and CR0.WP, enable_smep enables CR4.SMEP, and enable_smap enables CR4.SMAP.
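The control bits named above sit at fixed positions defined by the Intel SDM. The sketch below models them as plain values so that it can run in user space; it does not touch real registers, and the struct and function names are hypothetical rather than the ACRN implementation.

```c
#include <assert.h>
#include <stdint.h>

/* Bit positions as defined by the Intel SDM. */
#define EFER_NXE  (1ULL << 11)  /* IA32_EFER.NXE: execute-disable enable */
#define CR0_WP    (1ULL << 16)  /* CR0.WP: write protect in supervisor mode */
#define CR4_SMEP  (1ULL << 20)  /* CR4.SMEP: supervisor-mode execution prevention */
#define CR4_SMAP  (1ULL << 21)  /* CR4.SMAP: supervisor-mode access prevention */

static_assert((CR4_SMEP & CR4_SMAP) == 0ULL, "SMEP and SMAP are distinct bits");

/* Software model of the three registers involved (illustration only). */
struct ctrl_regs_sketch {
    uint64_t efer;
    uint64_t cr0;
    uint64_t cr4;
};

/* Return the register values after all three enable steps have run. */
struct ctrl_regs_sketch apply_paging_protections(struct ctrl_regs_sketch r)
{
    r.efer |= EFER_NXE;  /* enable_paging: honor the NX bit in page tables */
    r.cr0  |= CR0_WP;    /* enable_paging: read-only pages bind ring 0 too */
    r.cr4  |= CR4_SMEP;  /* enable_smep */
    r.cr4  |= CR4_SMAP;  /* enable_smap */
    return r;
}
```

On real hardware these updates require privileged MSR and control-register writes on each processor; the model only shows which bits change.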

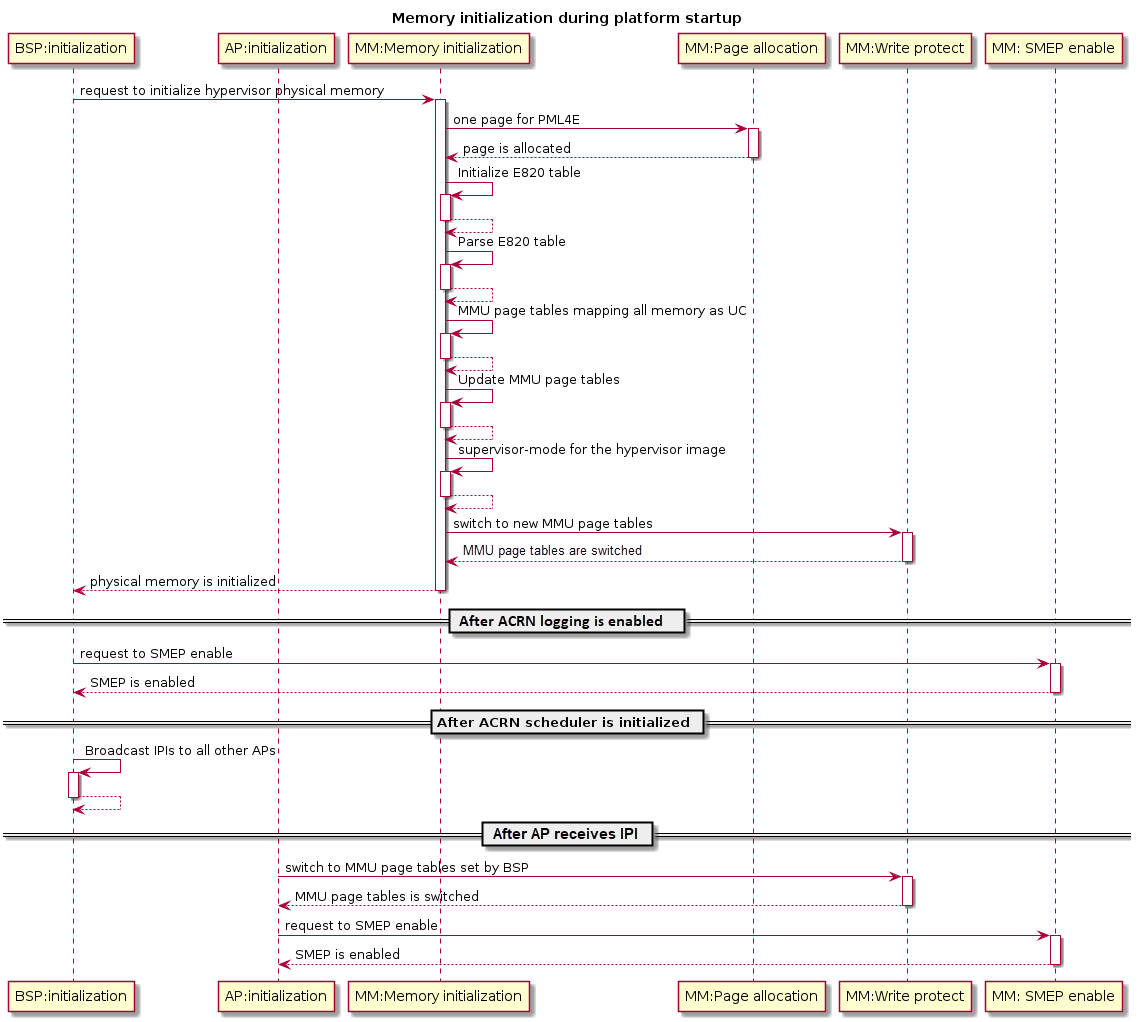

Figure 96 describes the hypervisor memory initialization for the BSP and APs.

Figure 96 Hypervisor Memory Initialization¶

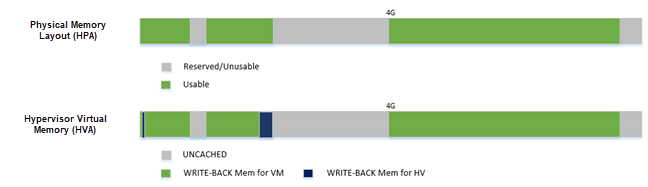

The memory mapping policy is as follows:

Identical mapping (ACRN hypervisor memory could be relocatable in the future)

Map all address spaces with UNCACHED type, read/write, user and execute-disable access right

Remap [0, low32_max_ram) regions to WRITE-BACK type

Remap [4G, high64_max_ram) regions to WRITE-BACK type

Set the paging-structure entries’ U/S flag to supervisor-mode for hypervisor-owned memory (exclude the memory reserved for trusty)

Remove ‘NX’ bit for pages that contain the hv code section
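The policy above can be read as a default followed by a series of overrides. The following sketch computes the resulting attributes for a single address under that reading. All type and field names are hypothetical, the layout values are supplied by the caller, and the trusty exclusion is left out for brevity.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define MEM_4G (1ULL << 32)

enum cache_type_sketch { CACHE_UNCACHED, CACHE_WRITE_BACK };

struct hv_map_attr_sketch {
    enum cache_type_sketch cache;
    bool writable;
    bool user;        /* U/S flag: true means user-mode accessible */
    bool no_execute;  /* NX bit */
};

/* Hypothetical description of the platform layout (illustration only). */
struct hv_layout_sketch {
    uint64_t low32_max_ram;
    uint64_t high64_max_ram;
    uint64_t hv_start, hv_end;      /* hypervisor-owned memory */
    uint64_t code_start, code_end;  /* hv code section */
};

struct hv_map_attr_sketch hv_attr_for(const struct hv_layout_sketch *l,
                                      uint64_t addr)
{
    /* Default: UNCACHED, read/write, user, execute-disable. */
    struct hv_map_attr_sketch a = { CACHE_UNCACHED, true, true, true };

    assert(l != NULL);
    /* RAM below low32_max_ram and in [4G, high64_max_ram) is WRITE-BACK. */
    if (addr < l->low32_max_ram ||
        (addr >= MEM_4G && addr < l->high64_max_ram)) {
        a.cache = CACHE_WRITE_BACK;
    }
    /* Hypervisor-owned memory is supervisor-only. */
    if (addr >= l->hv_start && addr < l->hv_end) {
        a.user = false;
    }
    /* Only the hv code section is executable. */
    if (addr >= l->code_start && addr < l->code_end) {
        a.no_execute = false;
    }
    return a;
}
```

With this layout model, an MMIO address such as 0xFEE00000 keeps the uncached, non-executable default, while an address inside the hv code section comes out write-back, supervisor-only, and executable.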

Figure 97 Hypervisor Virtual Memory Layout¶

Figure 97 above shows:

Hypervisor has a view of and can access all system memory

Hypervisor has an UNCACHED MMIO/PCI hole reserved for accessing devices such as the LAPIC and IOAPIC

Hypervisor has its own memory with WRITE-BACK cache type for its code/data (< 1M part is for secondary CPU reset code)

The hypervisor should use the minimum number of memory pages to map the virtual address space into the physical address space. ACRN therefore only supports mapping linear addresses to 2-MByte or 1-GByte pages; it does not support mapping linear addresses to 4-KByte pages.

If 1GB hugepage can be used for virtual address space mapping, the corresponding PDPT entry shall be set for this 1GB hugepage.

If 1GB hugepage can’t be used for virtual address space mapping and 2MB hugepage can be used, the corresponding PDT entry shall be set for this 2MB hugepage.

If the memory type or access rights of a page are updated, or some virtual address space is deleted, it will lead to splitting of the corresponding page. The hypervisor will still keep using minimum memory pages to map from the virtual address space into the physical address space.

Memory Pages Pool Functions¶

Memory pages pool functions provide static management of one 4KB page-size memory block for each page level for each VM or HV; it is used by the hypervisor to do memory mapping.

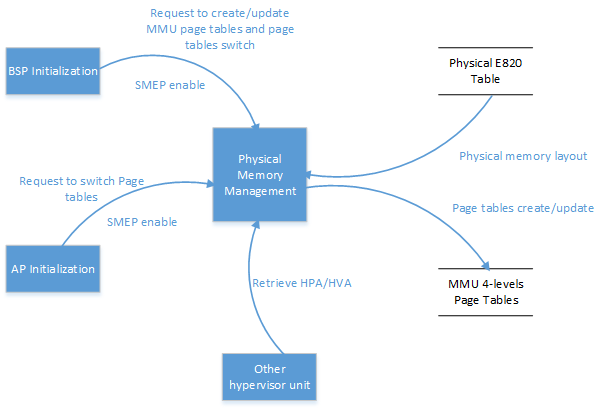

Data Flow Design¶

The physical memory management unit provides the following services to other units: creating and updating the MMU 4-level page tables, switching MMU page tables, enabling SMEP, and retrieving HPAs/HVAs. Figure 98 shows the data flow diagram of physical memory management.

Figure 98 Data Flow of Hypervisor Physical Memory Management¶

Interfaces Design¶

MMU Initialization¶

-

void enable_smep(void)¶

Supervisor-mode execution prevention (SMEP) enable.

- Returns

None

-

void enable_smap(void)¶

Supervisor-mode Access Prevention (SMAP) enable.

- Returns

None

-

void enable_paging(void)¶

MMU paging enable.

- Returns

None

-

void init_paging(void)¶

MMU page tables initialization.

- Returns

None

Address Space Translation¶

-

static inline void *hpa2hva_early(uint64_t x)¶

Translate host-physical address to host-virtual address.

- Parameters

x – [in] The specified host-physical address

- Returns

The translated host-virtual address

-

static inline uint64_t hva2hpa_early(void *x)¶

Translate host-virtual address to host-physical address.

- Parameters

x – [in] The specified host-virtual address

- Returns

The translated host-physical address

-

static inline void *hpa2hva(uint64_t x)¶

Translate host-physical address to host-virtual address.

- Parameters

x – [in] The specified host-physical address

- Returns

The translated host-virtual address

-

static inline uint64_t hva2hpa(const void *x)¶

Translate host-virtual address to host-physical address.

- Parameters

x – [in] The specified host-virtual address

- Returns

The translated host-physical address
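Because the hypervisor uses an identical mapping between HVA and HPA, the translation helpers can be pictured as plain casts. The sketch below assumes a 64-bit host and no relocation offset; the sketch_ prefixed names are hypothetical, and the real helpers may apply an offset if the hypervisor image is relocated.

```c
#include <assert.h>
#include <stdint.h>

static_assert(sizeof(uintptr_t) == sizeof(uint64_t),
              "this sketch assumes a 64-bit host");

/* Identity mapping: the HVA has the same numeric value as the HPA. */
void *sketch_hpa2hva(uint64_t hpa)
{
    return (void *)(uintptr_t)hpa;
}

/* Inverse direction under the same identity-mapping assumption. */
uint64_t sketch_hva2hpa(const void *hva)
{
    return (uint64_t)(uintptr_t)hva;
}
```

Under this assumption a round trip through both helpers returns the original pointer value.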

Hypervisor Memory Virtualization¶

The hypervisor provides a contiguous region of physical memory for the Service VM and each User VM. It also guarantees that the Service and User VMs cannot access the code and internal data in the hypervisor, and that each User VM cannot access the code and internal data of the Service VM and other User VMs.

The hypervisor:

enables EPT and VPID hardware virtualization features

establishes EPT page tables for the Service and User VMs

provides EPT page tables operations services

virtualizes MTRR for Service and User VMs

provides VPID operations services

provides services for address spaces translation between the GPA and HPA

provides services for data transfer between the hypervisor and the virtual machine

Memory Virtualization Capability Checking¶

In the hypervisor, memory virtualization provides an EPT/VPID capability checking service and an EPT hugepage support checking service. Other units must invoke these services before the HV enables memory virtualization and uses EPT hugepages.

Data Transfer Between Different Address Spaces¶

In ACRN, the hypervisor, Service VM, and User VM each use separate memory space management to achieve spatial isolation. Data must still be transferred between these memory spaces in several cases. For example, a Service or User VM may issue a hypercall to request a hypervisor service that involves data transfer, or the hypervisor may perform instruction emulation, in which case the HV needs to read the guest instruction pointer register and fetch the guest instruction data.

Access GPA From Hypervisor¶

When the hypervisor needs to access a GPA range for data transfer, the caller in the guest must make sure that the range is continuous in GPA space. The corresponding HPA range in the hypervisor, however, may be discontinuous (especially for a User VM under the hugetlb allocation mechanism). For example, a 4M GPA range may map to two different 2M huge host-physical pages. The ACRN hypervisor must handle this kind of data transfer by walking the EPT page tables to find the HPA behind each page of the range.
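The page-by-page handling described above can be sketched with a toy model in which a small array stands in for the EPT. Everything here (toy_vm, toy_gpa2hva, toy_copy_from_gpa, the 4KB page size) is hypothetical and exists only to show why the copy has to be split at page boundaries when host pages are not adjacent.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define SKETCH_PAGE_SIZE 4096ULL

/* Toy stand-in for an EPT: backing[i] is the host buffer behind guest
 * page i, or NULL when that guest page is unmapped. */
struct toy_vm {
    uint8_t *backing[4];
    size_t nr_pages;
};

/* Translate one GPA to a host pointer; returns NULL when unmapped. */
static uint8_t *toy_gpa2hva(const struct toy_vm *vm, uint64_t gpa)
{
    uint64_t idx = gpa / SKETCH_PAGE_SIZE;

    if (idx >= vm->nr_pages || vm->backing[idx] == NULL) {
        return NULL;
    }
    return vm->backing[idx] + (gpa % SKETCH_PAGE_SIZE);
}

/* Copy size bytes starting at gpa into h_ptr, one page-sized chunk at a
 * time. Returns 0 on success and -1 if any page in the range is unmapped. */
int toy_copy_from_gpa(const struct toy_vm *vm, void *h_ptr,
                      uint64_t gpa, uint32_t size)
{
    uint8_t *dst = h_ptr;

    assert(vm != NULL && h_ptr != NULL);
    while (size > 0U) {
        const uint8_t *src = toy_gpa2hva(vm, gpa);
        if (src == NULL) {
            return -1;
        }
        /* Never copy past the end of the current guest page, because the
         * next guest page may live somewhere else in host memory. */
        uint64_t room = SKETCH_PAGE_SIZE - (gpa % SKETCH_PAGE_SIZE);
        uint32_t len = (size < room) ? size : (uint32_t)room;

        memcpy(dst, src, len);
        dst += len;
        gpa += len;
        size -= len;
    }
    return 0;
}
```

A copy that starts two bytes before the end of guest page 0 and spans four bytes is served by two memcpy calls, each against a different host buffer.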

Access GVA From Hypervisor¶

When the hypervisor needs to access a GVA range for data transfer, both the GPA range and the HPA range behind it may be discontinuous. The ACRN hypervisor must handle this kind of data transfer by walking both the guest page tables (GVA to GPA) and the EPT page tables (GPA to HPA).

EPT Page Tables Operations¶

The hypervisor should use a minimum of memory pages to map from guest-physical address (GPA) space into host-physical address (HPA) space.

If 1GB hugepage can be used for GPA space mapping, the corresponding EPT PDPT entry shall be set for this 1GB hugepage.

If 1GB hugepage can’t be used for GPA space mapping and 2MB hugepage can be used, the corresponding EPT PDT entry shall be set for this 2MB hugepage.

If both 1GB hugepage and 2MB hugepage can’t be used for GPA space mapping, the corresponding EPT PT entry shall be set.

If the memory type or access rights of a page are updated, or some GPA space is deleted, the corresponding EPT page is split. The hypervisor should still use the minimum number of EPT pages to map the GPA space into the HPA space.
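The page-size rules above amount to a greedy choice at each step of a mapping. The sketch below picks the largest page size that both addresses and the remaining length allow, then counts how many leaf entries a region needs. It assumes that the hardware supports 1GB EPT pages and that all inputs are 4KB aligned; the function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define SZ_4K (1ULL << 12)
#define SZ_2M (1ULL << 21)
#define SZ_1G (1ULL << 30)

/* Largest page usable at this point: both addresses must be aligned to the
 * page size and at least one full page must remain to be mapped. */
uint64_t pick_ept_page_size(uint64_t gpa, uint64_t hpa, uint64_t remaining)
{
    if (((gpa | hpa) % SZ_1G) == 0ULL && remaining >= SZ_1G) {
        return SZ_1G;  /* EPT PDPT entry */
    }
    if (((gpa | hpa) % SZ_2M) == 0ULL && remaining >= SZ_2M) {
        return SZ_2M;  /* EPT PDT entry */
    }
    return SZ_4K;      /* EPT PT entry */
}

/* Number of leaf entries needed to map [gpa, gpa + size) to hpa. */
uint64_t count_ept_leaf_entries(uint64_t gpa, uint64_t hpa, uint64_t size)
{
    uint64_t n = 0ULL;

    /* Sketch precondition: everything is 4KB aligned. */
    assert(((gpa | hpa | size) % SZ_4K) == 0ULL);
    while (size > 0ULL) {
        uint64_t pg = pick_ept_page_size(gpa, hpa, size);

        gpa += pg;
        hpa += pg;
        size -= pg;
        n++;
    }
    return n;
}
```

A region of 1GB + 2MB + 4KB that starts at a 1GB-aligned address needs only three leaf entries under this policy, one at each level.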

The hypervisor provides an EPT guest-physical mappings adding service, EPT guest-physical mappings modifying/deleting service, and EPT guest-physical mappings invalidation service.

Virtual MTRR¶

In ACRN, the hypervisor virtualizes only the fixed-range MTRRs (0~1MB). The HV sets the fixed-range MTRRs to Write-Back for a User VM, while the Service VM reads the native fixed-range MTRRs set by the BIOS.

If the guest physical address is not in the fixed range (0~1MB), the hypervisor uses the default memory type in the MTRR (Write-Back).

When the guest disables MTRRs, the HV sets the guest address memory type as UC.

If the guest physical address is in the fixed range (0~1MB), the HV sets the memory type according to the fixed virtual MTRRs.

When the guest enables MTRRs, the MTRRs have no direct effect on the memory type used for accesses to GPAs. Instead, the HV intercepts accesses to the MTRR MSRs through the MSR access VM exit and updates the memory type field of the EPT PTEs according to the memory type selected by the MTRRs. This EPT memory type is combined with the PAT entry in the PAT MSR (selected by the PAT, PCD, and PWT bits from the guest paging structures) to determine the effective memory type.
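The selection rules in this section can be sketched as a small lookup. The fixed-range layout (8 sub-ranges of 64KB, 16 of 16KB, and 64 of 4KB, covering 0 to 1MB) and the type encodings (UC = 0, WB = 6) come from the Intel SDM. The struct and function names are hypothetical, and checking the enable flag first is an assumption about how the rules combine.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* MTRR memory type encodings from the Intel SDM. */
#define MTRR_TYPE_UC       0U
#define MTRR_TYPE_WB       6U
#define FIXED_RANGE_END    0x100000ULL  /* fixed-range MTRRs cover 0~1MB */
#define NR_FIXED_SUBRANGES 88U          /* 8 + 16 + 64 sub-ranges */

/* Index of the fixed sub-range that contains gpa. */
uint32_t fixed_mtrr_index(uint64_t gpa)
{
    assert(gpa < FIXED_RANGE_END);
    if (gpa < 0x80000ULL) {
        return (uint32_t)(gpa >> 16);                       /* 8 x 64KB */
    }
    if (gpa < 0xC0000ULL) {
        return 8U + (uint32_t)((gpa - 0x80000ULL) >> 14);   /* 16 x 16KB */
    }
    return 24U + (uint32_t)((gpa - 0xC0000ULL) >> 12);      /* 64 x 4KB */
}

/* Hypothetical per-vCPU virtual MTRR state (illustration only). */
struct vmtrr_sketch {
    bool enabled;                               /* guest has MTRRs enabled */
    uint8_t fixed_type[NR_FIXED_SUBRANGES];     /* type per fixed sub-range */
};

/* Memory type the HV would select for gpa under the rules in this section. */
uint8_t vmtrr_mem_type(const struct vmtrr_sketch *v, uint64_t gpa)
{
    assert(v != NULL);
    if (!v->enabled) {
        return MTRR_TYPE_UC;                    /* MTRRs disabled: UC */
    }
    if (gpa < FIXED_RANGE_END) {
        return v->fixed_type[fixed_mtrr_index(gpa)];
    }
    return MTRR_TYPE_WB;                        /* default type outside 0~1MB */
}
```

For example, GPA 0xA0000 falls into sub-range 16 (the first 16KB block after 0x80000 plus eight), so its type comes from fixed_type[16].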

VPID Operations¶

Virtual-processor identifier (VPID) is a hardware feature that optimizes TLB management. When VPID is enabled, the hardware tags the TLB entries of a logical processor so that it can cache information for multiple linear-address spaces. VMX transitions can then retain cached information when the logical processor switches to a different address space, avoiding unnecessary TLB flushes.

In ACRN, a unique VPID must be allocated for each virtual CPU when it is created. The logical processor invalidates linear mappings and combined mappings associated with all VPIDs (except VPID 0000H), and with all PCIDs, when it launches the virtual CPU. The logical processor invalidates all linear mappings and combined mappings associated with a specified VPID when interrupt pending request handling needs to invalidate the cached mappings of that VPID.
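One simple way to hand out unique VPIDs is a monotonically increasing counter that skips VPID 0, which the processor uses outside VMX non-root operation. The sketch below is a single-threaded illustration with hypothetical names; a real allocator would need an atomic increment, and nothing here is taken from the ACRN sources.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define VPID_MAX 0xFFFFU  /* VPIDs are 16 bits wide */

/* Hypothetical allocator state: the next VPID to hand out. */
struct vpid_allocator_sketch {
    uint32_t next;
};

void vpid_allocator_init(struct vpid_allocator_sketch *a)
{
    assert(a != NULL);
    a->next = 1U;  /* VPID 0 is never given to a vCPU */
}

/* Return a fresh VPID, or 0 when the 16-bit space is exhausted. */
uint16_t vpid_alloc(struct vpid_allocator_sketch *a)
{
    assert(a != NULL);
    if (a->next > VPID_MAX) {
        return 0U;
    }
    return (uint16_t)a->next++;
}
```

A caller that receives 0 has no unique tag available for the vCPU and has to handle that case explicitly, for example by flushing on every switch.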

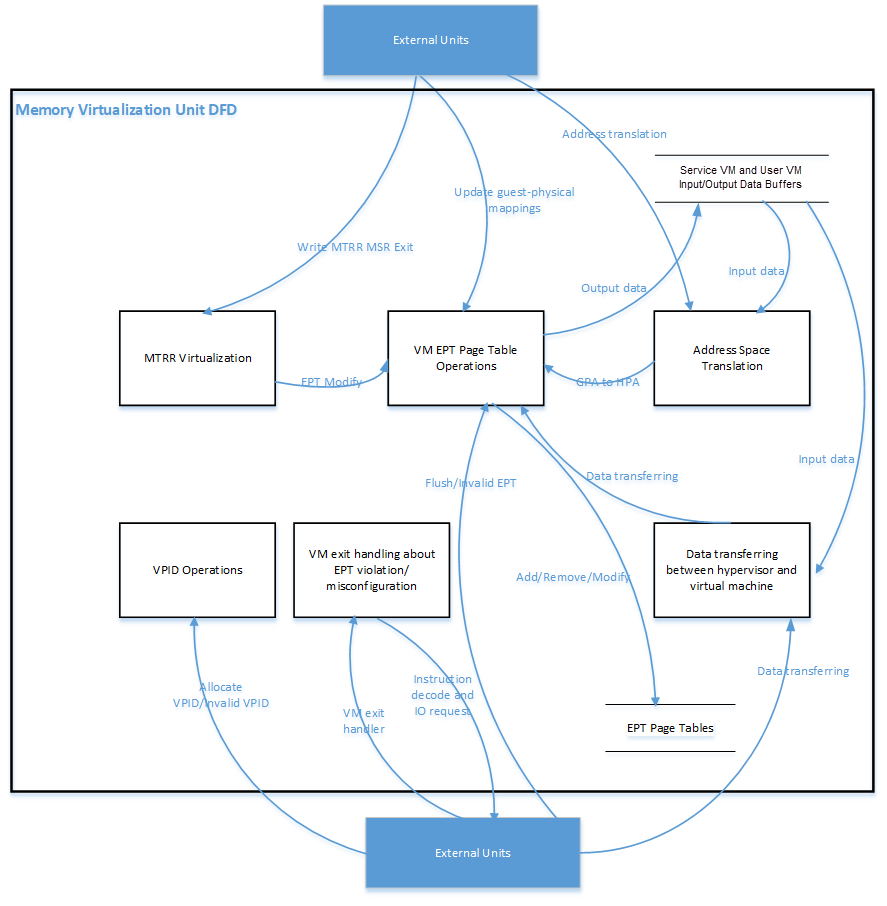

Data Flow Design¶

The memory virtualization unit includes address space translation functions, data transferring functions, VM EPT operations functions, VPID operations functions, VM exit handling for EPT violation and EPT misconfiguration, and MTRR virtualization functions. This unit handles guest-physical mapping updates by creating or updating the related EPT page tables. It virtualizes MTRRs for the guest OS by updating the related EPT page tables. It handles address translation from GPA to HPA by walking EPT page tables. It copies data from a VM into the HV, or from the HV to a VM, by walking guest MMU page tables and EPT page tables. It provides services to allocate a VPID for each virtual CPU and to perform VPID-related TLB invalidation. It handles VM exits caused by EPT violation and EPT misconfiguration. Figure 99 describes the data flow diagram of the memory virtualization unit.

Figure 99 Data Flow of Hypervisor Memory Virtualization¶

Data Structure Design¶

EPT Memory Type Definition:

-

EPT_MT_SHIFT¶

EPT memory type is specified in bits 5:3 of the EPT paging-structure entry.

-

EPT_UNCACHED¶

EPT memory type is uncacheable.

-

EPT_WC¶

EPT memory type is write combining.

-

EPT_WT¶

EPT memory type is write through.

-

EPT_WP¶

EPT memory type is write protected.

-

EPT_WB¶

EPT memory type is write back.

-

EPT_IGNORE_PAT¶

Ignore PAT memory type.

EPT Memory Access Right Definition:

-

EPT_RD¶

EPT memory access right is read-only.

-

EPT_WR¶

EPT memory access right is read/write.

-

EPT_EXE¶

EPT memory access right is executable.

-

EPT_RWX¶

EPT memory access right is read/write and executable.
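The definitions above combine into a single attribute value: access rights occupy bits 2:0, the memory type occupies bits 5:3, and bit 6 is the ignore-PAT flag, following the EPT entry layout in the Intel SDM. The sketch below uses SK_ prefixed names to make clear that these are illustrative constants and not copied from the ACRN header.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Illustrative constants following the EPT entry layout in the Intel SDM. */
#define SK_EPT_RD         (1ULL << 0)
#define SK_EPT_WR         (1ULL << 1)
#define SK_EPT_EXE        (1ULL << 2)
#define SK_EPT_RWX        (SK_EPT_RD | SK_EPT_WR | SK_EPT_EXE)
#define SK_EPT_MT_SHIFT   3U
#define SK_EPT_MT_MASK    (7ULL << SK_EPT_MT_SHIFT)
#define SK_EPT_UNCACHED   (0ULL << SK_EPT_MT_SHIFT)
#define SK_EPT_WC         (1ULL << SK_EPT_MT_SHIFT)
#define SK_EPT_WT         (4ULL << SK_EPT_MT_SHIFT)
#define SK_EPT_WP         (5ULL << SK_EPT_MT_SHIFT)
#define SK_EPT_WB         (6ULL << SK_EPT_MT_SHIFT)
#define SK_EPT_IGNORE_PAT (1ULL << 6)

static_assert((SK_EPT_RWX & SK_EPT_MT_MASK) == 0ULL,
              "access-right bits and memory-type bits do not overlap");

/* Extract the 3-bit memory type encoding from an attribute value. */
uint64_t ept_prot_mem_type(uint64_t prot)
{
    return (prot & SK_EPT_MT_MASK) >> SK_EPT_MT_SHIFT;
}

/* An entry with none of R/W/X set grants no access at all. */
bool ept_prot_grants_access(uint64_t prot)
{
    return (prot & SK_EPT_RWX) != 0ULL;
}
```

For ordinary guest RAM, a caller would typically combine full access with write-back caching, which under these constants is SK_EPT_RWX | SK_EPT_WB.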

Interfaces Design¶

The memory virtualization unit interacts with external units through VM exit and APIs.

VM Exit About EPT¶

There are two VM exit handlers for EPT violation and EPT misconfiguration in the hypervisor. EPT page tables are always configured correctly for the Service and User VMs. If an EPT misconfiguration is detected, a fatal error is reported by the HV. The hypervisor uses EPT violation to intercept MMIO access to do device emulation. EPT violation handling data flow is described in the Instruction Emulation.

Memory Virtualization APIs¶

Here is a list of major memory-related APIs in the HV:

EPT/VPID Capability Checking¶

Data Transferring Between Hypervisor and VM¶

-

int32_t copy_from_gpa(struct acrn_vm *vm, void *h_ptr, uint64_t gpa, uint32_t size)¶

Copy data from VM GPA space to HV address space.

- Preconditions

The caller (guest) must make sure the GPA range is continuous.

A GPA passed as hypercall input from the kernel stack is continuous; a kernel stack allocated through vmap is not supported.

For any other GPA passed as a hypercall parameter, the HSM must make sure it is continuous.

The pointer vm is non-NULL.

- Parameters

vm – [in] The pointer that points to VM data structure

h_ptr – [out] The pointer that points to the start HV address of the HV memory region which data will be copied into

gpa – [in] The start GPA address of the GPA memory region which data is stored in

size – [in] The size (bytes) of the GPA memory region which data is stored in

-

int32_t copy_to_gpa(struct acrn_vm *vm, void *h_ptr, uint64_t gpa, uint32_t size)¶

Copy data from HV address space to VM GPA space.

- Preconditions

The caller (guest) must make sure the GPA range is continuous.

A GPA passed as hypercall input from the kernel stack is continuous; a kernel stack allocated through vmap is not supported.

For any other GPA passed as a hypercall parameter, the HSM must make sure it is continuous.

The pointer vm is non-NULL.

- Parameters

vm – [in] The pointer that points to VM data structure

h_ptr – [in] The pointer that points to the start HV address of the HV memory region which data is stored in

gpa – [out] The start GPA address of GPA memory region which data will be copied into

size – [in] The size (bytes) of GPA memory region which data will be copied into

-

int32_t copy_from_gva(struct acrn_vcpu *vcpu, void *h_ptr, uint64_t gva, uint32_t size, uint32_t *err_code, uint64_t *fault_addr)¶

Copy data from VM GVA space to HV address space.

- Parameters

vcpu – [in] The pointer that points to vcpu data structure

h_ptr – [out] The pointer that returns the start HV address of HV memory region which data will be copied to

gva – [in] The start GVA address of GVA memory region which data is stored in

size – [in] The size (bytes) of GVA memory region which data is stored in

err_code – [out] The page fault flags

fault_addr – [out] The GVA address that causes a page fault

Address Space Translation¶

-

uint64_t gpa2hpa(struct acrn_vm *vm, uint64_t gpa)¶

Translating from guest-physical address to host-physical address.

- Parameters

vm – [in] the pointer that points to VM data structure

gpa – [in] the specified guest-physical address

- Return values

hpa – the host physical address that the gpa maps to

INVALID_HPA – the gpa parameter is not mapped to any HPA

-

uint64_t service_vm_hpa2gpa(uint64_t hpa)¶

Translating from host-physical address to guest-physical address for Service VM.

- Preconditions

The gpa and hpa are identically mapped in the Service VM.

- Parameters

hpa – [in] the specified host-physical address

EPT¶

-

void ept_add_mr(struct acrn_vm *vm, uint64_t *pml4_page, uint64_t hpa, uint64_t gpa, uint64_t size, uint64_t prot_orig)¶

Guest-physical memory region mapping.

- Parameters

vm – [in] the pointer that points to VM data structure

pml4_page – [in] The physical address of the EPTP

hpa – [in] The specified start host physical address of the host physical memory region that the GPA will be mapped to

gpa – [in] The specified start guest physical address of guest physical memory region that needs to be mapped

size – [in] The size of guest physical memory region that needs to be mapped

prot_orig – [in] The specified memory access right and memory type

- Returns

None

-

void ept_del_mr(struct acrn_vm *vm, uint64_t *pml4_page, uint64_t gpa, uint64_t size)¶

Guest-physical memory region unmapping.

- Preconditions

[gpa,gpa+size) has been mapped into host physical memory region

- Parameters

vm – [in] the pointer that points to VM data structure

pml4_page – [in] The physical address of the EPTP

gpa – [in] The specified start guest physical address of the guest physical memory region whose mapping needs to be deleted

size – [in] The size of guest physical memory region

- Returns

None

-

void ept_modify_mr(struct acrn_vm *vm, uint64_t *pml4_page, uint64_t gpa, uint64_t size, uint64_t prot_set, uint64_t prot_clr)¶

Guest-physical memory page access right or memory type updating.

- Parameters

vm – [in] the pointer that points to VM data structure

pml4_page – [in] The physical address of the EPTP

gpa – [in] The specified start guest physical address of the guest physical memory region whose mapping needs to be updated

size – [in] The size of guest physical memory region

prot_set – [in] The specified memory access right and memory type that will be set

prot_clr – [in] The specified memory access right and memory type that will be cleared

- Returns

None
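The prot_set and prot_clr parameters suggest a clear-then-set update of each affected entry. The sketch below shows that pattern on a single 64-bit entry. The order of operations, the attribute mask, and the function name are assumptions made for illustration; they are not confirmed by this document.

```c
#include <assert.h>
#include <stdint.h>

/* Sketch restriction: only the low 12 attribute bits may be changed, so
 * the address bits of the entry are never disturbed. */
#define SKETCH_ATTR_MASK 0xFFFULL

/* Clear the bits in prot_clr, then set the bits in prot_set. */
uint64_t apply_prot_update(uint64_t entry, uint64_t prot_set,
                           uint64_t prot_clr)
{
    assert(((prot_set | prot_clr) & ~SKETCH_ATTR_MASK) == 0ULL);
    return (entry & ~prot_clr) | prot_set;
}
```

With the EPT bit layout from the Intel SDM, changing a write-back RWX entry to uncached means clearing bits 5:3 and setting nothing, and revoking write access means clearing bit 1. If a bit appears in both masks, this ordering leaves it set.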

-

void destroy_ept(struct acrn_vm *vm)¶

EPT page tables destroy.

- Parameters

vm – [inout] the pointer that points to VM data structure

- Returns

None

-

void invept(const void *eptp)¶

Guest-physical mappings and combined mappings invalidation.

- Parameters

eptp – [in] the pointer that points to the EPTP

- Returns

None

-

int32_t ept_misconfig_vmexit_handler(struct acrn_vcpu *vcpu)¶

EPT misconfiguration handling.

- Parameters

vcpu – [in] the pointer that points to vcpu data structure

- Return values

-EINVAL – fail to handle the EPT misconfig

0 – Success to handle the EPT misconfig

-

void ept_flush_leaf_page(uint64_t *pge, uint64_t size)¶

Flush address space from the page entry.

- Parameters

pge – [in] the pointer that points to the page entry

size – [in] the size of the page

- Returns

None

-

void *get_eptp(struct acrn_vm *vm)¶

Get EPT pointer of the vm.

- Parameters

vm – [in] the pointer that points to VM data structure

- Return values

If the current context of vm is SECURE_WORLD, the EPT pointer of the secure world is returned; otherwise, the EPT pointer of the normal world is returned.

-

void walk_ept_table(struct acrn_vm *vm, pge_handler cb)¶

Walking through EPT table.

- Parameters

vm – [in] the pointer that points to VM data structure

cb – [in] the pointer that points to walk_ept_table callback, the callback will be invoked when getting a present page entry from EPT, and the callback could get the page entry and page size parameters.

- Returns

None

Virtual MTRR¶

-

void init_vmtrr(struct acrn_vcpu *vcpu)¶

Virtual MTRR initialization.

- Parameters

vcpu – [inout] The pointer that points to the vCPU data structure

- Returns

None

-

void write_vmtrr(struct acrn_vcpu *vcpu, uint32_t msr, uint64_t value)¶

Virtual MTRR MSR write.

- Parameters

vcpu – [inout] The pointer that points to the vCPU data structure

msr – [in] Virtual MTRR MSR Address

value – [in] The value that will be written into the virtual MTRR MSR

- Returns

None

-

uint64_t read_vmtrr(const struct acrn_vcpu *vcpu, uint32_t msr)¶

Virtual MTRR MSR read.

- Parameters

vcpu – [in] The pointer that points to the vCPU data structure

msr – [in] Virtual MTRR MSR Address

- Returns

The specified virtual MTRR MSR value

VPID¶

-

void flush_vpid_single(uint16_t vpid)¶

Specified single VPID flush.

- Parameters

vpid – [in] the specified VPID

- Returns

None

-

void flush_vpid_global(void)¶

All VPID flush.

- Returns

None

Service VM Memory Management¶

After the ACRN hypervisor starts, it creates the Service VM as its first VM. The Service VM runs all the native device drivers, manages the hardware devices, and provides I/O mediation to post-launched User VMs. The Service VM is in charge of the memory allocation for post-launched User VMs as well.

The ACRN hypervisor passes the whole system memory access (except its own part) to the Service VM. The Service VM must be able to access all of the system memory except the hypervisor part.

Guest Physical Memory Layout - E820¶

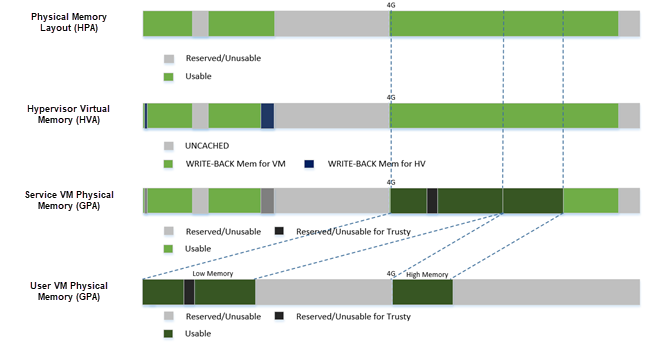

The ACRN hypervisor passes the original E820 table to the Service VM after filtering out its own part. From the Service VM’s view, it sees almost all the system memory as shown here:

Figure 100 Service VM Physical Memory Layout¶

Host to Guest Mapping¶

The ACRN hypervisor creates the Service VM’s guest (GPA) to host (HPA) mapping (EPT mapping) through the function prepare_service_vm_memmap() when it creates the Service VM. It follows these rules:

Identical mapping

Map all memory ranges with UNCACHED type

Remap RAM entries in E820 (revised) with WRITE-BACK type

Unmap ACRN hypervisor memory range

Unmap all platform EPC resources

Unmap ACRN hypervisor emulated vLAPIC/vIOAPIC MMIO range

The guest-to-host mapping is static for the Service VM; it does not change after the Service VM begins running, except that the Service VM can re-program PCI device BAR address mappings. For the Service VM, the hypervisor uses EPT violations to emulate the vLAPIC/vIOAPIC and the PCI MSI-X table BAR.

Trusty¶

For an Android User VM, there is a secure world named trusty world, whose memory must be secured by the ACRN hypervisor and must not be accessible by the Service VM and User VM normal world.

Figure 101 User VM Physical Memory Layout with Trusty¶